LXC : Install and configure the Linux Containers

Contents

- 1 Introduction

- 2 Installation

- 3 Configuration

- 4 Basic Usage

- 4.1 Create a container

- 4.2 List containers

- 4.3 Start a container

- 4.4 Plug to console

- 4.5 Stop a container

- 4.6 Force shutdown

- 4.7 Autostart on boot

- 4.8 Delete a container

- 4.9 Monitoring

- 4.10 Freeze

- 4.11 Unfreeze/Restore

- 4.12 Monitor changes

- 4.13 Trigger changes

- 4.14 Launch command in a running container

- 5 Convert/Migrate a VM/Host to a LXC container

- 6 Container configuration

- 7 FAQ

- 7.1 How could I know if I'm in a container or not ?

- 7.2 Can't connect to console

- 7.3 Can't create a LXC LVM container

- 7.4 Can't limit container memory or swap

- 7.5 I can't start my container, how could I debug ?

- 7.6 /usr/sbin/grub-probe: error: cannot find a device for / (is /dev mounted?)

- 7.7 telinit: /run/initctl: No such file or directory

- 7.8 Some containers are losing their IP address at boot

- 7.9 OpenVPN

- 7.10 LXC inception or Docker in LXC

- 7.11 LXC control device mapper

- 8 References

| Software version | 0.8 |
|---|---|
| Operating System | Debian 7 |
| Website | LXC Website |
| Last Update | 22/02/2015 |

1 Introduction

LXC is the userspace control package for Linux Containers, a lightweight virtual system mechanism sometimes described as “chroot on steroids”.

LXC builds up from chroot to implement complete virtual systems, adding resource management and isolation mechanisms to Linux’s existing process management infrastructure.

Linux Containers (lxc) implement:

- Resource management via “process control groups” (implemented via the cgroup filesystem)

- Resource isolation via new flags to the clone(2) system call (capable of creating several types of new namespaces for things like PIDs and network routing)

- Several additional isolation mechanisms (such as the “-o newinstance” flag to the devpts filesystem).

The LXC package combines these Linux kernel mechanisms to provide a userspace container object, a lightweight virtual system with full resource isolation and resource control for an application or a system.

Linux Containers take a completely different approach than system virtualization technologies such as KVM and Xen, which started by booting separate virtual systems on emulated hardware and then attempted to lower their overhead via paravirtualization and related mechanisms. Instead of retrofitting efficiency onto full isolation, LXC started out with an efficient mechanism (existing Linux process management) and added isolation, resulting in a system virtualization mechanism as scalable and portable as chroot, capable of simultaneously supporting thousands of emulated systems on a single server while also providing lightweight virtualization options to routers and smart phones.

The first objective of this project is to make life easier for the kernel developers involved in the containers project, and especially to keep working on the new Checkpoint/Restart features. lxc is small enough to manage a container easily with simple command lines, and complete enough to be used for other purposes.[1]

2 Installation

To install LXC, we do not need many packages (libvirt, which can also manage LXC containers, is installed later in the NAT configuration) :

| |

aptitude install lxc bridge-utils debootstrap git git-core |

At the time of writing, there is an issue with LVM container creation on Debian Wheezy (here is a first Debian bug and a second one), and it doesn't seem likely to be resolved soon.

Here is a workaround to avoid errors during LVM containers initialization :

On Jessie, you'll also have to install this:

| |

aptitude install cgroupfs-mount |

2.1 Kernel

A recent kernel is recommended: LXC evolves very fast, and newer kernels bring better performance, stability and new features. To get a newer kernel, we're going to use one from the testing repository :

Then add this testing content :

| |

# Testing

deb http://ftp.fr.debian.org/debian/ testing main non-free contrib

deb-src http://ftp.fr.debian.org/debian/ testing main non-free contrib |

Then you can install the latest kernel image :

| |

aptitude update

aptitude install linux-image-amd64 |

If that is not enough, install the package for the specific latest kernel version (e.g. linux-image-3.11-2-amd64), then reboot on this new kernel.

3 Configuration

3.1 Cgroups

LXC is based on cgroups, which are used to limit CPU, RAM, etc. You can check here for more information.

We need to enable Cgroups. Add this line in fstab :

| |

[...] cgroup /sys/fs/cgroup cgroup defaults 0 0 |

Memory and swap management for containers is not available by default. To activate it, add these cgroup arguments to the kernel command line in the GRUB configuration :

- cgroup RAM feature : "cgroup_enable=memory"

- cgroup SWAP feature : "swapaccount=1"

Then regenerate grub config :

| |

update-grub |

| |

| You'll need to reboot to make the cgroup memory feature active |

Then mount it :

| |

mount /sys/fs/cgroup |
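After the reboot, one way to confirm that the kernel really booted with both parameters is to look at its command line. The following is only a minimal sketch: the function name `has_cgroup_memory_flags` is hypothetical and not part of LXC.

```shell
# has_cgroup_memory_flags (hypothetical helper): succeed only if the given
# kernel command line contains both parameters described above.
has_cgroup_memory_flags() {
    cmdline=" $1 "
    case "$cmdline" in
        *" cgroup_enable=memory "*) ;;
        *) return 1 ;;
    esac
    case "$cmdline" in
        *" swapaccount=1 "*) ;;
        *) return 1 ;;
    esac
    return 0
}

# Usage on a live host:
#   has_cgroup_memory_flags "$(cat /proc/cmdline)" && echo "memory cgroup flags present"
```

The check is done on whole words, so a parameter such as `swapaccount=10` would not be accepted by mistake.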

3.2 Check LXC configuration

| |

| All should be enabled to ensure it will work as expected ! |

3.3 Network

3.3.1 No specific configuration (same as host)

If you don't configure the network after container initialization, your guests (containers) will have exactly the same configuration as your host. That means all network interfaces are available on the guests and they will have full access to the host.

| |

| This is not the recommended solution for production usage |

- The pro of this "no configuration" approach is that the network works out of the box for the guests (perfect for quick tests)

- The con is that guests see the network services of the host. For example, an SSH server running on the host has its port visible on the guest too, so you cannot run an SSH server on a guest without changing its port (otherwise you'll get a network binding conflict).

You can easily check this configuration by opening a port on the host (here 80) :

| |

nc -lp 80 |

Now, on a guest, you can see it listening :

| |

> netstat -aunt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN |

3.3.2 Nat configuration

How does NAT configuration work ?

- You need to choose which kind of configuration you want to use : libvirt or dnsmasq

- Iptables : lets NATed containers be reached from outside and gives containers Internet access

- Configure the container network

3.3.2.1 With Libvirt

You'll need to install libvirt first :

| |

aptitude install libvirt-bin |

NAT is the default configuration, but you may need to make some adjustments. Enable forwarding in sysctl :

| |

net.ipv4.ip_forward = 1 |

Check that the network is active :

| |

> virsh net-list --all

Name State Autostart

-----------------------------------------

default inactive no |

If it's not the case, then set the default network configuration :

| |

virsh net-define /etc/libvirt/qemu/networks/default.xml

virsh net-autostart default

virsh net-start default |

Then you should see it enabled :

| |

> virsh net-list --all

Name State Autostart

-----------------------------------------

default active yes |

Edit the configuration to add your range of IP :

Now it's done, restart libvirt.

3.3.2.2 With dnsmasq

Libvirt is not required, since for the moment it doesn't manage LXC containers very well. Instead, you can run your own dnsmasq server to provide DNS and DHCP to your containers. First of all, install it :

| |

aptitude install dnsmasq dnsmasq-utils |

Then configure it :

Then restart the dnsmasq service. And now configure the lxcbr0 interface :

3.3.2.3 Iptables

You may need to configure iptables, for example if you're on a dedicated box where the provider doesn't allow bridge configuration. Here is a working iptables configuration to permit incoming connections to NATed guests :

3.3.2.4 Nat on containers

3.3.2.4.1 DHCP

On each container that should use the NAT configuration, add these lines for DHCP configuration[2] :

Then in the LXC container (mount the LV if you did LVM) configure the network like this :

| |

auto eth0

iface eth0 inet dhcp |

3.3.2.4.2 Static IP

| |

| This is only applicable for Libvirt |

You can also configure a static IP manually by changing 'lxc.network.ipv4'. Another elegant method is to have DHCP assign a fixed address :

Do not forget to set the lxc.network.hwaddr parameter. Here is a way to generate a MAC address :
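One possible way to produce such an address from the shell is sketched below. This is not necessarily the author's original command: the helper name `gen_lxc_mac` is hypothetical, and the fixed 00:16:3e prefix (the Xen OUI, often reused for container and VM interfaces) is an assumption you may want to change.

```shell
# gen_lxc_mac (hypothetical helper): print a random MAC address.
# Assumption: the 00:16:3e prefix is acceptable on your network; the last
# three bytes are read from /dev/urandom.
gen_lxc_mac() {
    hex=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n')
    printf '00:16:3e:%s:%s:%s\n' \
        "$(printf '%s' "$hex" | cut -c1-2)" \
        "$(printf '%s' "$hex" | cut -c3-4)" \
        "$(printf '%s' "$hex" | cut -c5-6)"
}
```

The printed value can then be used as the right-hand side of `lxc.network.hwaddr` in the container configuration.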


3.3.3 Private container interface

You can create a private interface for your containers. Containers will be able to communicate with each other through this dedicated interface. Here are the steps to create one between 2 containers.

On the host server, install UML utilities :

| |

aptitude install uml-utilities |

Then edit the network configuration file and add a bridge :

| |

auto privbr0

iface privbr0 inet static

pre-up /usr/sbin/tunctl -t tap0

pre-up /sbin/ifup tap0

post-down /sbin/ifdown tap0

bridge_ports tap0

bridge_fd 0 |

You can restart your network or launch it manually if you can't restart now :

| |

tunctl -t tap0

brctl addbr privbr0

brctl addif privbr0 tap0 |

Then edit both containers that will have this dedicated interface and replace or add those lines :

| |

# Private interface

lxc.network.type = veth

lxc.network.flags = up

lxc.network.link = privbr0

lxc.network.ipv4 = 10.0.0.1 |

Now start the container and you'll have the 10.0.0.X dedicated network.

3.3.4 Bridged configuration

Now modify your /etc/network/interfaces file to add bridged configuration :

br0 is replacing eth0 for bridging.

Here is another configuration with 2 network cards and 2 bridges :

3.3.5 VLAN Bridged configuration

And a last one with bridged VLANs (look at this documentation to enable them first) :

We will need to use ebtables (iptables for bridged interfaces). Install it :

| |

aptitude install ebtables |

Check your ebtables configuration :

| |

EBTABLES_LOAD_ON_START="yes"

EBTABLES_SAVE_ON_STOP="yes"

EBTABLES_SAVE_ON_RESTART="yes" |

And enable VLAN tagging on bridged interfaces :

| |

ebtables -t broute -A BROUTING -i eth0 -p 802_1Q -j DROP |

3.4 Security

It's recommended to use a Grsecurity kernel (which may not be compatible with the testing kernel) or AppArmor.

With Grsecurity, here are the parameters[3] :

4 Basic Usage

4.1 Create a container

4.1.1 Classic method

To create a container with a wizard :

| |

lxc-create -n mycontainer -t debian

or

lxc-create -n mycontainer -t debian-wheezy

or

lxc-create -n mycontainer -t debian-wheezy -f /etc/lxc/lxc-nat.conf |

- n : the name of the container

- t : the template of the container. You can find the list in this folder : /usr/share/lxc/templates

- f : the configuration template

It will deploy a new container through debootstrap.

If you want to plug yourself to a container through the console, you first need to create devices :

Then you'll be able to connect :

| |

lxc-console -n mycontainer |

4.1.2 LVM method

If you're using LVM to store your containers (strongly recommended), you can ask LXC to create the logical volume and format it (mkfs) for you automatically :

| |

lxcname=mycontainerlvm

lxc-create -t debian-wheezy -n $lxcname -B lvm --vgname lxc --lvname $lxcname --fssize 4G --fstype ext3 |

- t : specify the desired template

- B : we want to use LVM as backend (BTRFS is also supported)

- vgname : set the name of the volume group (VG) in which the logical volume (LV) should be created

- lvname : set the desired LV name for that container

- fssize : set the size of the LV

- fstype : set the filesystem for this container (full list is available in /proc/filesystems)

4.1.3 BTRFS method

If your host has a btrfs /var, the LXC administration tools will detect this and automatically exploit it by cloning containers using btrfs snapshots.[4]

4.1.4 Templating configuration

You can template the configuration to simplify your deployments, which is useful when you need a specific LXC configuration. To do so, simply create a file (name it as you want) and add your LXC configuration (here the network configuration) :

You can then pass it with the -f argument when creating a container. You can create as many configurations as you want and place them wherever you want; I put mine in /etc/lxc.
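As an illustration of such a template, the sketch below writes a network-only configuration file. It is not the author's original file: the helper name `write_lxc_nat_template` is hypothetical, and the bridge name (lxcbr0, the interface mentioned in the dnsmasq section) is an assumption to adapt to your setup.

```shell
# write_lxc_nat_template (hypothetical helper): write a network-only LXC
# configuration template to the path given as first argument.
# Assumption: the second argument is the bridge name, lxcbr0 by default.
write_lxc_nat_template() {
    target=$1
    bridge=${2:-lxcbr0}
    cat > "$target" <<EOF
# Network configuration shared by NATed containers
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = $bridge
EOF
}
```

For example, `write_lxc_nat_template /etc/lxc/lxc-nat.conf` produces a file that can be given to `lxc-create` with `-f /etc/lxc/lxc-nat.conf`.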

4.2 List containers

You can list containers :

| |

> lxc-list

RUNNING

mycontainer

FROZEN

STOPPED

mycontainer2 |

If you want to list running containers :

| |

lxc-ls --active |

4.3 Start a container

To start a container as a daemon :

| |

lxc-start -n mycontainer -d |

- d : Run the container as a daemon. As the container no longer has a tty, nothing is displayed if an error occurs; use the log file to check the error.

4.4 Plug to console

You can connect to the console :

| |

lxc-console -n mycontainer |

If you have problems connecting to the container, see the "Can't connect to console" entry in the FAQ.

4.5 Stop a container

To stop a container :

| |

lxc-shutdown -n mycontainer

or

lxc-halt -n mycontainer |

| |

| You can't use lxc-halt/lxc-shutdown on an LVM-based container in the current Debian version (Wheezy) |

4.6 Force shutdown

If you need to force a container to halt :

| |

lxc-stop -n mycontainer |

4.7 Autostart on boot

If you need LXC containers to start automatically on boot, you'll need to create a symlink :

| |

ln -s /var/lib/lxc/mycontainer/config /etc/lxc/auto/mycontainer |

4.8 Delete a container

You can delete a container like this :

| |

lxc-destroy -n mycontainer |

| |

| This will remove all your data as well. Make a backup before destroying a container ! |

4.9 Monitoring

If you want to know the state of a container :

| |

lxc-info -n mycontainer

state: RUNNING

pid: 3034 |

Available states are :

- ABORTING

- RUNNING

- STARTING

- STOPPED

- STOPPING
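When scripting around these states, it can help to extract just the state value. The following is a minimal sketch, assuming the `lxc-info` output format shown above; the helper name `lxc_state_of` is hypothetical.

```shell
# lxc_state_of (hypothetical helper): read `lxc-info` output on stdin and
# print only the value of the state line. The comparison is case-insensitive
# in case the tool prints "State:" instead of "state:".
lxc_state_of() {
    awk 'tolower($1) == "state:" { print $2 }'
}

# Usage on a live host:
#   lxc-info -n mycontainer | lxc_state_of
```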

4.10 Freeze

If you want to freeze (suspend like) a container :

| |

lxc-freeze -n mycontainer |

4.11 Unfreeze/Restore

If you want to unfreeze (resume/restore like) a container :

| |

lxc-unfreeze -n mycontainer |

4.12 Monitor changes

You can monitor changes of a container with lxc-monitor :

You will see every state change of the container.

4.13 Trigger changes

You can also use the 'lxc-wait' command with the '-s' parameter to wait for a specific state and execute something afterward :

| |

lxc-wait -n mycontainer -s STOPPED && echo "Container stopped" | mail -s 'You need to restart it' deimos@deimos.fr |

4.14 Launch command in a running container

You can launch a command in a running container without being inside it :

| |

lxc-attach -n mycontainer -- /etc/init.d/cron restart |

This restarts the cron service in the "mycontainer" container.

5 Convert/Migrate a VM/Host to a LXC container

If you already have a machine running on KVM/VirtualBox or anything else and want to convert it to an LXC container, it's easy. I've written a script (strongly inspired by lxc-create) that helps me set up the missing elements. You can copy it into the /usr/bin folder :

To use it, first mount or copy all your data into the rootfs folder (make sure you have enough space), then launch the lxc-convert script as in this example :

Adapt the remote host to your distant SSH host, or rsync without SSH if possible. During the transfer, you need to exclude some folders to avoid errors (/proc, /sys, /dev). They will be recreated by lxc-convert.

Then you'll be able to start it :-)

6 Container configuration

Once you've initialized your container, there are a lot of interesting options. Here are some for a classical configuration :

For an LVM configuration :

6.1 Architectures

You can set the architecture of a container. For example, you can run an x86 container on an x86_64 kernel :

| |

lxc.arch=x86 |

6.2 Capabilities

You can specify the capability to be dropped in the container. A single line defining several capabilities with a space separation is allowed. The format is the lower case of the capability definition without the "CAP_" prefix, eg. CAP_SYS_MODULE should be specified as sys_module. You can see the complete list of linux capabilities with explanations by reading the man page :

| |

man 7 capabilities |

6.3 Devices

You can manage (allow/deny) the devices accessible from your containers. By default, everything is disabled :

| |

## Devices

# Allow all devices

#lxc.cgroup.devices.allow = a

# Deny all devices

lxc.cgroup.devices.deny = a |

- a : means all devices

You can then allow some of them easily[5] :

| |

lxc.cgroup.devices.allow = c 5:1 rwm # dev/console

lxc.cgroup.devices.allow = c 5:0 rwm # dev/tty

lxc.cgroup.devices.allow = c 4:0 rwm # dev/tty0 |

To get the complete list of allowed devices :

| |

lxc-cgroup -n mycontainer devices.list |

To get a better understanding of this, here are explanations[6] :

lxc.cgroup.devices.allow = <type> <major>:<minor> <perms>

- <type> : a (all), b (block), c (char)

- <major> : major number

- <minor> : minor number (wildcard is accepted)

- <perms> : r (read), w (write), m (mknod)
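To avoid looking up major and minor numbers by hand, the line can be derived from an existing device node. This is only a sketch: the helper name `device_allow_line` is hypothetical, and it assumes GNU stat (whose `%t` and `%T` formats print the major and minor numbers in hexadecimal).

```shell
# device_allow_line (hypothetical helper): print the lxc.cgroup.devices.allow
# line matching an existing device node. Second argument: permissions
# (rwm by default). Assumption: GNU stat is available.
device_allow_line() {
    dev=$1
    perms=${2:-rwm}
    if [ -c "$dev" ]; then
        kind=c
    elif [ -b "$dev" ]; then
        kind=b
    else
        echo "$dev is not a device node" >&2
        return 1
    fi
    major=$(stat -c '%t' "$dev")
    minor=$(stat -c '%T' "$dev")
    # stat prints hexadecimal values, the devices cgroup expects decimal ones
    echo "lxc.cgroup.devices.allow = $kind $((0x$major)):$((0x$minor)) $perms"
}
```

For instance, `device_allow_line /dev/net/tun` on the host gives the line to paste in the container configuration for that device.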

6.4 Container limits (cgroups)

If you've never played with cgroups, look at my documentation. With LXC, here are the available ways to set up cgroups for your containers :

- You can change cgroups values with lxc-cgroup command (on the fly):

| |

lxc-cgroup -n <container_name> <cgroup-name> <value> |

- You can directly write to the cgroup filesystem (on the fly):

| |

echo <value> > /sys/fs/cgroup/lxc/<container_name>/<cgroup-name> |

- And set it directly in the config file (persistent way) :

| |

lxc.cgroup.<cgroup-name> = <value> |

Cgroups can be changed on the fly.

| |

| Be careful when you reduce some of them, especially memory (make sure you do not set a limit below what is currently used). |

If you want to see available cgroups for a container :

6.4.1 CPU

6.4.1.1 CPU Pinning

If you want to bind some CPUs/cores to a VM/container, there is a solution called CPU pinning :-). First, look at the available cores on your server :

You can see there are 8 processors (numbered 0 to 7). In fact there are 4 cores with 2 threads each on this CPU. That's why there are 4 core ids and 8 detected processors.

So here is the list of the cores with their attached processors :

core id 0 : processors 0 and 4

core id 1 : processors 1 and 5

core id 2 : processors 2 and 6

core id 3 : processors 3 and 7
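A mapping like the one above can be derived from /proc/cpuinfo instead of being read by eye. The following is a minimal sketch, assuming the usual "processor" and "core id" fields of /proc/cpuinfo on x86 and a single CPU socket; the helper name `core_map` is hypothetical.

```shell
# core_map (hypothetical helper): read /proc/cpuinfo-style text on stdin and
# print, for each core id, the processors attached to it.
# Assumption: one socket only (core ids are not unique across sockets).
core_map() {
    awk -F': *' '
        /^processor/ { proc = $2 }
        /^core id/   { map[$2] = (map[$2] == "" ? proc : map[$2] " " proc) }
        END { for (id in map) print "core id " id " : processors " map[id] }
    ' | sort
}

# Usage on a live host:
#   core_map < /proc/cpuinfo
```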

Now if I want to dedicate a set of cores to a container :

| |

lxc.cgroup.cpuset.cpus = 0-3 |

This assigns the first 4 cores to the container. If I only want CPUs 1 and 3 :

| |

lxc.cgroup.cpuset.cpus = 1,3 |

6.4.1.1.1 Check CPU assignments

You can check that CPU pinning is working for a container. On the host, launch "htop" for example, and in the container launch stress :

| |

aptitude install stress

stress --cpu 2 --timeout 10s |

This will stress 2 CPUs at 100% for 10 seconds. You'll see the htop CPU bars at 100%. If I change 2 to 3 while only 2 CPUs are bound, only 2 will be at 100% :-)

6.4.1.2 Scheduler

This is the other method to assign CPU to a container. You give each container a weight so that the scheduler can decide how much CPU time each one gets. For instance, if one container is set to 512 and another to 1024, the second one gets twice as much CPU time as the first. To edit this property :

| |

lxc.cgroup.cpu.shares = 512 |
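To make the weights concrete: when all containers compete for the CPU, each one receives its shares divided by the sum of all shares. The sketch below only does that arithmetic; the helper name `cpu_share_percent` is hypothetical, and shares only matter when the CPU is actually contended.

```shell
# cpu_share_percent (hypothetical helper): the first argument is the
# cpu.shares value of one container, the remaining arguments are the
# cpu.shares values of all competing containers (that one included).
# Prints the resulting CPU percentage, rounded down.
cpu_share_percent() {
    mine=$1
    shift
    total=0
    for s in "$@"; do
        total=$((total + s))
    done
    echo $((mine * 100 / total))
}
```

With the two containers of the example, `cpu_share_percent 512 512 1024` prints 33 and `cpu_share_percent 1024 512 1024` prints 66, i.e. one third versus two thirds of the CPU time.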

If you need more documentation, look at the kernel page[7].

6.4.2 Memory

You can limit the memory in a container like this :

| |

lxc.cgroup.memory.limit_in_bytes = 128M |

If you get an error when trying to limit memory, check the FAQ.

6.4.2.1 Check memory from the host

You can check memory from the host like that[8] :

- Current memory usage:

| |

cat /sys/fs/cgroup/lxc/mycontainer/memory.usage_in_bytes |

- Current memory + swap usage:

| |

cat /sys/fs/cgroup/lxc/mycontainer/memory.memsw.usage_in_bytes |

- Maximum memory usage:

| |

cat /sys/fs/cgroup/lxc/mycontainer/memory.max_usage_in_bytes |

- Maximum memory + swap usage :

| |

cat /sys/fs/cgroup/lxc/mycontainer/memory.memsw.max_usage_in_bytes |

Here is an easier way to read this information :

| |

awk '{ printf "%sK\n", $1/ 1024 }' /sys/fs/cgroup/lxc/mycontainer/memory.usage_in_bytes

awk '{ printf "%sM\n", $1/ 1024 / 1024 }' /sys/fs/cgroup/lxc/mycontainer/memory.usage_in_bytes |

6.4.2.2 Check memory in the container

The current problem is that you can't check, from inside the container, how much memory has been set and is available. For the moment /proc/meminfo is not correctly updated[9]. If you need to validate the memory available to a container, you have to write fake data into the allocated memory area to trigger the memory checks of the kernel/virtualization tool.

Memory overcommit is a Linux kernel feature that lets applications allocate more memory than is actually available. The idea behind this feature is that some applications allocate large amounts of memory just in case, but never actually use it. Thus, memory overcommit allows you to run more applications than actually fit in your memory, provided the applications don’t actually use the memory they have allocated. If they do, then the kernel (via OOM killer) terminates the application.

Here is the code[10] :

Then compile it (with gcc) :

| |

aptitude install gcc

gcc memory_allocation.c -o memory_allocation |

You can now run the test :

6.4.3 SWAP

You can limit the swap in a container like this :

| |

lxc.cgroup.memory.memsw.limit_in_bytes = 192M |

| |

| This limit is not only SWAP but Memory + SWAP |

That means that "lxc.cgroup.memory.memsw.limit_in_bytes" should be at least equal to "lxc.cgroup.memory.limit_in_bytes".

If you get an error when trying to limit swap, check the FAQ.
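The relation between the two limits can be checked before writing them to a configuration file. This is only a sketch: the helper names `to_bytes` and `memsw_limit_ok` are hypothetical, and only the K, M and G suffixes (powers of 1024) are handled.

```shell
# to_bytes (hypothetical helper): convert a size such as 128M or 1G to bytes.
to_bytes() {
    num=${1%[KkMmGg]}
    case "$1" in
        *[Kk]) echo $((num * 1024)) ;;
        *[Mm]) echo $((num * 1024 * 1024)) ;;
        *[Gg]) echo $((num * 1024 * 1024 * 1024)) ;;
        *)     echo "$num" ;;
    esac
}

# memsw_limit_ok MEMORY_LIMIT MEMSW_LIMIT (hypothetical helper): succeed if
# the memory+swap limit is at least equal to the memory limit.
memsw_limit_ok() {
    [ "$(to_bytes "$2")" -ge "$(to_bytes "$1")" ]
}
```

With the values used in this page, `memsw_limit_ok 128M 192M` succeeds: the container gets 128M of memory plus up to 64M of swap.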

6.4.4 Disks

By default, LXC doesn't provide any disk limitation. However, there are enough solutions today to implement that kind of limitation :

- LVM : create one LV per container

- BTRFS : using integrated BTRFS quotas

- ZFS : if you're using ZFS on Linux, you can use integrated zfs/zpool quotas

- Quotas : using classical Linux quotas (not the recommended solution)

- Disk image : you can use QCOW/QCOW2/RAW/QED images

6.4.4.1 Mount

| |

| You should take care if you want to create a mount entry in a subdirectory of /mnt. |

It won't work so easily. The reason this happens is that by default 'mnt' is the directory used as pivotdir, where the old_root is placed during pivot_root(). After that, everything under pivotdir is unmounted.

A workaround is to specify an alternate 'lxc.pivotdir' in the container configuration file.[11]

6.4.4.1.1 Block Device

You can mount block devices by adding lines like this to your container configuration (adapt to your needs) :

| |

lxc.mount.entry = /dev/sdb1 /var/lib/lxc/mycontainer/rootfs/mnt ext4 rw 0 2 |

6.4.4.1.2 Bind mount

You can also bind mount directories like this (adapt to your needs) :

| |

lxc.mount.entry = /path/in/host/mount_point /var/lib/lxc/mycontainer/rootfs/mount_point none bind 0 0 |

6.4.4.2 Disk priority

You can set disk priority like that (default is 500):

| |

lxc.cgroup.blkio.weight = 500 |

The higher the value, the higher the priority. You can get more information here. The maximum value is 1000 and the lowest is 10.

| |

| You need to have CFQ scheduler to make it work properly |

6.4.4.3 Disk bandwidth

Another solution is to limit bandwidth usage, but the Wheezy kernel doesn't have "CONFIG_BLK_DEV_THROTTLING" enabled. You need to use a testing/unstable kernel instead, or recompile one with this option enabled. To do this, follow the kernel procedure.

Then, you'll be able to limit bandwidth like this. The value has the form "major:minor bytes_per_second"; 8:0 below is only an example device number, adapt it to your disk :

| |

# Limit reads to 1MB/s on device 8:0

lxc.cgroup.blkio.throttle.read_bps_device = 8:0 1048576 |

6.4.5 Network

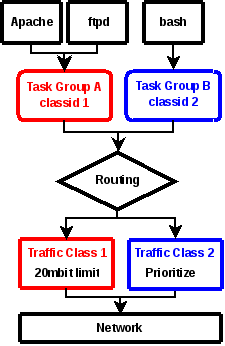

You can limit network bandwidth using native kernel QOS directly on cgroups. For example, we have 2 containers : A and B. To get a good understanding, look at this schema :

Now you've understand how it could looks like. Now if I want to limit a container to 30Mb and the other one to 40Mb, here is how I should achieve it. Assign IDs on containers that should have quality of service :

| |

echo 0x100001 > /sys/fs/cgroup/lxc/<containerA>/net_cls.classid

echo 0x100002 > /sys/fs/cgroup/lxc/<containerB>/net_cls.classid |

- 0x100001 : corresponding to 10:1 (the format is 0xAAAABBBB, AAAA being the major handle number and BBBB the minor one)

- 0x100002 : corresponding to 10:2
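The value written to net_cls.classid is the tc handle packed as 0xAAAABBBB, where AAAA is the major number and BBBB the minor number, both hexadecimal. A small sketch of that conversion follows; the helper name `tc_classid` is hypothetical.

```shell
# tc_classid (hypothetical helper): convert a tc handle MAJOR:MINOR (both
# hexadecimal, as tc writes them) into the 0xAAAABBBB value expected by
# net_cls.classid.
tc_classid() {
    major=${1%%:*}
    minor=${1##*:}
    printf '0x%x\n' $(( (0x$major << 16) | 0x$minor ))
}
```

For example, `tc_classid 10:1` prints 0x100001, which is the value to write for the class 10:1 used in the tc commands of this section.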

Then select the desired QOS algorithm (HTB) :

| |

tc qdisc add dev eth0 root handle 10: htb |

Choose the desired bandwidth on containers IDs :

| |

tc class add dev eth0 parent 10: classid 10:1 htb rate 40mbit

tc class add dev eth0 parent 10: classid 10:2 htb rate 30mbit |

Enable filtering :

| |

tc filter add dev eth0 parent 10: protocol ip prio 10 handle 1: cgroup |

6.5 Resources statistics

Unfortunately, you can't get this information from inside the containers; however, you can get it from the host. Here is a little script to do it:

Here is the result:

7 FAQ

7.1 How could I know if I'm in a container or not ?

There's an easy way to know that :

| |

> cat /proc/$$/cgroup

1:perf_event,blkio,net_cls,freezer,devices,memory,cpuacct,cpu,cpuset:/lxc/mycontainer |

The path at the end of the line shows the name of the container I am in ("mycontainer" here).
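The same check can be scripted. The sketch below assumes the /lxc/NAME cgroup path layout shown above; the helper name `container_name_from_cgroup` is hypothetical.

```shell
# container_name_from_cgroup (hypothetical helper): read /proc/<pid>/cgroup
# content on stdin and print the LXC container name, or nothing when the
# process is not under an /lxc/ cgroup path.
container_name_from_cgroup() {
    sed -n 's|^[^:]*:[^:]*:/lxc/\([^/]*\).*$|\1|p' | head -n 1
}

# Usage:
#   container_name_from_cgroup < /proc/self/cgroup
```

An empty result means the path does not start with /lxc/, which is what you would expect on the host itself.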

7.2 Can't connect to console

If you want to plug yourself to a container through the console, you first need to create devices :

Then you'll be able to connect :

| |

lxc-console -n mycontainer |

7.3 Can't create a LXC LVM container

If you get this kind of error during LVM container creation :

Copying local cache to /var/lib/lxc/mycontainerlvm/rootfs...

/usr/share/lxc/templates/lxc-debian: line 101: /var/lib/lxc/mycontainerlvm/rootfs/etc/apt/sources.list.d/debian.list: No such file or directory

/usr/share/lxc/templates/lxc-debian: line 107: /var/lib/lxc/mycontainerlvm/rootfs/etc/apt/sources.list.d/debian.list: No such file or directory

/usr/share/lxc/templates/lxc-debian: line 111: /var/lib/lxc/mycontainerlvm/rootfs/etc/apt/sources.list.d/debian.list: No such file or directory

/usr/share/lxc/templates/lxc-debian: line 115: /var/lib/lxc/mycontainerlvm/rootfs/etc/apt/sources.list.d/debian.list: No such file or directory

/usr/share/lxc/templates/lxc-debian: line 183: /var/lib/lxc/mycontainerlvm/rootfs/etc/fstab: No such file or directory

mount: mount point /var/lib/lxc/mycontainerlvm/rootfs/dev/pts does not exist

mount: mount point /var/lib/lxc/mycontainerlvm/rootfs/proc does not exist

mount: mount point /var/lib/lxc/mycontainerlvm/rootfs/sys does not exist

mount: mount point /var/lib/lxc/mycontainerlvm/rootfs/var/cache/apt/archives does not exist

/usr/share/lxc/templates/lxc-debian: line 49: /var/lib/lxc/mycontainerlvm/rootfs/etc/dpkg/dpkg.cfg.d/lxc-debconf: No such file or directory

/usr/share/lxc/templates/lxc-debian: line 55: /var/lib/lxc/mycontainerlvm/rootfs/usr/sbin/policy-rc.d: No such file or directory

chmod: cannot access `/var/lib/lxc/mycontainerlvm/rootfs/usr/sbin/policy-rc.d': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

umount: /var/lib/lxc/mycontainerlvm/rootfs/var/cache/apt/archives: not found

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

chroot: failed to run command `/usr/bin/env': No such file or directory

umount: /var/lib/lxc/mycontainerlvm/rootfs/dev/pts: not found

umount: /var/lib/lxc/mycontainerlvm/rootfs/proc: not found

umount: /var/lib/lxc/mycontainerlvm/rootfs/sys: not found

'debian' template installed

Unmounting LVM

'mycontainerlvm' created

This is because of a Debian bug that the maintainer doesn't want to fix :-(. Here is a workaround.

7.4 Can't limit container memory or swap

If you can't limit container memory and have this kind of issue :

| |

> lxc-cgroup -n mycontainer memory.limit_in_bytes "128M"

lxc-cgroup: cgroup is not mounted

lxc-cgroup: failed to assign '128M' value to 'memory.limit_in_bytes' for 'mycontainer' |

This is because the cgroup memory controller is not enabled in your kernel. You can check it like this :

| |

> cat /proc/cgroups

#subsys_name hierarchy num_cgroups enabled

cpuset 1 4 1

cpu 1 4 1

cpuacct 1 4 1

memory 0 1 0

devices 1 4 1

freezer 1 4 1

net_cls 1 4 1

blkio 1 4 1

perf_event 1 4 1 |

Memory and swap management for containers is not available by default. To activate it, add these cgroup arguments to the kernel command line in the GRUB configuration :

- cgroup RAM feature : "cgroup_enable=memory"

- cgroup SWAP feature : "swapaccount=1"

Then regenerate grub config :

| |

update-grub |

Now reboot to make changes available.

After reboot you can check that memory is activated :

| |

> cat /proc/cgroups

#subsys_name hierarchy num_cgroups enabled

cpuset 1 6 1

cpu 1 6 1

cpuacct 1 6 1

memory 1 6 1

devices 1 6 1

freezer 1 6 1

net_cls 1 6 1

blkio 1 6 1

perf_event 1 6 1 |

Another way to check is the mount command :

| |

> mount | grep cgroup

cgroup on /sys/fs/cgroup type cgroup (rw,relatime,perf_event,blkio,net_cls,freezer,devices,memory,cpuacct,cpu,cpuset,clone_children) |

You can see that memory is available here.[13]
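The check on /proc/cgroups can also be automated, for example in a provisioning script. A minimal sketch, assuming the four-column format shown above; the helper name `memory_cgroup_enabled` is hypothetical.

```shell
# memory_cgroup_enabled (hypothetical helper): read /proc/cgroups content on
# stdin and succeed only if the "memory" line has 1 in its "enabled" column.
memory_cgroup_enabled() {
    awk '$1 == "memory" { found = 1; ok = ($4 == 1) }
         END { exit !(found && ok) }'
}

# Usage on a live host:
#   memory_cgroup_enabled < /proc/cgroups || echo "memory cgroup is disabled"
```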

7.5 I can't start my container, how could I debug ?

You can debug a container at boot this way :

| |

lxc-start -n mycontainer -l debug -o debug.out |

Now you can look at debug.out and see what's wrong.

7.6 /usr/sbin/grub-probe: error: cannot find a device for / (is /dev mounted?)

I got a dpkg error while upgrading an LXC container running Debian, because grub couldn't find /.

To resolve that issue, I had to permanently remove grub and grub-pc. Then the system accepted to remove the kernel.

7.7 telinit: /run/initctl: No such file or directory

If you get this kind of error when you want to properly shut down your LXC container, you need to create a device in your container:

| |

mknod -m 600 /var/lib/lxc/<container_name>/rootfs/run/initctl p |

And then add this in the container configuration file:

| |

lxc.cap.drop = sys_admin |

You can now shut it down properly without any issue :-)

7.8 Some containers are losing their IP address at boot

If some of your containers lose their static IP at boot[14], there is a solution. The first thing to do to recover is:

| |

ifdown eth0 && ifup eth0 |

But this is only a temporary solution. What you actually need is to add the IP address of your container, with its CIDR, to the LXC configuration file:

| |

lxc.network.ipv4 = 192.168.0.50/24

lxc.network.ipv4.gateway = auto |

The automatic gateway setting gives the container, as its gateway, the IP of the interface to which the container is attached. Then modify the network configuration inside the container and change the eth0 interface from static to manual. You should have something like this:

| |

allow-hotplug eth0

iface eth0 inet manual |

You're now OK: on the next reboot the IP will be configured automatically by LXC, and it will work every time.

7.9 OpenVPN

To make OpenVPN work, you need to allow tun devices. In the LXC configuration, simply add this:

| |

lxc.cgroup.devices.allow = c 10:200 rwm |

And in the container, create it:

| |

mkdir /dev/net

mknod /dev/net/tun c 10 200

chmod 0666 /dev/net/tun |

7.10 LXC inception or Docker in LXC

To get Docker in LXC or LXC in LXC working, you need to have some packages installed inside the LXC container:

| |

apt-get install cgroup-bin libcgroup1 cgroupfs-mount |

In the container configuration, you also need to have that line:

| |

lxc.mount.auto = cgroup |

Then it's ok :-)

7.11 LXC control device mapper

In Docker, you may want to use the devicemapper driver. To get it working, you need to let your LXC container control device mapper devices. To do so, just add these 2 lines to your container configuration:

| |

lxc.cgroup.devices.allow = c 10:236 rwm

lxc.cgroup.devices.allow = b 252:* rwm |

8 References

- [1] http://lxc.sourceforge.net/

- [2] http://pi.lastr.us/doku.php/virtualizacion:lxc:digitalocean-wheezy

- [3] http://philpep.org/blog/lxc-sur-debian-squeeze

- [4] https://help.ubuntu.com/lts/serverguide/lxc.html

- [5] http://lwn.net/Articles/273208/

- [6] http://wiki.rot13.org/rot13/index.cgi?action=display_html;page_name=lxc

- [7] https://www.kernel.org/doc/Documentation/scheduler/sched-design-CFS.txt

- [8] http://www.mattfischer.com/blog/?p=399

- [9] http://webcache.googleusercontent.com/search?q=cache:vWmLMNBRKIYJ:comments.gmane.org/gmane.linux.kernel.containers/23094+&cd=6&hl=fr&ct=clnk&gl=fr&client=firefox-a

- [10] http://www.jotschi.de/Uncategorized/2010/11/11/memory-allocation-test.html

- [11] https://bugs.launchpad.net/ubuntu/+source/lxc/+bug/986385

- [12] http://vger.kernel.org/netconf2009_slides/Network%20Control%20Group%20Whitepaper.odt

- [13] http://vin0x64.fr/2012/01/debian-limite-de-memoire-sur-conteneur-lxc/

- [14] http://serverfault.com/questions/571714/setting-up-bridged-lxc-containers-with-static-ips/586577#586577

http://www.pointroot.org/index.php/2013/05/12/installation-du-systeme-de-virtualisation-lxc-linux-containers-sur-debian-wheezy/

http://box.matto.nl/lxconlaptop.html


http://debian-handbook.info/browse/stable/sect.virtualization.html

http://www.fitzdsl.net/2012/12/installation-dun-conteneur-lxc-sur-dedibox/

http://freedomboxblog.nl/installing-lxc-dhcp-and-dns-on-my-freedombox/

http://containerops.org/2013/11/19/lxc-networking/