KVM: Setting up KVM


| Software version | 0.12.5 |
|---|---|
| Operating System | Debian 6 |
| Website | KVM Website |
| Last Update | 03/03/2015 |

1 Introduction

KVM (for Kernel-based Virtual Machine) is a full virtualization solution for Linux on x86 hardware containing virtualization extensions (Intel VT or AMD-V). It consists of a loadable kernel module, kvm.ko, that provides the core virtualization infrastructure, and a processor-specific module, kvm-intel.ko or kvm-amd.ko. KVM also requires a modified QEMU, although work is underway to get the required changes upstream.

Using KVM, one can run multiple virtual machines running unmodified Linux or Windows images. Each virtual machine has private virtualized hardware: a network card, disk, graphics adapter, etc.

The kernel component of KVM is included in mainline Linux, as of 2.6.20.

KVM is open source software.

2 Install

To install and run KVM on Debian, follow these steps:

Run these commands as root:

```bash
aptitude update
aptitude install kvm qemu bridge-utils libvirt-bin virtinst
```

- qemu: required, this is the base emulator

- kvm: full hardware acceleration (requires a processor with the vmx or svm extensions)

- bridge-utils: tools for bridging the VMs' network interfaces

- libvirt (libvirt-bin): a management layer for your VMs

- virtinst: provides the virt-install command

Depending on whether you are using an AMD or an Intel processor, run one of these commands:

```bash
modprobe kvm-amd
```

or

```bash
modprobe kvm-intel
```

Then add the module name to /etc/modules so that it is loaded at boot (here for an Intel processor):

```
kvm-intel
```
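If you prefer not to check the processor by hand, the choice can be scripted. This is a small sketch that is not part of the original setup: `kvm_module_for_cpu` is a hypothetical helper that looks for the vmx (Intel VT-x) or svm (AMD-V) flag in /proc/cpuinfo and prints the matching module name. The cpuinfo path is a parameter only so that the function can be tried on a saved copy.

```bash
# Print the KVM module matching the CPU: the "vmx" flag means Intel VT-x
# (kvm-intel), "svm" means AMD-V (kvm-amd). Returns 1 if neither flag is
# present, i.e. KVM acceleration is not available on this CPU.
kvm_module_for_cpu() {
    cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qw vmx "$cpuinfo"; then
        echo kvm-intel
    elif grep -qw svm "$cpuinfo"; then
        echo kvm-amd
    else
        echo "no vmx or svm flag found" >&2
        return 1
    fi
}

# Usage, as root: load the module now and add it to /etc/modules once.
# mod=$(kvm_module_for_cpu) && modprobe "$mod" &&
#     { grep -qx "$mod" /etc/modules || echo "$mod" >> /etc/modules; }
```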

3 Configuration

3.1 System performance

To get the best possible performance, we need to set a few specific options.

3.1.1 Disks

First, set the disk I/O scheduler to deadline on the kernel command line, through GRUB:
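The GRUB setting itself is missing from this page. A minimal sketch, assuming GRUB 2 (Debian's default bootloader), where kernel parameters are set in /etc/default/grub and "quiet" is the existing default option; `elevator=deadline` is the kernel parameter that selects the deadline scheduler for all disks:

```bash
# /etc/default/grub (excerpt): update-grub reads this file as shell
# variable assignments. Keep your existing options and append elevator=deadline.
GRUB_CMDLINE_LINUX_DEFAULT="quiet elevator=deadline"
```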

Then update GRUB:

```bash
update-grub
```
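After rebooting, you can confirm which scheduler a disk actually uses: the kernel lists the available schedulers in /sys/block/<disk>/queue/scheduler and puts the active one in brackets (for example `noop anticipatory [deadline] cfq`). A small hypothetical helper, assuming the disk is sda unless told otherwise:

```bash
# Print the active I/O scheduler of a block device, i.e. the entry the
# kernel shows in brackets in /sys/block/<dev>/queue/scheduler.
# The second argument (file to read) is only there for testing.
active_scheduler() {
    dev="${1:-sda}"
    file="${2:-/sys/block/$dev/queue/scheduler}"
    sed -n 's/.*\[\([^]]*\)].*/\1/p' "$file"
}

# Usage: active_scheduler sda    (should print "deadline" after the reboot)
```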

Next, when your VMs use LVM partitions, disable the host cache (this reduces data copies and bus traffic) and use the virtio drivers, which are the fastest:

```bash
virt-install ... --disk path=/dev/vg-name/lv-name,cache=none,bus=virtio ...
```

3.1.2 Memory

Then, we will enable KSM. Kernel Samepage Merging (KSM) is a feature of the Linux kernel introduced in version 2.6.32. KSM allows an application to register with the kernel to have its pages merged with those of other processes that have also registered. For KVM, this mechanism lets guest virtual machines share identical memory pages with each other. In an environment where many of the guest operating systems are similar, this can result in significant memory savings.

To enable it, add this line:
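The line itself is missing from this page. KSM is switched on by writing 1 to /sys/kernel/mm/ksm/run (0 stops it). A sketch, assuming you want this done at boot from /etc/rc.local, which the page does not state; `enable_ksm` is a hypothetical helper whose directory argument exists only so that it can be tried on a scratch directory:

```bash
# Start KSM by writing 1 to its "run" control file (0 stops it).
# Must run as root on a real system.
enable_ksm() {
    ksm_dir="${1:-/sys/kernel/mm/ksm}"
    echo 1 > "$ksm_dir/run"
}

# Equivalent line for /etc/rc.local (before "exit 0"):
# echo 1 > /sys/kernel/mm/ksm/run
```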

You can check the status of KSM at any time with:

```bash
for i in /sys/kernel/mm/ksm/*; do echo -n "$i: "; cat "$i"; done
```
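Among these files, pages_sharing counts the page mappings that KSM has deduplicated onto shared pages, so the memory saved is roughly pages_sharing multiplied by the page size. A hedged sketch of that arithmetic; `ksm_saved_mib` is a hypothetical helper, and the page size defaults to the value reported by `getconf PAGESIZE` (typically 4096 bytes on x86):

```bash
# Approximate memory saved by KSM, in MiB:
# pages_sharing x page size / (1024 * 1024).
ksm_saved_mib() {
    ksm_dir="${1:-/sys/kernel/mm/ksm}"
    page_size="${2:-$(getconf PAGESIZE)}"
    sharing=$(cat "$ksm_dir/pages_sharing")
    echo $(( sharing * page_size / 1024 / 1024 ))
}

# Example: 25600 shared mappings of 4 KiB pages -> 100 MiB saved.
```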

In addition, we will lower swappiness so that the host swaps as little as possible. Add these lines to /etc/sysctl.conf:

```
# Swappiness
vm.swappiness = 1
```

3.1.3 Network

For performance reasons, and so that the host's iptables, ip6tables and arptables rules are not applied to bridged guest traffic, disable netfilter on bridged interfaces by adding these 3 lines to /etc/sysctl.conf:

```
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0
```
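Settings added to /etc/sysctl.conf can be loaded without rebooting with `sysctl -p`. To confirm that they took effect, each key can be read back from /proc/sys, where the dots of the key become directory levels. The following hypothetical helper sketches that check; note that the net.bridge keys only exist once the bridge module is loaded.

```bash
# Compare each "key = value" line of a sysctl.conf-style file with the
# live value under /proc/sys. Prints mismatches; returns 1 if any.
# Comment lines (# or ;) and blank lines are skipped; spaces and tabs
# are ignored when comparing.
check_sysctl_file() {
    conf="$1"
    root="${2:-/proc/sys}"
    status=0
    while IFS='=' read -r key value; do
        key=$(printf '%s' "$key" | tr -d ' \t')
        value=$(printf '%s' "$value" | tr -d ' \t')
        case "$key" in ''|'#'*|';'*) continue ;; esac
        path="$root/$(printf '%s' "$key" | tr . /)"
        live=$(cat "$path" 2>/dev/null | tr -d ' \t')
        if [ "$live" != "$value" ]; then
            echo "$key: expected $value, got ${live:-<missing>}"
            status=1
        fi
    done < "$conf"
    return "$status"
}

# Usage: sysctl -p && check_sysctl_file /etc/sysctl.conf
```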

When you create the VMs' network interfaces, attach them to the bridge and use the virtio model (libvirt creates the tap device for you):

```bash
virt-install ... --network bridge=br0,model=virtio ...
```

3.1.4 Virtio

To always have VirtIO available for maximum performance, load these modules. They are the guest-side drivers, so add them to /etc/modules inside the guest:

```
virtio_blk
virtio_pci
virtio_net
```

3.2 Add user to group

- The installation of kvm created a new system group named kvm in /etc/group. You need to add the user accounts that will run kvm to this group (replace username with the user account name to add):

```bash
adduser username kvm
```

- If you use libvirt (recommended), add your user to the libvirt group too:

```bash
adduser username libvirt
```
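Group membership only applies to new login sessions, so log out and back in after running adduser. To check the result, `id -nG <user>` lists a user's groups; here is a small hypothetical wrapper around it:

```bash
# Succeed (return 0) if the given user is a member of the given group,
# according to the account database queried by `id -nG`.
in_group() {
    id -nG "$1" | tr ' ' '\n' | grep -qx "$2"
}

# Usage: in_group username kvm && in_group username libvirt && echo OK
```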

3.3 Storage

There are 2 kinds of storage:

- Disk image: easier, but slower

- LVM logical volume: requires some LVM knowledge, but is faster and simpler to back up (LVM snapshots)

3.3.1 Create a disk image

Create a virtual disk image (10 GB in this example). A qcow2 image grows as data is written, so the file is small at first and only takes as much space as is actually used; `qemu-img info disk0.qcow2` shows both the virtual size and the space used on disk:

```bash
qemu-img create -f qcow2 disk0.qcow2 10G
```

You can also use QED, a format designed to be faster than qcow2:

```bash
qemu-img create -f qed disk0.qed 10G
```

3.3.2 Create LVM LV

To create a logical volume:

```bash
lvcreate -L 4G -n vm-name vg-name
```

- -L: size of the logical volume

- -n: name of the logical volume (here, the name of the VM)

- vg-name: replace with your volume group name

3.4 Network Interfaces

3.4.1 Bridged configuration

Now modify your /etc/network/interfaces file to add a bridged configuration:
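The configuration itself is missing from this page. As a sketch only, assuming a static address and the bridge_* options that bridge-utils adds to /etc/network/interfaces, here is a hypothetical helper that prints such a stanza; the addresses in the usage line are placeholders. The existing eth0 stanza has to be removed, since the IP address moves to the bridge.

```bash
# Print an /etc/network/interfaces stanza for a bridge that enslaves a
# physical NIC and carries the host's static IP configuration.
# Arguments: bridge, NIC, address, netmask, gateway.
bridge_stanza() {
    bridge="$1" nic="$2" address="$3" netmask="$4" gateway="$5"
    cat <<EOF
auto $bridge
iface $bridge inet static
    address $address
    netmask $netmask
    gateway $gateway
    bridge_ports $nic
    bridge_stp off
    bridge_fd 0
    bridge_maxwait 0
EOF
}

# Usage (review the output before adding it to /etc/network/interfaces):
# bridge_stanza br0 eth0 192.168.0.10 255.255.255.0 192.168.0.254
```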

br0 replaces eth0 for bridging: the host's IP configuration moves to the bridge, and eth0 becomes one of its ports.

Here is another configuration, with 2 network cards and 2 bridges:

3.4.2 VLAN Bridged configuration

And a last one, with bridged VLANs (see this documentation to enable VLAN support first):

We need ebtables (the equivalent of iptables for bridged interfaces). Install it:

```bash
aptitude install ebtables
```

Check your ebtables configuration in /etc/default/ebtables:

```bash
EBTABLES_LOAD_ON_START="yes"
EBTABLES_SAVE_ON_STOP="yes"
EBTABLES_SAVE_ON_RESTART="yes"
```

Then make the bridge pass 802.1Q-tagged frames arriving on eth0 up to the host's VLAN interfaces instead of bridging them (in the broute table, DROP means "route, do not bridge"):

```bash
ebtables -t broute -A BROUTING -i eth0 -p 802_1Q -j DROP
```

3.4.3 NAT configuration

NAT is libvirt's default configuration, but you may need to make some adjustments. Enable IP forwarding in /etc/sysctl.conf:

```
net.ipv4.ip_forward = 1
```

Check that the default network is active:

```
> virsh net-list --all
Name                 State      Autostart
-----------------------------------------
default              inactive   no
```

If it is not, define the default network, mark it for autostart and start it:

```bash
virsh net-define /etc/libvirt/qemu/networks/default.xml
virsh net-autostart default
virsh net-start default
```

It should now be active:

```
> virsh net-list --all
Name                 State      Autostart
-----------------------------------------
default              active     yes
```

Edit the configuration to set your IP range:

Once done, restart libvirt (/etc/init.d/libvirt-bin restart).

3.4.3.1 Iptables

You may need to configure iptables, for example on a dedicated server whose provider does not allow bridged configurations. Here is a working iptables configuration to allow incoming connections to NATed guests:
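The configuration itself is missing from this page. As a hedged sketch of the usual approach (not necessarily the author's rules), forwarding a host port to a NATed guest takes a DNAT rule in the nat table plus an ACCEPT rule in FORWARD, inserted ahead of the REJECT rules libvirt adds for its NAT network. The hypothetical helper below only prints the commands so you can review them before running them as root; 192.168.122.0/24 is libvirt's default NAT subnet and the guest address is a placeholder. libvirt may re-add its own rules at the top of the chains when its network restarts, so check the rule order afterwards.

```bash
# Print (do not run) the iptables commands that forward TCP port
# <host_port> on interface <ext_if> to <guest_ip>:<guest_port>.
forward_port_rules() {
    ext_if="$1" host_port="$2" guest_ip="$3" guest_port="$4"
    # Rewrite the destination of incoming packets to the guest.
    echo "iptables -t nat -A PREROUTING -i $ext_if -p tcp --dport $host_port -j DNAT --to-destination $guest_ip:$guest_port"
    # Insert (-I) at the top of FORWARD so this ACCEPT is evaluated
    # before libvirt's REJECT rules for the NAT network.
    echo "iptables -I FORWARD -d $guest_ip -p tcp --dport $guest_port -j ACCEPT"
}

# Usage: forward host port 2222 to SSH on a guest, then apply as root:
# forward_port_rules eth0 2222 192.168.122.10 22 | sh
```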

4 Create a VM

4.1 New method (with libvirt)

- To create a VM with a disk image and a bridged configuration:

- If you are using LVM and a bridged configuration:

Now a configuration file for this VM has been created in /etc/libvirt/qemu.

- If you are using LVM and a NATed configuration:
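The virt-install commands for these cases are missing from this page. The following is a hedged sketch, not the author's commands: `create_vm` is a hypothetical wrapper using standard virt-install options, with the disk on virtio and the host cache disabled as recommended in section 3.1.1, and a virtio network card. The fifth argument selects the network: `bridge=br0` for a bridged VM or `network=default` for libvirt's NAT network. For a disk image, pass the image path (created beforehand with qemu-img) instead of the logical volume. Setting VIRT_INSTALL=echo prints the command instead of running it.

```bash
# Start the installation of a VM with virt-install.
# Arguments: name, RAM in MB, disk path (LV or image), install ISO,
# network (default: bridge=br0; use network=default for NAT).
create_vm() {
    name="$1" ram_mb="$2" disk="$3" iso="$4" net="${5:-bridge=br0}"
    ${VIRT_INSTALL:-virt-install} --connect qemu:///system \
        --name "$name" --ram "$ram_mb" --vcpus 1 \
        --disk "path=$disk,bus=virtio,cache=none" \
        --network "$net,model=virtio" \
        --cdrom "$iso" --vnc --os-type linux
}

# LVM + bridged: create_vm web 1024 /dev/vg-name/web /srv/iso/install.iso
# LVM + NAT:     create_vm web 1024 /dev/vg-name/web /srv/iso/install.iso network=default
```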

4.2 Old method (without libvirt)

For a clean installation of a KVM guest, you can create one script per VM.

Here is an example script that launches a KVM guest using VNC for display instead of an X11 window:
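The script itself is missing from this page. A minimal sketch, assuming the qemu-kvm options of that era (-drive, -net, -cdrom, -boot, -vnc); the disk, ISO and memory values are placeholders. `-vnc :1` serves VNC display 1 (TCP port 5901), `-boot d` boots from the CD-ROM for the installation, and `-net user` gives the guest user-mode networking without any bridge. Setting KVM=echo prints the command instead of running it.

```bash
# Hypothetical per-VM launcher: boot a guest installer from an ISO,
# exporting the display over VNC instead of opening an X11 window.
launch_vm() {
    disk="$1"         # image created beforehand with qemu-img
    iso="$2"          # installation CD image
    mem="${3:-512}"   # RAM in MB
    ${KVM:-kvm} -m "$mem" \
        -drive "file=$disk,if=virtio" \
        -net nic,model=virtio -net user \
        -cdrom "$iso" -boot d \
        -vnc :1
}

# In the VM's script: launch_vm disk0.qcow2 install.iso 512
```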

5 Manage VM

5.1 Manual method

After installation is complete, run it with:

```bash
kvm -hda vdisk.img -m 384
```

Here is a good solution for *BSD guests:

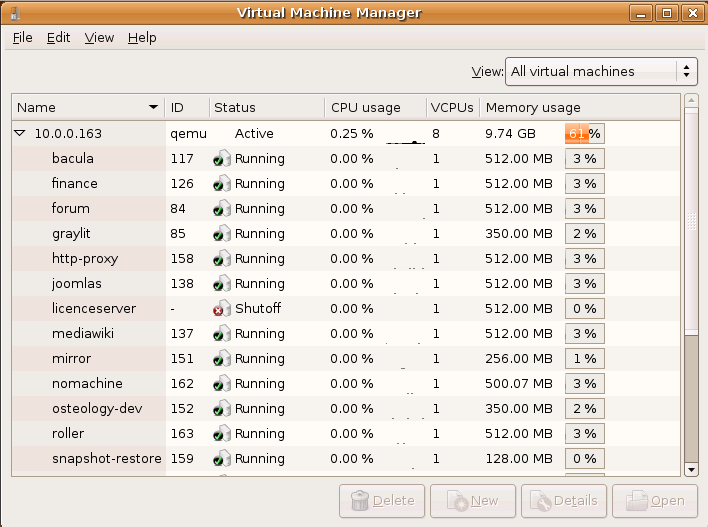

5.2 Virt Manager (GUI)

You can install this GUI locally or on a remote machine to manage your VMs:

```bash
apt-get install virt-manager virt-viewer
```

Then connect to the host, locally or remotely, and double-click a VM to open its console (a VNC display).

5.3 virsh (command line)

Use the virsh command to manage your VMs. Here is a list of useful examples.

5.3.1 Start, stop, list

- List VMs:

```
$ virsh list --all
 Id Name                 State
----------------------------------
  2 Mails                running
  4 Backups              running
  6 Web                  running
```

- Start a VM:

```bash
virsh start vm_name
```

- Shut down a VM gracefully:

```bash
virsh shutdown vm_name
```

- Force a VM to power off (like pulling the plug):

```bash
virsh destroy vm_name
```
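For scripting, the `virsh list` output can be parsed: after the two header lines, the second column is the name and the last column the state. A hypothetical helper, assuming domain names without spaces (awk splits fields on whitespace); combined with `virsh shutdown`, it gracefully stops every running VM:

```bash
# Read `virsh list --all` output on stdin and print the names of the
# domains whose state is "running" (header and separator lines skipped).
running_vms() {
    awk 'NR > 2 && $NF == "running" { print $2 }'
}

# Usage: gracefully shut down every running VM
# virsh list --all | running_vms | while read -r vm; do virsh shutdown "$vm"; done
```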

5.3.2 Suspend, restore

- Suspend a VM:

```bash
virsh suspend vm_name
```

- Resume a VM:

```bash
virsh resume vm_name
```

5.3.3 Delete

- Delete a VM's configuration file (its XML in /etc/libvirt/qemu/); the disks are not deleted:

```bash
virsh undefine vm_name
```

5.3.4 Backups, restore

- Save a VM: its memory state (not its disks) is written to a file and the VM stops running:

```bash
virsh save vm_name vm_name.dump
```

- Restore a VM:

```bash
virsh restore vm_name.dump
```

5.3.5 Autostart

To make a VM start automatically at boot:

```bash
virsh autostart <vm_name>
```

6 Snapshots

There is a lot to know about managing snapshots (I suggest that link). There are 2 kinds of snapshots:

- Internal: snapshots are stored inside the image file

- External: snapshots are stored in a separate image file

6.1 Create a snapshot

To create a snapshot:

```bash
virsh snapshot-create-as --domain <vm_name> <snapshot_name> <snapshot_description> --disk-only --diskspec vda,snapshot=external,file=/mnt/vms/<snapshot_name>.qed --atomic
```

- <vm_name>: the VM's domain name

- <snapshot_name>: the name of the snapshot (not a file name, but the name virsh will display)

- <snapshot_description>: a description of the snapshot

- vda: the VM disk device to snapshot

- file=/mnt/vms/<snapshot_name>.qed: the full path of the snapshot file (where it is stored)

- --atomic: the snapshot either succeeds completely or fails without changing anything

Once the command has run, the base VM disk (not the snapshot) becomes read-only and the snapshot is read/write: all new writes go to the snapshot file. You can then copy the base image for your backup if you want.

You can check which file the VM is currently writing to:

```
> virsh domblklist pma_qedtest
Target     Source
------------------------------------------------
vda        /mnt/vms/vms/pma_qedtest-snap.qed
```
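In a backup script, the same output can tell you which file a disk currently points to, for instance to check that the snapshot is active before copying the base. A hypothetical helper, assuming paths without spaces:

```bash
# Read `virsh domblklist <vm>` output on stdin and print the source file
# of the given target device (default vda), skipping the two header lines.
disk_source() {
    awk -v target="${1:-vda}" 'NR > 2 && $1 == target { print $2 }'
}

# Usage: virsh domblklist pma_qedtest | disk_source vda
```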

6.2 External snapshot

With external snapshots, there are several ways to merge the snapshot chain back into a single image (blockcommit, blockpull...). Each has its pros and cons.

6.2.1 blockpull

This is the simplest and oldest way to merge the base into the snapshot:

```bash
virsh blockpull --domain <vm_name> --path /mnt/vms/<snapshot_name>.qed
```

When it has finished (watch the disk activity with iotop), the snapshot no longer depends on the base image, so you can remove the base and keep the snapshot.

6.2.2 blockcommit

blockcommit is my favorite way to make backups. The current problem is that it is not available on Debian 7: it requires virsh 0.10.2 or later, which is only in Debian unstable for the moment. If you have this version, here is how to do it.

After creating the snapshot, we have something like this:

[base(1)]--->[snapshot(2)]

To merge the snapshot into the base and end up with a single disk file:

[base(1)]<---[snapshot(2)]

which gives:

[base(2)]

run:

```bash
virsh blockcommit --domain <vm_name> vda --base /mnt/vms/<base_name>.qed --top /mnt/vms/<snapshot_name>.qed --wait --verbose
```

- --base: the current read-only base disk

- --top: the snapshot disk file to merge into the base

7 Others

7.1 Reload configuration

If you have modified a VM's XML file and want to reload it:

```bash
virsh define /etc/libvirt/qemu/my_vm.xml
```

7.2 Serial Port Connection

To connect to a VM's serial port, the guest must first be configured to use it; then connect with virsh:

```bash
virsh console <vm_name>
```
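On the guest side, something must listen on the serial port. As a sketch, assuming a Debian 6 guest using sysvinit, whose /etc/inittab ships a commented-out getty line for ttyS0: the hypothetical helper below adds that line once, after which `init q` makes init reread the file. Adding `console=ttyS0` to the guest kernel command line also sends boot messages there, and the VM's libvirt XML must define a serial device.

```bash
# Guest side: have sysvinit spawn a login prompt on the first serial
# port. The inittab path is a parameter so this can be tried on a copy.
enable_serial_getty() {
    inittab="${1:-/etc/inittab}"
    line='T0:23:respawn:/sbin/getty -L ttyS0 9600 vt100'
    # Append the line only if it is not already active (idempotent).
    grep -qxF "$line" "$inittab" || echo "$line" >> "$inittab"
}

# Usage, as root inside the guest: enable_serial_getty && init q
```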

7.3 Add a disk to an existing VM

This is very simple (my VM is called 'ed'):

```bash
virsh attach-disk vmname /dev/mapper/vg-lv sdb
```

- vmname: the name of your VM

- /dev/mapper/vg-lv: the disk to attach

- sdb: the device name shown inside the VM

On Debian 6 there is a small bug, so the configuration needs to be fixed by hand. First replace the stored configuration with the running one, then shut the VM down:

```bash
vmname=ed
virsh dumpxml "$vmname" > /etc/libvirt/qemu/"$vmname".xml
virsh shutdown "$vmname"
```

Then edit your VM's XML file and change the driver name from 'phy' to 'qemu':

```xml
[...]
<disk type='block' device='disk'>
  <driver name='phy' type='raw'/>
  [...]
```
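The same edit can be scripted. A hypothetical one-line helper, assuming GNU sed (for in-place editing with a .bak backup) and the single-quoted attribute style shown above:

```bash
# Replace driver name 'phy' with 'qemu' in a libvirt domain XML file,
# keeping the original as <file>.bak (GNU sed in-place editing).
fix_phy_driver() {
    sed -i.bak "s/<driver name='phy'/<driver name='qemu'/g" "$1"
}

# Usage: fix_phy_driver /etc/libvirt/qemu/"$vmname".xml
```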

And load it:

```bash
virsh define /etc/libvirt/qemu/"$vmname".xml
```

Now you can start your VM.

7.4 Bind CPU/Cores to a VM

If you want to bind some CPUs/cores to a VM or container, there is a solution called CPU pinning :-). First, look at the available cores on your server:

You can see 8 processors (logical CPUs). In fact, this CPU has 4 physical cores with 2 threads each, which is why there are only 4 distinct core ids for 8 processors.

Here is the list of the physical cores with their processors:

- core id 0: processors 0 and 4

- core id 1: processors 1 and 5

- core id 2: processors 2 and 6

- core id 3: processors 3 and 7

Now, to give a VM a dedicated core together with its additional thread, I prefer to create one virtual CPU (vcpu) per host processor (here 2) and pin them to the right processors. First, look at the current configuration:

```
> virsh vcpuinfo vmname
VCPU:           0
CPU:            6
State:          running
CPU time:       7,5s
CPU Affinity:   yyyyyyyy
```

You can see there is only 1 vcpu, and that it may run on any of the host's processors (there is one 'y' per allowed processor in CPU Affinity, here 8). For the best performance, give the VM as many vcpus as the number of host processors you want to dedicate to it. So let's add some:

```bash
virsh setvcpus <vmname> <number_of_vcpus>
```

Here, for example, we set 4 vcpus, so the VM sees 4 CPUs. We then pin all 4 vcpus to the same set of host processors: 2, 6, 3 and 7 (physical cores 2 and 3 with their sibling threads). Why? Because each vcpu may then run on whichever of these 4 processors is free: an application that is not multithreaded still gets a free processor, and a multithreaded one can use all 4. Either way, performance should be good :-).

```bash
virsh vcpupin vmname 0 2,6,3,7
virsh vcpupin vmname 1 2,6,3,7
virsh vcpupin vmname 2 2,6,3,7
virsh vcpupin vmname 3 2,6,3,7
```

So the VM now has 4 virtual CPUs (0 to 3), pinned to 2 physical cores (2 and 3) and their associated threads (6 and 7).
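With more vcpus, the repeated vcpupin commands are easier to write as a loop. A hypothetical helper, assuming vcpus are numbered from 0; setting VIRSH=echo prints the commands instead of running them:

```bash
# Pin vcpus 0..count-1 of a domain to the same host cpuset.
# Arguments: domain, number of vcpus, cpuset (e.g. 2,6,3,7).
pin_all_vcpus() {
    vm="$1" count="$2" cpuset="$3"
    i=0
    while [ "$i" -lt "$count" ]; do
        ${VIRSH:-virsh} vcpupin "$vm" "$i" "$cpuset"
        i=$((i + 1))
    done
}

# Equivalent to the four vcpupin commands above:
# pin_all_vcpus vmname 4 2,6,3,7
```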

On Debian 6, this is applied on the fly but not saved permanently in the configuration. That's why you also need to add the cpuset parameter to your VM's XML:

```xml
[...]
<vcpu cpuset='2,6,3,7'>4</vcpu>
[...]
```

Do not forget to apply the new configuration:

```bash
virsh define /etc/libvirt/qemu/vmname.xml
```

You can now check the new configuration (with virsh vcpuinfo, as above):

If you want to know more about how cpusets work, follow that link.

8 Others

8.1 Convert a disk-image-based VM to an LVM partition

You may have a couple of VMs based on disk images such as qcow2 and want to convert them to LVM partitions. Fortunately, there is a solution! First convert your qcow2 image to raw format:

```bash
kvm-img convert -O raw disk0.qcow2 disk0.raw
```

and then write the raw data to the logical volume, which must be at least as large as the image's virtual size:

```bash
dd if=disk0.raw of=/dev/vg-name/lv-name bs=1M
```

Now edit your VM's XML file and make these changes:

Now reload the VM's XML file:

```bash
virsh define /etc/libvirt/qemu/vm.xml
```

And now you can start the VM :-)

8.2 Convert an LVM partition to a disk image

To convert an LVM volume to QED, for example, adapt and run this command:

```bash
qemu-img convert -O qed /dev/vg_name/lv_name /var/lib/libvirt/images/image_name.qed
```

Then edit the VM's libvirt configuration file like this:

```xml
<disk type='file' device='disk'>
  <driver name='qemu' type='qed'/>
  <source file='/var/lib/libvirt/images/image_name.qed'/>
  [...]
```

Now reload the VM's XML file:

```bash
virsh define /etc/libvirt/qemu/vm.xml
```

And now you can start the VM :-)

8.3 Transfer an LVM-based VM

If you need to move an LVM-based VM from one server to another, there is an easy solution. First stop the virtual machine so that the data is consistent, then transfer the volume (a logical volume of at least the same size must already exist on the new server):

```bash
dd if=/dev/vgname/lvname bs=1M | ssh root@new-server 'dd of=/dev/vgname/lvname bs=1M'
```

Do not forget to transfer the VM's XML configuration file as well, adapting the LVM disk names if needed. Then run "virsh define" on the new XML file.

8.4 Graphical access to VMs without Virt Manager

You can access your VMs graphically without installing any manager. First, make sure the VM was created with the --vnc option, or that you use this option when launching it.

If that is not the case and you are using libvirt, add this to the VM's XML:

```xml
...
    <graphics type='vnc' port='-1' autoport='yes' keymap='fr'/>
  </devices>
</domain>
```

Once this is done, change the address libvirt's VNC server listens on. By default it listens on 127.0.0.1, which is the most secure choice. However, you may have a secure LAN and want to open access to it. Edit /etc/libvirt/qemu.conf and bind VNC to your server's IP address on that network:

```
vnc_listen = "192.168.0.1"
```

If you also need secure VNC connections, enable TLS (vnc_tls) in the same configuration file.

Then restart or reload libvirt-bin.
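A VNC client can then connect to the host directly. With autoport, libvirt picks the display number itself; `virsh vncdisplay <vm>` prints it (for example `:1`), and VNC display N listens on TCP port 5900 + N. A hypothetical helper for that conversion:

```bash
# Convert a VNC display as printed by `virsh vncdisplay <vm>` (":1" or
# "192.168.0.1:1") into the TCP port it listens on: 5900 + display.
vnc_port() {
    display="${1##*:}"
    echo $((5900 + display))
}

# Usage: vnc_port "$(virsh vncdisplay vm_name)"   # e.g. :1 -> 5901
```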

8.5 Suspend guest VMs on host shutdown

If your desktop hosts several VMs, it can be useful to suspend them automatically when you restart your computer, for example. The libvirt-guests service makes this easy: simply edit its configuration file, /etc/default/libvirt-guests:
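The settings are missing from this page. A sketch, assuming the standard libvirt-guests variables (the init script reads this file as shell assignments): ON_SHUTDOWN=suspend saves the state of the running guests when the host stops, and ON_BOOT=start brings those guests back at boot.

```bash
# /etc/default/libvirt-guests (excerpt)
ON_BOOT=start          # start again the guests that were running at shutdown
ON_SHUTDOWN=suspend    # save the running guests' state on host shutdown
```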

That's all :-)

9 FAQ

Read the manual page for more information:

```bash
man kvm
```

9.1 warning: could not open /dev/net/tun: no virtual network emulation

This happens when kvm tries to open the tun device without the necessary permissions. Simply run your kvm command with sudo.

- Run through the installer as usual

- On completion and reboot, the VM will perpetually reboot. "Stop" the VM.

- Start it up again, immediately open a VNC console and select Safe Boot from the options screen

- When prompted if you want to try and recover the boot block, say yes

- You should now have a Bourne terminal with your existing filesystem mounted on /a

- Run /a/usr/bin/bash (my preferred shell)

- export TERM=xterm

- vi /a/boot/grub/menu.lst (editing the bootloader on your mounted filesystem) to add "kernel/unix" to the kernel options of the normal (non-safe-mode) boot entry. For example:

```
... kernel$ /platform/i86pc/multiboot -B $ZFS-BOOTFS kernel/unix ...
```

- Save the file and restart the VM - that's it!

9.3 error: Timed out during operation: cannot acquire state change lock

If you get this kind of error while starting a VM:

```
error: Failed to start domain <vm>
error: Timed out during operation: cannot acquire state change lock
```

it is due to a bug and can be resolved like this:

```bash
/etc/init.d/libvirt-bin stop
rm -Rf /var/run/libvirt
/etc/init.d/libvirt-bin start
```

10 Resources

https://help.ubuntu.com/community/KVM

Documentation for Speeding up QEMU with KVM and KQEMU

Documentation on using KVM on Ubuntu

Virtualization With KVM

KVM Guest Management With Virt-Manager

http://www.linux-kvm.org/page/Using_VirtIO_NIC

http://blog.loftninjas.org/2008/10/22/kvm-virtio-network-performance/

http://www.linux-kvm.org/page/Tuning_KVM

http://blog.bodhizazen.net/linux/improve-kvm-performance/

http://blog.allanglesit.com/2011/05/linux-kvm-vlan-tagging-for-guest-connectivity/

https://wiki.archlinux.org/index.php/KVM#Enabling_KSM

http://fr.gentoo-wiki.com/wiki/Libvirt

http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/6/html/Virtualization_Host_Configuration_and_Guest_Installation_Guide/chap-Virtualization_Host_Configuration_and_Guest_Installation_Guide-Network_Configuration.html#sect-Virtualization_Host_Configuration_and_Guest_Installation_Guide-Network_Configuration-Network_address_translation_NAT_with_libvirt

http://docs.fedoraproject.org/en-US/Fedora/13/html/Virtualization_Guide/ch25s06.html

http://berrange.com/posts/2010/02/12/controlling-guest-cpu-numa-affinity-in-libvirt-with-qemu-kvm-xen/

http://wiki.kartbuilding.net/index.php/KVM_Setup_on_Debian_Squeeze

http://kashyapc.fedorapeople.org/virt/lc-2012/snapshots-handout.html

http://fedoraproject.org/wiki/Features/Virt_Live_Snapshots

https://www.redhat.com/archives/libvirt-users/2012-September/msg00063.html