Fluentd: quickly search in your logs with Elasticsearch, Kibana and Fluentd


| Software version | 1.1.19-1 |
|---|---|
| Operating System | Debian 7 |
| Website | Fluentd Website |
| Last Update | 10/05/2014 |
| Others | Elasticsearch 1.1, Kibana 3.0.1 |

1 Introduction

Managing logs with classical syslog systems (syslog-ng, rsyslog...) is not a complicated task. However, searching them quickly when you have several gigabytes of logs, with scalability and a nice graphical interface, is another matter.

Fortunately, tools that do this very well exist today. Here is the list of tools we're going to use:

- Elasticsearch[1]: Elasticsearch is a flexible and powerful open source, distributed, real-time search and analytics engine. Architected from the ground up for use in distributed environments where reliability and scalability are must-haves, Elasticsearch gives you the ability to move easily beyond simple full-text search. Through its robust set of APIs and query DSLs, plus clients for the most popular programming languages, Elasticsearch delivers on the near limitless promises of search technology.

- Kibana[2]: Kibana is Elasticsearch’s data visualization engine, allowing you to natively interact with all your data in Elasticsearch via custom dashboards. Kibana’s dynamic dashboard panels are savable, shareable and exportable, displaying changes to queries into Elasticsearch in real-time. You can perform data analysis in Kibana’s beautiful user interface using pre-designed dashboards or update these dashboards in real-time for on-the-fly data analysis.

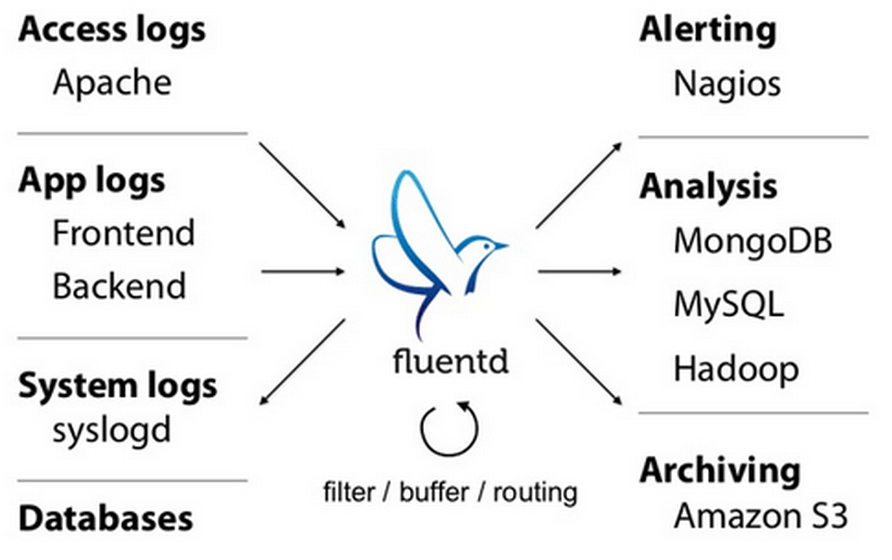

- Fluentd[3]: Fluentd is an open source data collector designed for processing data streams.

To keep things simple at the start, all these components will be installed on the same machine. Fluentd is an alternative to Logstash: both are data collectors, but Fluentd can also send logs to other destinations:

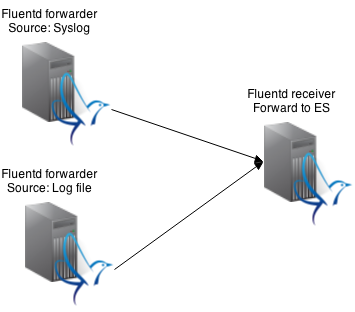

Here is the kind of infrastructure you can set up (no redundancy here, just a single instance):

To avoid dependency issues and keep things simple, we're going to use Fluentd as a forwarder to transfer syslog and other kinds of logs to another Fluentd instance. Elasticsearch and Kibana will be installed on that second instance.

2 Installation

2.1 Elasticsearch

The first thing to set up is the backend that will store our logs. As we want the latest version, we're going to use the dedicated repository:
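Here is a minimal sketch of that repository setup. It assumes the APT repository layout Elasticsearch published for the 1.1 branch at the time (packages.elasticsearch.org); the list file name elasticsearch.list is an arbitrary choice, and both URLs should be checked against the Elasticsearch documentation for your version:

```shell
# Import the Elasticsearch package signing key (needs network access)
wget -qO - http://packages.elasticsearch.org/GPG-KEY-elasticsearch | apt-key add -

# Declare the 1.1 branch repository (assumed URL for that era)
echo "deb http://packages.elasticsearch.org/elasticsearch/1.1/debian stable main" \
    > /etc/apt/sources.list.d/elasticsearch.list
```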

Then we're ready to install:

```shell
aptitude update
aptitude install elasticsearch openjdk-7-jre-headless openntpd
```

Finally, enable the service at boot and start it:

```shell
update-rc.d elasticsearch defaults 95 10
/etc/init.d/elasticsearch start
```

2.2 Kibana

Unfortunately, there is no package repository for Kibana at the moment, so to keep things simple we're going to use its git repository. First of all, install a web server such as Nginx, along with git:

```shell
aptitude install nginx git
```

Now clone the repository and check out the latest version (3.0.1 at the time of writing):

```shell
cd /usr/share/nginx/www
git clone https://github.com/elasticsearch/kibana.git
cd kibana
git checkout v3.0.1
```

You can list all available versions with the git tag command.

You now need to configure Nginx to serve it:

To finish with Kibana, edit its configuration file (config.js) and adapt the elasticsearch line to your needs:

Restart the Nginx service to make the web interface available at http://<kibana_dns_name>.

2.3 Fluentd

Fluentd is the last piece: it will send syslog to another Fluentd instance or to Elasticsearch. The following steps therefore have to be done on all Fluentd forwarders and servers.

First of all, we'll adjust some system parameters to make sure we won't run into performance issues. Start by editing the security limits (/etc/security/limits.conf) and add these lines:

```
root soft nofile 65536
root hard nofile 65536
*    soft nofile 65536
*    hard nofile 65536
```

Then add the following sysctl tuning to /etc/sysctl.conf:

```
net.ipv4.tcp_tw_recycle = 1
net.ipv4.tcp_tw_reuse = 1
net.ipv4.ip_local_port_range = 10240 65535
```

And apply the new configuration:

```shell
sysctl -p
```

Next, we're going to add the official td-agent repository:

However, at the time of writing, there is no Wheezy version available (Squeeze only), and the package has a missing dependency on libssl0.9.8. We're going to get it from Squeeze and install it:

```shell
wget http://ftp.fr.debian.org/debian/pool/main/o/openssl/libssl0.9.8_0.9.8o-4squeeze14_amd64.deb
dpkg -i libssl0.9.8_0.9.8o-4squeeze14_amd64.deb
```

We're now ready to install the Fluentd agent (td-agent):

```shell
aptitude update
aptitude install td-agent openntpd
mkdir /etc/td-agent/config.d
```

Then modify the main configuration file to set the global configuration:

Restart the td-agent service.

2.3.1 Elasticsearch plugin

By default, Fluentd doesn't know how to send data to Elasticsearch, so we need to install a dedicated plugin on the server (not on the forwarders). Here is how to install it:

3 Configuration

This chapter shows how to configure several Fluentd options. Choose the ones you want to add to your Fluentd instances (you can combine several). Here is a good example of what is needed in this kind of setup:

3.1 Forwarders

To make a Fluentd instance forward data to a receiver, simply create this configuration file and set the Fluentd node to forward to:

```
<match **>
  type forward
  <server>
    host fluentd.deimos.fr
    port 24224
  </server>
</match>
```

3.2 Receiver

If you want your node to be able to receive data from other Fluentd forwarders, you need to add this configuration:

```
## built-in TCP input
## @see http://docs.fluentd.org/articles/in_forward
<source>
  type forward
</source>
```

In this setup, add it on the Fluentd server (the receiving side).

3.3 Rsyslog

Debian uses Rsyslog by default; here is how to forward syslog to Fluentd. First of all, on the Fluentd forwarders, create a syslog configuration file containing the following:

```
<source>
  type syslog
  port 5140
  bind 127.0.0.1
  tag syslog
</source>
```

Then restart the td-agent service. It will open a syslog listening port (UDP 5140 on 127.0.0.1).

Then simply add this line to the Rsyslog configuration to send syslog to Fluentd (in addition to the local files):

```
*.* @127.0.0.1:5140
```

Restart the Rsyslog service.

3.4 Log files

You may also want to collect log files. Here is a way to do it for a single Nginx access log file:

Then restart the td-agent service.

3.4.1 Nginx

The problem with the basic example above is that each line is passed as a single field, which means we can't filter accurately. To do so, you need to split each line into fields with a regex, giving each field a name. You also need to specify the date and time format. Here is how to do it for Nginx:

It may be hard to write a working regex the first time. A website called Fluentular (http://fluentular.herokuapp.com) can help you build the format line.
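A regex can also be checked locally against a sample line before it goes into a format line. A rough sketch, assuming GNU grep built with PCRE support (-P), whose syntax agrees with Ruby's regexes (used by Fluentd) for this pattern; the sample line is made up:

```shell
# Made-up access log line in Nginx's combined format
line='192.0.2.10 - - [10/May/2014:14:02:11 +0200] "GET /index.html HTTP/1.1" 200 612 "-" "curl/7.26.0"'

# Combined-format pattern, written without Fluentd's surrounding slashes
regex='^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^"]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^"]*)" "(?<agent>[^"]*)")?$'

if printf '%s\n' "$line" | grep -qP "$regex"; then
    echo "match"
else
    echo "no match"
fi
```

This only tells whether the whole line matches; Fluentular remains handy to see which value lands in which field.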

3.5 Fluentd Elasticsearch

To send all incoming events to Elasticsearch, simply create this configuration file:

```
<match **>
  type elasticsearch
  logstash_format true
  host localhost
  port 9200
</match>
```

Then restart the td-agent service.

4 Usage

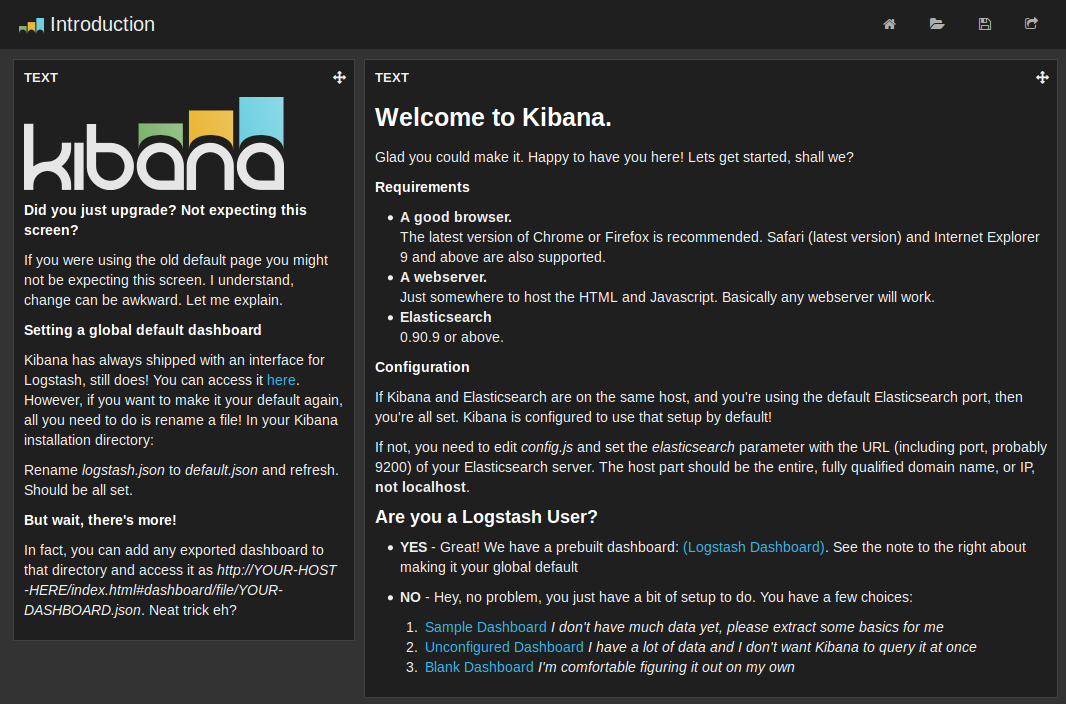

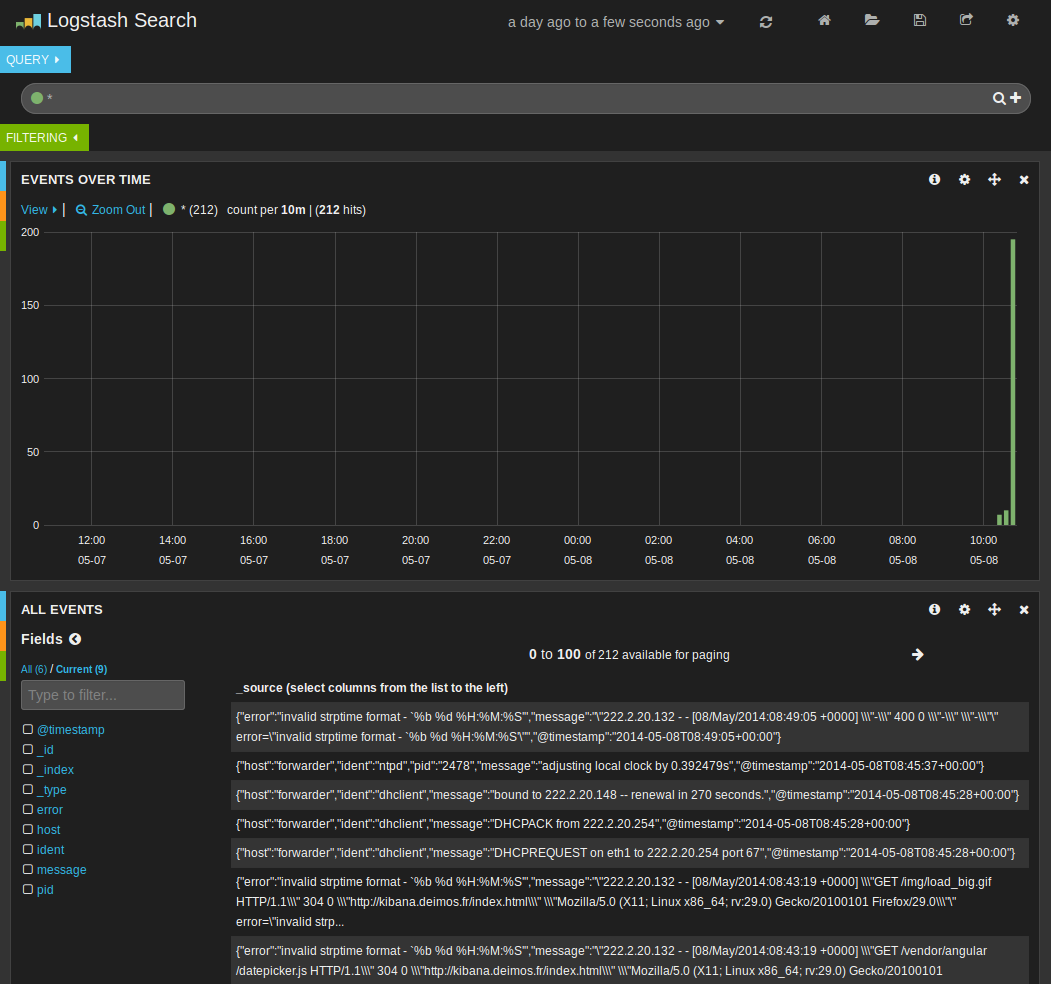

If you look at the web interface, you should have something like this:

You can now try adding other widgets; see the official documentation[4].

5 References

- [1] http://www.elasticsearch.org

- [2] http://www.elasticsearch.org/overview/kibana/

- [3] http://fluentd.org/

- [4] http://www.elasticsearch.org/guide/en/kibana/current/

- http://jasonwilder.com/blog/2013/11/19/fluentd-vs-logstash/

- http://repeatedly.github.io/2014/02/analyze-event-logs-using-fluentd-and-elasticsearch/

- http://www.devconsole.info/?p=917

- http://lifeandshell.com/install-elasticsearch-kibana-fluentd-opensource-splunk-with-syslog-clients/

- https://github.com/fluent/fluentd