Memory Caches

| Software version | Kernel 2.6.32+ |

| Operating System | Red Hat 6.3 Debian 7 |

| Website | Kernel Website |

Page Allocation

Delaying memory allocation when a process requests it is good for performance. Due to reference locality, most programs that request large memory allocations don't allocate all of it at once. For program memory allocation, it will be done gradually to avoid using more than necessary.

It's important to understand that there is also priority management based on who makes the request. For virtual memory allocation, for example, when the kernel makes a request, the memory is allocated immediately, whereas a user request will be handled gradually as needed. There are good reasons for these allocation choices. In fact, many RAM-intensive programs have sections that are rarely used. It's therefore unnecessary to load everything into memory if not everything is used. This helps avoid memory waste. A process whose memory allocation has been delayed during the last minute is referenced as being in demand for pagination.

It's possible to tune this allocation a bit for applications that typically allocate large blocks and then free the same memory. It also works well for applications that allocate a lot at once and then quit. You need to adjust the sysctl settings:

This helps reduce pagination request times; memory is only used for what it really needs, and it can put pressure on ZONE_NORMAL[^1].

Overcommit Management

It's advantageous for certain applications to let the kernel allocate more memory than the system can offer. This can be done with virtual memory. Using the vm.overcommit_memory parameter in sysctl, it's possible to ask the kernel to allow an application to make many small allocations:

To disable this feature:

It's also possible to use value 2. This allows overcommitting by an amount equal to the swap size + 50% of physical memory. The 50% can be changed via the ratio parameter:

To estimate the RAM size needed to avoid an OOM (Out Of Memory) condition for the current system workload:

Generally, overcommit is useful for scientific applications or those created in Fortran.

Slab Cache

The Slab cache contains pre-allocated memory pools that the kernel will use when it needs to provide space for different types of data structures. When these data structures map only very small pages or are so small that several of them fit into a single page, it's more efficient for the kernel to allocate pre-allocated memory from the Slab memory space. To get this information:

For a less detailed view:

There's also a utility that allows you to monitor this Slab cache in real time, you can use the slabtop command:

When a process references a file, the kernel creates and associates a 'dentry object' for each element in its pathname. For example, for /home/pmavro/.zshrc, the kernel will create 4 'dentry objects':

- /

- home

- pmavro

- zshrc

Each dentry object points to the inode associated with its file. To avoid reading from disk each time these same paths are used, the kernel uses the dentry cache where dentry objects are stored. For the same reasons, the kernel also caches information about inodes, which are therefore contained in the slab.

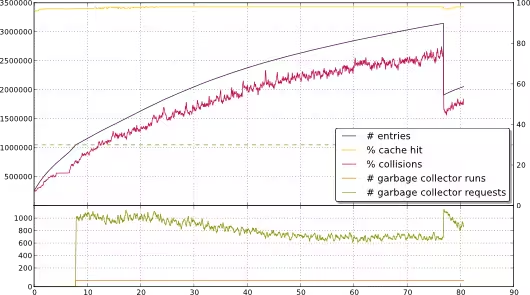

The ARP Cache

Many network performance problems can be due to the ARP cache being too small. By default, it's limited to 512 soft entries and 1024 hard entries at the Ulimits level. The soft limit becomes a hard limit after 5 seconds. When this limit is exceeded, the kernel performs garbage collection and scans the cache to purge entries to stay below this limit. This garbage collector can also lead to a complete cache deletion. Let's say your cache is limited to 1 entry but you're connecting from 2 remote machines. Each incoming and outgoing packet will cause garbage collection and reinsertion into the ARP cache. There will therefore be a permanent change in the cache. To give you an idea of what can happen on a system:

[^2]

[^2]

To see the ARP entries that map hardware addresses to protocol addresses:

Too many ARP entries in the cache put pressure on the ZONE_NORMAL. To list ARP entries, there are 2 solutions:

or

To clear the ARP cache:

You can make some ARP cache adjustments by specifying the soft limit, hard limit, and how often the garbage collector should run (in seconds):

There is also another option that allows you to set the minimum time of jiffies in user space to cached entries. There are 100 jiffies in user space in 1 second:

Page Cache

A very large percentage of pagination activity is due to IO. For reading from disk to memory for example, it forms page cache. Here are the cases of page cache verification for IO requests:

- Reading and writing files

- Reading and writing via block device files

- Access to memory-mapped files

- Access that swaps pages

- Reading directories

To see the page cache allocations, just look at the buffer caches:

It's possible to tune the page cache memory size:

And it's also possible to tune the arrival rate:

Anonymous Pages

In Linux, only certain types of pages are swapped. There's no need to swap text-type programs because they already exist on disk. Also, for memory that has been used to store files with modified content, the kernel will take the lead and write the data to the file it belongs to rather than to swap. Only pages that have no association with a file are written to swap.

The swap cache is used to keep track of pages that have previously been taken out of swap and haven't been re-swapped since. If the kernel swaps threads that need to swap a page later, if it finds an entry for this page in the swap cache, it's possible to swap without having to write to disk.

The statm file for each PID allows you to see anonymous pages (here PID 1):

- 2659: total program size

- 209: resident set size (RSS)

- 174: shared pages (from shared mappings)

- 9: text (code)

- 81: data + stack

This therefore contains the RSS and shared memory used by a process. But actually the RSS provided by the kernel consists of anonymous and shared pages, hence:

Anonymous Pages = RSS - Shared

SysV IPC

Another thing that consumes memory is the memory for IPC communications.

Semaphores allow 2 or more processes to coordinate access to shared resources.

Message Queues allow processes to coordinate for message exchanges. Shared memory regions allow processes to communicate by reading and writing to the same memory regions.

A process may wish to use one of these mechanisms but must make appropriate system calls to access the desired resources.

It's possible to put limits on these IPCs on SYSV systems. To see the current list:

Using /dev/shm can be a solution to significantly reduce the service time of certain applications. However, be careful when using this system as temporary storage space because it's in memory. There's also an 'ipcrm' command to force the deletion of shared memory segments. But generally, you'll never need to use this command.

It's possible to tune these values (present in /proc/sys/kernel) via sysctl:

- 250: maximum number of semaphores per semaphore array

- 32000: maximum number of semaphores allocated on the system side

- 32: maximum number of operations allocated per semaphore system call

- 128: number of semaphore arrays

If you want to modify them:

There are other interesting parameters (with their default values):

For more information, see the man page for proc(5).

Getting Memory Information

There are several solutions for retrieving memory sizes. The most well-known is the free command:

You can also get information from dmesg. As we've seen above, it's possible to get the total size of virtual space from meminfo:

To see the largest free chunk size:

For page tables:

For IO allocations, there's iomem:

References

[^1]: Memory Addressing and Allocation [^2]: IP4 route cache