Puppet: Configuration File Management Solution

Introduction

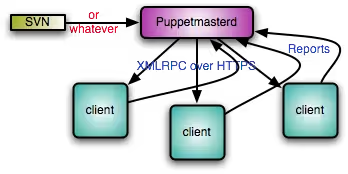

Puppet is a very practical application, typically found in companies with large numbers of servers, where the information system is "industrialized". Puppet automates many administration tasks, such as software installation, service deployment, or file modifications. It does this centrally, which makes it easier to manage and control a large number of servers, whether heterogeneous or homogeneous. Puppet works in client/server mode.

A client is installed on each machine and contacts the PuppetMaster (the server) over HTTPS, and therefore SSL, using Puppet's own PKI. Puppet is written in Ruby, which makes it multi-platform: BSD (FreeBSD, macOS…), Linux (Red Hat, Debian, SUSE…), Sun (OpenSolaris…).

Reductive Labs, the company publishing Puppet, has developed a complementary product called Facter. This application lists specific elements of the managed systems, such as hostname, IP address, distribution, and environment variables, which can then be used as variables in Puppet templates. Since Puppet manages templates, Facter's usefulness is easy to see: if you manage a farm of mail servers whose configuration must contain the machine name, a template filled in with these variables proves quite useful. In short, Puppet combined with Facter is a very interesting way to simplify system administration.
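To make the mail-server example concrete, here is a minimal Ruby sketch of what Facter plus a template achieve: one ERB template rendered with per-machine values. The facts are hard-coded for illustration (on a real node Facter supplies them), and the Postfix-style `myhostname` line is only an assumed example.

```ruby
require 'erb'

# One template shared by every mail server. The "myhostname = ..." line is a
# Postfix-style example chosen for illustration.
MAIL_TEMPLATE = "myhostname = <%= facts['fqdn'] %>\n"

# Render the shared template with one machine's facts.
def render_for(facts)
  ERB.new(MAIL_TEMPLATE).result(binding)
end

# Hypothetical facts; on a real node Facter would supply them.
[{ 'fqdn' => 'mx1.deimos.fr' }, { 'fqdn' => 'mx2.deimos.fr' }].each do |facts|
  print render_for(facts)
end
# myhostname = mx1.deimos.fr
# myhostname = mx2.deimos.fr
```

The template never changes; only the facts do, which is exactly why the pair scales to a whole farm.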

Here is a diagram showing how Puppet works:

If you want an IDE for writing Puppet configuration, there is Geppetto. I recommend it, as it will save you a lot of syntax trouble.

Documentation for previous versions is available here:

Puppet Hierarchy

Before going further, here is how the Puppet directory structure works, as described on the official site:

All Puppet data files (modules, manifests, distributable files, etc) should be maintained in a Subversion or CVS repository (or your favorite Version Control System). The following hierarchy describes the layout one should use to arrange the files in a maintainable fashion:

/manifests/: this directory contains files and subdirectories that determine the manifest of individual systems but do not logically belong to any particular module. Generally, this directory is fairly thin, and alternatives such as LDAP or other external node tools can make it even thinner. This directory contains the following special files:

- site.pp: the first file that the Puppet Master parses when determining a server's catalog. It imports all the underlying subdirectories and the other special files in this directory. It also defines any global defaults, such as package managers. See sample site.pp.

- templates.pp: defines all template classes. See also terminology:template classes. See sample templates.pp.

- nodes.pp: defines all the nodes if not using an external node tool. See sample nodes.pp.

/modules/{modulename}/: houses Puppet modules in subdirectories named after the module. This area defines the general building blocks of a server and contains modules such as openssh, which will generally define the classes openssh::client and openssh::server to set up the client and server respectively. The individual module directories contain subdirectories for manifests, distributable files, and templates. See modules organization, terminology:module.

/modules/user/: a special module that contains manifests for users. This module contains a special subclass called user::virtual, which declares all the users that might be on a given system in a virtual way. The other subclasses in the user module are classes for logical groupings, such as user::unixadmins, which will realize the individual users to be included in that group. See also naming conventions, terminology:realize.

/services/: this is an additional modules area that is specified in the module path for the puppetmaster. However, instead of generic modules for individual services and bits of a server, this module area is used to model servers specific to enterprise-level infrastructure services (core infrastructure services that your IT department provides, such as www, enterprise directory, file server, etc). Generally, these classes will include the modules from /modules/ needed as part of the catalog (such as openssh::server, postfix, user::unixadmins, etc). The files section for these modules is used to distribute configuration files specific to the enterprise infrastructure service, such as OpenLDAP schema files if the module were for the enterprise directory. To avoid namespace collisions with the general modules, it is recommended that these modules/classes are prefixed with s_ (e.g. s_ldap for the enterprise directory server module).

/clients/: similar to the /services/ module area, this area is used for modules related to modeling servers for external clients (departments outside your IT department). To avoid namespace collisions, it is recommended that these modules/classes are prefixed with c_.

/notes/: this directory contains notes for reference by local administrators.

/plugins/: contains custom types programmed in Ruby. See also terminology:plugin-type.

/tools/: contains scripts useful to the maintenance of Puppet.

Installation

Puppet Server

The master must run the same version as the client machines. A version greater than or equal to 0.25.4 is highly recommended (it fixes numerous performance issues). On Debian, this means installing the version from squeeze or lenny-backports (or later) and pinning it with APT pinning so that an accidental upgrade cannot change it. Here we will use the version provided on the official Puppet site.
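Version checks like these are easy to get wrong with plain string comparison. The following Ruby sketch uses `Gem::Version` from RubyGems to encode the two rules above (same version on master and client, at least 0.25.4); `versions_ok?` is a hypothetical helper and the version strings are examples, not values read from a real installation.

```ruby
require 'rubygems'

# Minimum version recommended in the text above.
MINIMUM_VERSION = Gem::Version.new('0.25.4')

# True when master and client run the same version and it is at least 0.25.4.
def versions_ok?(master, client)
  m = Gem::Version.new(master)
  c = Gem::Version.new(client)
  m == c && m >= MINIMUM_VERSION
end

puts versions_ok?('2.7.18', '2.7.18') # true
puts versions_ok?('0.25.4', '0.24.8') # false: the versions differ
puts versions_ok?('0.24.8', '0.24.8') # false: older than 0.25.4
```

`Gem::Version` compares segments numerically, whereas a plain string comparison would rank "2.7.9" above "2.7.18".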

First, add the server IP to the /etc/hosts file:

...

192.168.0.93 puppet-prd.deimos.fr puppet

...

Note: check that the puppetmaster's clock (and the client clocks as well) is correct and synchronized. Certificates may not be recognized or accepted if there is a time discrepancy. Use dpkg-reconfigure tzdata to check the time zone, and an NTP client (for example ntpdate) to synchronize the clock.
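Why clock skew breaks certificates can be seen with Ruby's OpenSSL bindings: a certificate is only valid between its not_before and not_after dates, so a client whose clock lags behind the moment the certificate was issued sees it as "not yet valid". This is a sketch: the certificate is built unsigned purely to carry the dates, the five-year lifetime and one-hour skew are arbitrary examples, and `valid_at?` is a hypothetical helper that mirrors only the date part of OpenSSL's checks.

```ruby
require 'openssl'

# Unsigned certificate used only for its validity window (illustration only;
# real Puppet certificates are signed by the Puppet CA).
def sample_cert(issued_at)
  cert = OpenSSL::X509::Certificate.new
  cert.not_before = issued_at
  cert.not_after = issued_at + 5 * 365 * 24 * 3600 # roughly five years
  cert
end

# Date check only: the clock must fall inside [not_before, not_after].
def valid_at?(cert, clock)
  clock >= cert.not_before && clock <= cert.not_after
end

issued = Time.now
cert = sample_cert(issued)
puts valid_at?(cert, issued + 60)   # true: clocks roughly in sync
puts valid_at?(cert, issued - 3600) # false: client clock one hour behind
```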

Configure the official Puppet repository if you want the latest version, otherwise skip this step to install the version provided by your distribution:

wget http://apt.puppetlabs.com/puppetlabs-release-stable.deb

dpkg -i puppetlabs-release-stable.deb

And then update:

aptitude update

Then install puppetmaster:

aptitude install puppetmaster

You can verify that puppetmaster is installed correctly by running facter (it should return a list of facts) or by checking that SSL files have been created in /var/lib/puppet.

Web Server

Now we need to configure a web server on the same machine as the Puppet server (Puppet Master). Why? Because the default server, WEBrick, collapses as soon as around 10 nodes access it simultaneously.

You can choose between Passenger (with Apache) and Nginx (with Mongrel). Passenger has been the recommended solution since Puppet 3.

Passenger

If you've chosen Passenger, as recommended by Puppet Labs, you need to disable the automatic startup of the puppetmaster daemon in /etc/default/puppetmaster, as it would start its own web server and conflict with Passenger:

# Defaults for puppetmaster - sourced by /etc/init.d/puppetmaster

# Start puppetmaster on boot? If you are using passenger, you should

# have this set to "no"

START=no

# Startup options

DAEMON_OPTS=""

# What port should the puppetmaster listen on (default: 8140).

PORT=8140

Now stop the service:

/etc/init.d/puppetmaster stop

Then, install Passenger:

aptitude install puppetmaster-passenger

You don’t need to do any configuration. Everything is provided by the official Puppet packages. In case you installed it without the official repository, here is the generated configuration:

# you probably want to tune these settings

PassengerHighPerformance on

PassengerMaxPoolSize 12

PassengerPoolIdleTime 1500

# PassengerMaxRequests 1000

PassengerStatThrottleRate 120

RackAutoDetect Off

RailsAutoDetect Off

Listen 8140

<VirtualHost *:8140>

SSLEngine on

SSLProtocol -ALL +SSLv3 +TLSv1

SSLCipherSuite ALL:!ADH:RC4+RSA:+HIGH:+MEDIUM:-LOW:-SSLv2:-EXP

SSLCertificateFile /var/lib/puppet/ssl/certs/puppet.deimos.lan.pem

SSLCertificateKeyFile /var/lib/puppet/ssl/private_keys/puppet.deimos.lan.pem

SSLCertificateChainFile /var/lib/puppet/ssl/certs/ca.pem

SSLCACertificateFile /var/lib/puppet/ssl/certs/ca.pem

# If Apache complains about invalid signatures on the CRL, you can try disabling

# CRL checking by commenting the next line, but this is not recommended.

SSLCARevocationFile /var/lib/puppet/ssl/ca/ca_crl.pem

SSLVerifyClient optional

SSLVerifyDepth 1

# The `ExportCertData` option is needed for agent certificate expiration warnings

SSLOptions +StdEnvVars +ExportCertData

# This header needs to be set if using a loadbalancer or proxy

RequestHeader unset X-Forwarded-For

RequestHeader set X-SSL-Subject %{SSL_CLIENT_S_DN}e

RequestHeader set X-Client-DN %{SSL_CLIENT_S_DN}e

RequestHeader set X-Client-Verify %{SSL_CLIENT_VERIFY}e

DocumentRoot /usr/share/puppet/rack/puppetmasterd/public/

RackBaseURI /

<Directory /usr/share/puppet/rack/puppetmasterd/>

Options None

AllowOverride None

Order allow,deny

allow from all

</Directory>

</VirtualHost>

NGINX and Mongrel

Installation

If you’ve chosen NGINX and Mongrel, you’ll need to start by installing them:

aptitude install nginx mongrel

Configuration

Modify the /etc/default/puppetmaster file:

# Defaults for puppetmaster - sourced by /etc/init.d/puppet

# Start puppet on boot?

START=yes

# Startup options

DAEMON_OPTS=""

# What server type to run

# Options:

# webrick (default, cannot handle more than ~30 nodes)

# mongrel (scales better than webrick because you can run

# multiple processes if you are getting

# connection-reset or End-of-file errors, switch to

# mongrel. Requires front-end web-proxy such as

# apache, nginx, or pound)

# See: http://reductivelabs.com/trac/puppet/wiki/UsingMongrel

SERVERTYPE=mongrel

# How many puppetmaster instances to start? It's pointless to set this

# higher than 1 if you are not using mongrel.

PUPPETMASTERS=4

# What port should the puppetmaster listen on (default: 8140). If

# PUPPETMASTERS is set to a number greater than 1, then the port for

# the first puppetmaster will be set to the port listed below, and

# further instances will be incremented by one

#

# NOTE: if you are using mongrel, then you will need to have a

# front-end web-proxy (such as apache, nginx, pound) that takes

# incoming requests on the port your clients are connecting to

# (default is: 8140), and then passes them off to the mongrel

# processes. In this case it is recommended to run your web-proxy on

# port 8140 and change the below number to something else, such as

# 18140.

PORT=18140
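The port arithmetic described in those comments (first instance on PORT, each further instance one port higher) is what the Nginx upstream block has to mirror. A small Ruby sketch can derive both from the two settings; `mongrel_ports` and `nginx_upstream` are hypothetical helpers, and the values 18140 and 4 are taken from the file above.

```ruby
# First instance listens on base_port, each further instance on the next port.
def mongrel_ports(base_port, instances)
  (0...instances).map { |i| base_port + i }
end

# Build an nginx upstream block pointing at every local Mongrel instance.
def nginx_upstream(name, ports)
  lines = ports.map { |p| "  server 127.0.0.1:#{p};" }
  ["upstream #{name} {", *lines, '}'].join("\n")
end

ports = mongrel_ports(18_140, 4)
puts nginx_upstream('puppet-prd.deimos.fr', ports)
# upstream puppet-prd.deimos.fr {
#   server 127.0.0.1:18140;
#   server 127.0.0.1:18141;
#   server 127.0.0.1:18142;
#   server 127.0.0.1:18143;
# }
```

Generating both from the same two numbers avoids the classic mistake of raising PUPPETMASTERS without adding the matching upstream servers.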

After (re-)starting the daemon, you should see the listening sockets:

> netstat -vlptn

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:41736 0.0.0.0:* LISTEN 2029/rpc.statd

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2018/portmap

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 2333/sshd

tcp 0 0 127.0.0.1:18140 0.0.0.0:* LISTEN 10059/ruby

tcp 0 0 127.0.0.1:18141 0.0.0.0:* LISTEN 10082/ruby

tcp 0 0 127.0.0.1:18142 0.0.0.0:* LISTEN 10104/ruby

tcp 0 0 127.0.0.1:18143 0.0.0.0:* LISTEN 10126/ruby

tcp6 0 0 :::22 :::* LISTEN 2333/sshd

Add the following lines to the /etc/puppet/puppet.conf file:

[main]

logdir=/var/log/puppet

vardir=/var/lib/puppet

ssldir=/var/lib/puppet/ssl

rundir=/var/run/puppet

factpath=$vardir/lib/facter

templatedir=$confdir/templates

pluginsync = true

[master]

# These are needed when the puppetmaster is run by passenger

# and can safely be removed if webrick is used.

ssl_client_header = HTTP_X_SSL_SUBJECT

ssl_client_verify_header = SSL_CLIENT_VERIFY

report = true

[agent]

server=puppet-srv.deimos.fr

Modify the following configuration in /etc/nginx/nginx.conf:

user www-data;

worker_processes 4;

error_log /var/log/nginx/error.log;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

# multi_accept on;

}

http {

#include /etc/nginx/mime.types;

default_type application/octet-stream;

access_log /var/log/nginx/access.log;

sendfile on;

tcp_nopush on;

# Look at TLB size in /proc/cpuinfo (Linux) for the 4k pagesize

large_client_header_buffers 16 4k;

proxy_buffers 128 4k;

#keepalive_timeout 0;

keepalive_timeout 65;

tcp_nodelay on;

gzip on;

gzip_disable "MSIE [1-6]\.(?!.*SV1)";

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/sites-enabled/*;

}

Then add this configuration for Puppet in /etc/nginx/sites-available/puppetmaster:

upstream puppet-prd.deimos.fr {

server 127.0.0.1:18140;

server 127.0.0.1:18141;

server 127.0.0.1:18142;

server 127.0.0.1:18143;

}

server {

listen 8140;

ssl on;

ssl_certificate /var/lib/puppet/ssl/certs/puppet-prd.pem;

ssl_certificate_key /var/lib/puppet/ssl/private_keys/puppet-prd.pem;

ssl_client_certificate /var/lib/puppet/ssl/ca/ca_crt.pem;

ssl_ciphers SSLv2:-LOW:-EXPORT:RC4+RSA;

ssl_session_cache shared:SSL:8m;

ssl_session_timeout 5m;

ssl_verify_client optional;

# obey to the Puppet CRL

ssl_crl /var/lib/puppet/ssl/ca/ca_crl.pem;

root /var/empty;

access_log /var/log/nginx/access-8140.log;

#rewrite_log /var/log/nginx/rewrite-8140.log;

# Variables

# $ssl_cipher returns the name of the cipher used for the established SSL connection

# $ssl_client_serial returns the serial number of the client certificate for the established SSL connection

# $ssl_client_s_dn returns the subject DN of the client certificate for the established SSL connection

# $ssl_client_i_dn returns the issuer DN of the client certificate for the established SSL connection

# $ssl_protocol returns the protocol of the established SSL connection

location / {

proxy_pass http://puppet-prd.deimos.fr;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Client-DN $ssl_client_s_dn;

proxy_set_header X-Client-Verify $ssl_client_verify;

proxy_set_header X-SSL-Subject $ssl_client_s_dn;

proxy_set_header X-SSL-Issuer $ssl_client_i_dn;

proxy_read_timeout 65;

}

}

Then enable the site by creating the symbolic link in sites-enabled:

cd /etc/nginx/sites-enabled

ln -s /etc/nginx/sites-available/puppetmaster .

And then restart the Nginx server.

To verify that the daemons are running correctly, you should have the following sockets open:

> netstat -vlptn

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:41736 0.0.0.0:* LISTEN 2029/rpc.statd

tcp 0 0 0.0.0.0:8140 0.0.0.0:* LISTEN 10293/nginx

tcp 0 0 0.0.0.0:8141 0.0.0.0:* LISTEN 10293/nginx

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 2018/portmap

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 2333/sshd

tcp 0 0 127.0.0.1:18140 0.0.0.0:* LISTEN 10059/ruby

tcp 0 0 127.0.0.1:18141 0.0.0.0:* LISTEN 10082/ruby

tcp 0 0 127.0.0.1:18142 0.0.0.0:* LISTEN 10104/ruby

tcp 0 0 127.0.0.1:18143 0.0.0.0:* LISTEN 10126/ruby

tcp6 0 0 :::22 :::* LISTEN 2333/sshd
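If you check this on several masters, a short Ruby sketch can turn a saved `netstat -vlptn` listing into a port-to-program map and assert what should be there. The sample text is abridged from the listing above, and `listeners` is a hypothetical helper that assumes the column order shown (proto, queues, local address, foreign address, state, PID/program).

```ruby
# Abridged sample of the listing above.
SAMPLE = <<~NETSTAT
  Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
  tcp 0 0 0.0.0.0:8140 0.0.0.0:* LISTEN 10293/nginx
  tcp 0 0 127.0.0.1:18140 0.0.0.0:* LISTEN 10059/ruby
  tcp 0 0 127.0.0.1:18141 0.0.0.0:* LISTEN 10082/ruby
NETSTAT

# Map each listening TCP port to the name of the program that owns it.
def listeners(text)
  text.each_line.with_object({}) do |line, acc|
    fields = line.split
    next unless fields[0]&.start_with?('tcp') && fields[5] == 'LISTEN'
    port = fields[3].split(':').last.to_i
    acc[port] = fields[6].split('/', 2).last
  end
end

found = listeners(SAMPLE)
puts found[8140]  # nginx
puts found[18140] # ruby
```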

Puppet Clients

For clients, it’s also simple. But first add the server line to the hosts file:

...

192.168.0.93 puppet-prd.deimos.fr puppet

This is not mandatory if your DNS names are correctly configured.

Debian

If you want the latest version, add the official repository:

wget http://apt.puppetlabs.com/puppetlabs-release-stable.deb

dpkg -i puppetlabs-release-stable.deb

And then update:

aptitude update

Verify that the /etc/hosts file contains the hostname of the client machine, then install puppet:

aptitude install puppet

Red Hat

As on Debian, there is a yum repository for Red Hat, and we'll install a package that configures it for us:

rpm -ivh http://yum.puppetlabs.com/el/6/products/x86_64/puppetlabs-release-6-6.noarch.rpm

Then install:

yum install puppet

Solaris

The stable Puppet client in Blastwave is too old (0.23), so Puppet (and Facter) must be installed through gem, Ruby's standard package manager. To do this, first install Ruby with the following command:

pkg-get -i ruby

Then remove the packaged rubygems:

pkg-get -r rubygems

and install a more up-to-date version from the sources:

wget http://rubyforge.org/frs/download.php/45905/rubygems-1.3.1.tgz

gzcat rubygems-1.3.1.tgz | tar -xf -

cd rubygems-1.3.1

ruby setup.rb

gem --version

Install Puppet with gem (add the -p argument if you are behind a proxy):

gem install puppet --version '0.25.4' -p http://proxy:3128/

You need to modify/add some commands that are not available by default on Solaris for Puppet to work better:

- Create a link for uname and puppetd:

ln -s /usr/bin/uname /usr/bin/

ln -s /opt/csw/bin/puppetd /usr/bin/

- Create a script called /usr/bin/dnsdomainname:

#!/usr/bin/bash

DOMAIN="`/usr/bin/domainname 2> /dev/null`"

if [ ! -z "$DOMAIN" ]; then

echo $DOMAIN | sed 's/^[^.]*.//'

fi

chmod 755 /usr/bin/dnsdomainname

Then, the procedure is the same as for other OSes, that is, modify /etc/hosts to include puppet-prd.deimos.fr, and run:

puppetd --verbose --no-daemon --test --server puppet-prd.deimos.fr

If it does not work at this point, you most likely need to adjust the configuration (puppet.conf) of your client.

Configuration

Server

For the server part, here’s how the directory structure is organized (in /etc/puppet):

.

|-- auth.conf

|-- autosign.conf

|-- fileserver.conf

|-- manifests

| |-- common.pp

| |-- modules.pp

| `-- site.pp

|-- modules

|-- puppet.conf

`-- templates

auth.conf

This is where we set all the permissions:

# This is an example auth.conf file, it mimics the puppetmasterd defaults

#

# The ACL are checked in order of appearance in this file.

#

# Supported syntax:

# This file supports two different syntax depending on how

# you want to express the ACL.

#

# Path syntax (the one used below):

# ---------------------------------

# path /path/to/resource

# [environment envlist]

# [method methodlist]

# [auth[enthicated] {yes|no|on|off|any}]

# allow [host|ip|*]

# deny [host|ip]

#

# The path is matched as a prefix. That is, /file matches

# both /file_metadata and /file_content.

#

# Regex syntax:

# -------------

# This one is differentiated from the path one by a '~'

#

# path ~ regex

# [environment envlist]

# [method methodlist]

# [auth[enthicated] {yes|no|on|off|any}]

# allow [host|ip|*]

# deny [host|ip]

#

# The regex syntax is the same as Ruby's.

#

# Ex:

# path ~ .pp$

# will match every resource ending in .pp (manifests files for instance)

#

# path ~ ^/path/to/resource

# is essentially equivalent to path /path/to/resource

#

# environment:: restrict an ACL to a specific set of environments

# method:: restrict an ACL to a specific set of methods

# auth:: restrict an ACL to an authenticated or unauthenticated request

# the default when unspecified is to restrict the ACL to authenticated requests

# (ie exactly as if auth yes was present).

#

### Authenticated ACL - these apply only when the client

### has a valid certificate and is thus authenticated

# allow nodes to retrieve their own catalog (ie their configuration)

path ~ ^/catalog/([^/]+)$

method find

allow $1

# allow nodes to retrieve their own node definition

path ~ ^/node/([^/]+)$

method find

allow $1

# allow all nodes to access the certificates services

path /certificate_revocation_list/ca

method find

allow *

# allow all nodes to store their reports

path /report

method save

allow *

# unconditionally allow access to all file services

# which means in practice that fileserver.conf will

# still be used

path /file

allow *

### Unauthenticated ACL, for clients for which the current master doesn't

### have a valid certificate; we allow authenticated users, too, because

### there isn't a great harm in letting that request through.

# allow access to the master CA

path /certificate/ca

method find

allow *

path /certificate/

method find

allow *

path /certificate_request

method find, save

allow *

# this one is not strictly necessary, but it has the merit

# to show the default policy which is deny everything else

path /

auth any

allow *.deimos.fr

If you run into access problems, you can add this line at the end of your configuration file for simple (but insecure) testing:

allow *
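The matching rules spelled out in the comments of this file (rules checked in order, plain paths matched as prefixes, `path ~` regexes whose captures can be reused as `$1`, default deny) can be modelled in a few lines of Ruby. This is a hypothetical model, not Puppet's code: `Rule` and `allowed?` are illustrative names, and the `auth` and `deny` directives and host globs such as `*.deimos.fr` are deliberately left out.

```ruby
# One auth.conf entry: path (or regex source), whether it is a regex,
# the allowed methods (nil = any), and the allow value.
Rule = Struct.new(:path, :regex, :verbs, :allow)

RULES = [
  Rule.new('^/catalog/([^/]+)$', true, ['find'], '$1'),
  Rule.new('/certificate_revocation_list/ca', false, ['find'], '*'),
  Rule.new('/report', false, ['save'], '*'),
  Rule.new('/file', false, nil, '*')
].freeze

def allowed?(rules, request_path, method, client)
  rules.each do |rule|
    # A rule restricted to some methods ignores requests using other methods.
    next if rule.verbs && !rule.verbs.include?(method)

    if rule.regex
      match = Regexp.new(rule.path).match(request_path)
      next unless match
      # Replace $1, $2... in the allow value with the regex captures.
      allow = rule.allow.gsub(/\$(\d)/) { match[Regexp.last_match(1).to_i] }
    else
      next unless request_path.start_with?(rule.path)
      allow = rule.allow
    end

    # The first matching rule decides.
    return allow == '*' || allow == client
  end
  false # nothing matched: deny by default
end

puts allowed?(RULES, '/catalog/web1.deimos.fr', 'find', 'web1.deimos.fr')   # true
puts allowed?(RULES, '/catalog/web1.deimos.fr', 'find', 'web2.deimos.fr')   # false
puts allowed?(RULES, '/file_metadata/files/motd', 'find', 'web2.deimos.fr') # true
```

The second call shows why `allow $1` matters: a node can fetch its own catalog but not another node's. The third shows the prefix behaviour described in the comments, where `/file` also covers `/file_metadata`.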

autosign.conf

You can automatically sign certain certificates to save time. This can be a bit dangerous, but if your node filtering is done correctly elsewhere, there is nothing to worry about :-)

*.deimos.fr

Here, all my nodes in the deimos.fr domain are signed automatically.
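To get a feel for which certificate names such a line covers, `File.fnmatch` from Ruby's core library gives a reasonable approximation. This is only a sketch: Puppet's own matching of `*.domain` entries may differ in edge cases (for instance how many name labels `*` may cover), and `autosign?` is a hypothetical helper.

```ruby
# Entries as they would appear in autosign.conf.
AUTOSIGN = ['*.deimos.fr'].freeze

# Approximation: a certname is auto-signed if it matches any entry.
def autosign?(certname, patterns = AUTOSIGN)
  patterns.any? { |p| File.fnmatch(p, certname) }
end

puts autosign?('web1.deimos.fr')   # true
puts autosign?('web1.example.com') # false
puts autosign?('evildeimos.fr')    # false: the ".deimos.fr" suffix is required
```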

fileserver.conf

Grant access to the client machines in the /etc/puppet/fileserver.conf file:

# This file consists of arbitrarily named sections/modules

# defining where files are served from and to whom

# Define a section 'files'

# Adapt the allow/deny settings to your needs. Order

# for allow/deny does not matter, allow always takes precedence

# over deny

[files]

path /etc/puppet/files

allow *.deimos.fr

# allow *.example.com

# deny *.evil.example.com

# allow 192.168.0.0/24

[plugins]

allow *.deimos.fr

# allow *.example.com

# deny *.evil.example.com

# allow 192.168.0.0/24
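A `puppet:///files/...` source URL is resolved against the `[files]` section above: the first path segment names the section, and the rest is appended to that section's `path`. The Ruby sketch below illustrates that mapping under this assumption; `MOUNTS` and `resolve` are hypothetical names, and the special `[plugins]` mount (which has no `path`) is not modelled.

```ruby
# Section name => served directory, as declared in fileserver.conf.
MOUNTS = { 'files' => '/etc/puppet/files' }.freeze

# puppet://[server]/<mount>/<relative path> -> local path on the master.
def resolve(url, mounts = MOUNTS)
  m = %r{\Apuppet://[^/]*/([^/]+)/(.+)\z}.match(url)
  raise ArgumentError, "not a puppet:// file URL: #{url}" unless m

  root = mounts.fetch(m[1]) { raise KeyError, "unknown mount: #{m[1]}" }
  File.join(root, m[2])
end

puts resolve('puppet:///files/etc/motd')                   # /etc/puppet/files/etc/motd
puts resolve('puppet://puppet-prd.deimos.fr/files/etc/motd') # same file, explicit server
```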

manifests

Let’s create the missing files:

touch /etc/puppet/manifests/{common.pp,modules.pp,site.pp}

common.pp

common.pp is empty for now, but you can put anything that should act as global configuration in it.

modules.pp

This is where I define my base module(s). For example, my configuration will later declare a "base" module containing everything that every Puppet-managed machine inherits:

import "base"

site.pp

site.pp loads the other manifests (common.pp and modules.pp) and sets global defaults:

# /etc/puppet/manifests/site.pp

import "common.pp"

# The filebucket option allows for file backups to the server

filebucket { main: server => 'puppet-prod-nux.deimos.fr' }

# Backing up all files and ignore vcs files/folders

File {

backup => '.puppet-bak',

ignore => ['.svn', '.git', 'CVS' ]

}

# Default global path

Exec { path => "/usr/bin:/usr/sbin/:/bin:/sbin" }

# Import base module

import "modules.pp"

Here I declare a filebucket on the Puppet server and tell Puppet to keep a copy of every file it replaces, suffixed with .puppet-bak, while ignoring version-control files and folders. I also set a default search path for Exec resources.

This configuration lives on the Puppet server and is global: everything declared in it is inherited. Restart the puppetmaster to make sure the server-side changes are taken into account.
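The effect of `backup => '.puppet-bak'` can be pictured with this Ruby sketch: before overwriting a managed file, a copy is kept next to it with the suffix appended. It only models the idea on a temporary directory; `replace_with_backup` is a hypothetical helper, not Puppet's implementation, and it ignores the remote filebucket entirely.

```ruby
require 'fileutils'
require 'tmpdir'

# Copy the current file to "<file><suffix>", then write the new content.
def replace_with_backup(path, content, suffix = '.puppet-bak')
  FileUtils.cp(path, path + suffix) if File.exist?(path)
  File.write(path, content)
end

Dir.mktmpdir do |dir|
  motd = File.join(dir, 'motd')
  File.write(motd, "old\n")
  replace_with_backup(motd, "new\n")
  print File.read(motd)                 # new
  print File.read(motd + '.puppet-bak') # old
end
```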

puppet.conf

I intentionally skipped the modules folder as it’s the big piece of puppet and will be given special attention later in this article.

Let's move on to the puppet.conf configuration file, which you probably already configured during the Mongrel/Nginx installation:

[main]

logdir=/var/log/puppet

vardir=/var/lib/puppet

ssldir=/var/lib/puppet/ssl

rundir=/var/run/puppet

factpath=$vardir/lib/facter

templatedir=$confdir/templates

pluginsync = true

[master]

# These are needed when the puppetmaster is run by passenger

# and can safely be removed if webrick is used.

ssl_client_header = HTTP_X_SSL_SUBJECT

ssl_client_verify_header = SSL_CLIENT_VERIFY

report = true

[agent]

server=puppet-srv.deimos.fr

The templates folder is empty for now.

Client

Each client must have its entry in the DNS server (just like the server)!

puppet.conf

Debian / Red Hat

The configuration file must contain the server address:

[main]

# The Puppet log directory.

# The default value is '$vardir/log'.

logdir = /var/log/puppet

# Where Puppet PID files are kept.

# The default value is '$vardir/run'.

rundir = /var/run/puppet

# Where SSL certificates are kept.

# The default value is '$confdir/ssl'.

ssldir = $vardir/ssl

# Puppet master server

server = puppet-prd.deimos.fr

# Add custom facts

pluginsync = true

pluginsource = puppet://$server/plugins

factpath = /var/lib/puppet/lib/facter

[agent]

# The file in which puppetd stores a list of the classes

# associated with the retrieved configuration. Can be loaded in

# the separate ``puppet`` executable using the ``--loadclasses``

# option.

# The default value is '$confdir/classes.txt'.

classfile = $vardir/classes.txt

# Where puppetd caches the local configuration. An

# extension indicating the cache format is added automatically.

# The default value is '$confdir/localconfig'.

localconfig = $vardir/localconfig

# Reporting

report = true

Solaris

For Solaris, the configuration needed quite a bit of adaptation:

[main]

logdir=/var/log/puppet

vardir=/var/opt/csw/puppet

rundir=/var/run/puppet

# ssldir=/var/lib/puppet/ssl

ssldir=/etc/puppet/ssl

# Where 3rd party plugins and modules are installed

libdir = $vardir/lib

templatedir=$vardir/templates

# Turn plug-in synchronization on.

pluginsync = true

pluginsource = puppet://$server/plugins

factpath = /var/puppet/lib/facter

[puppetd]

report=true

server=puppet-prd.deimos.fr

# certname=puppet-prd.deimos.fr

# enable the marshal config format

config_format=marshal

# different run-interval, default= 30min

# e.g. run puppetd every 4 hours = 14400

runinterval = 14400

logdest=/var/log/puppet/puppet.log

The Language

Before creating modules, we need to know a bit more about the syntax of the Puppet language. Its syntax is close to Ruby, and it is even possible to write complete modules in Ruby. I'll explain some techniques here that will let you create advanced modules later.

We'll also use types. I won't go into detail on them, because the documentation on the site is clear enough: http://docs.puppetlabs.com/references/latest/type.html

Functions

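Puppet's define keyword declares what I'll call a function here (strictly speaking, a defined resource type). Here's how to declare one with multiple arguments: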

define network_config( $ip, $netmask, $gateway ) {

notify {"$ip, $netmask, $gateway":}

}

network_config { "eth0":

ip => '192.168.0.1',

netmask => '255.255.255.0',

gateway => '192.168.0.254',

}

Installing packages

We’ll see here how to install a package, then use a function to easily install many more. For a single package, it’s simple:

# Install kexec-tools

package { 'kexec-tools':

ensure => 'installed'

}

Here we're asking for a package (kexec-tools) to be installed. To install several, we use an array:

# Install kexec-tools

package {

[

'kexec-tools',

'package2',

'package3'

]:

ensure => 'installed'

}

This is quite practical, and arrays work this way for pretty much any resource type. We can also create a function to which each element of the array is passed:

# Validate that packages are installed

define packages_install () {

notice("Installation of ${name} package")

package {

"${name}":

ensure => 'installed'

}

}

# Set all custom packages (not embedded in the distribution) that need to be installed

packages_install { [

'puppet',

'tmux'

]: }

Some will say this is pointless for this specific case, since the method above already does it; others will find this method more elegant and easier for a Puppet newcomer to understand. The function is named packages_install, and inside it the $name variable holds the title it was declared with, which here is each element of our array.

Including / Excluding modules

You've just seen functions; let's push them a bit further with a way to include or exclude the loading of certain modules. First, a functions file:

# Load or not modules (include/exclude)

define include_modules () {

if ($exclude_modules == undef) or !($name in $exclude_modules) {

include $name

}

}

Here I have another file representing the roles of my servers (we’ll get to that later):

# Load modules

$minimal_modules =

[

'puppet',

'resolvconf',

'packages_defaults',

'configurations_defaults'

]

include_modules{ $minimal_modules: }

Finally, a node file for a server, in which I load certain modules but also exclude some:

node 'srv.deimos.fr' {

$exclude_modules = [ 'resolvconf' ]

include base::minimal

}

Here I use an array, $exclude_modules (with a single element, but you can add several, separated by commas), to specify which modules to exclude. The next line then loads everything the server needs through the include_modules function, which skips the excluded modules.
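Stripped of Puppet's scoping, the include_modules test boils down to a set difference. This Ruby sketch reproduces the condition `($exclude_modules == undef) or !($name in $exclude_modules)` with `nil` standing in for `undef`; `modules_to_include` is a hypothetical helper and the module names are those of the role file above.

```ruby
MINIMAL_MODULES = %w[puppet resolvconf packages_defaults configurations_defaults].freeze

# Keep a module unless an exclusion list is set and contains it.
def modules_to_include(modules, exclude_modules = nil)
  modules.select { |name| exclude_modules.nil? || !exclude_modules.include?(name) }
end

p modules_to_include(MINIMAL_MODULES, ['resolvconf'])
# => ["puppet", "packages_defaults", "configurations_defaults"]
p modules_to_include(MINIMAL_MODULES).size
# => 4
```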

Templates

When you write manifests, you use the 'File' type to send a file to a server. But if the content of that file needs to change based on certain parameters (name, IP, timezone, domain…), you need templates! That's where it gets interesting, since you can script within a template to generate its content. Templates use ERB, Ruby's templating system, so the scripting language is Ruby.

In a template, the following syntax is used:

- For facts, it's simple: prefix the variable with "@". For example, <%= fqdn %> becomes <%= @fqdn %>.

- For your own variables, declared in the manifest that calls the template, also use "@", for example:

My variable myvar defined in the manifest that calls this template has the value <%= @myvar %>.

If you want to access a variable defined outside the current manifest, outside the local scope, use the scope.lookupvar function:

<%= scope.lookupvar('common::config::myvar') %>

You can validate your template via:

erb -P -x -T '-' mytemplate.erb | ruby -c
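The same check can be done from Ruby, which is handy in a test suite: translate the template to Ruby source with trim mode `'-'` (what `-T '-'` selects), then ask the VM to compile it without running it. This sketch relies on MRI's `RubyVM::InstructionSequence`; `template_syntax_ok?` is a hypothetical helper.

```ruby
require 'erb'

# Equivalent in spirit to: erb -P -x -T '-' mytemplate.erb | ruby -c
def template_syntax_ok?(source)
  ruby_code = ERB.new(source, trim_mode: '-').src
  RubyVM::InstructionSequence.compile(ruby_code) # parses only, never runs
  true
rescue SyntaxError
  false
end

puts template_syntax_ok?("<% if @fqdn -%>\nHost <%= @fqdn %>\n<% end -%>\n") # true
puts template_syntax_ok?("<% if @fqdn -%>\nHost <%= @fqdn %>\n")             # false: missing end
```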

Here's an example with OpenSSH to make this concrete. This is the sshd configuration, with the parts that vary according to certain parameters:

# Package generated configuration file

# See the sshd(8) manpage for details

# What ports, IPs and protocols we listen for

<% @ssh_default_port.each do |val| -%>

Port <%= val %>

<% end -%>

# Use these options to restrict which interfaces/protocols sshd will bind to

#ListenAddress ::

#ListenAddress 0.0.0.0

Protocol 2

# HostKeys for protocol version 2

HostKey /etc/ssh/ssh_host_rsa_key

HostKey /etc/ssh/ssh_host_dsa_key

#Privilege Separation is turned on for security

UsePrivilegeSeparation yes

# Lifetime and size of ephemeral version 1 server key

KeyRegenerationInterval 3600

ServerKeyBits 768

# Logging

SyslogFacility AUTH

LogLevel INFO

# Authentication:

LoginGraceTime 120

PermitRootLogin yes

StrictModes yes

RSAAuthentication yes

PubkeyAuthentication yes

#AuthorizedKeysFile %h/.ssh/authorized_keys

# Don't read the user's ~/.rhosts and ~/.shosts files

IgnoreRhosts yes

# For this to work you will also need host keys in /etc/ssh_known_hosts

RhostsRSAAuthentication no

# similar for protocol version 2

HostbasedAuthentication no

# Uncomment if you don't trust ~/.ssh/known_hosts for RhostsRSAAuthentication

#IgnoreUserKnownHosts yes

# To enable empty passwords, change to yes (NOT RECOMMENDED)

PermitEmptyPasswords no

# Change to yes to enable challenge-response passwords (beware issues with

# some PAM modules and threads)

ChallengeResponseAuthentication no

# Change to no to disable tunnelled clear text passwords

#PasswordAuthentication yes

# Kerberos options

#KerberosAuthentication no

#KerberosGetAFSToken no

#KerberosOrLocalPasswd yes

#KerberosTicketCleanup yes

# GSSAPI options

#GSSAPIAuthentication no

#GSSAPICleanupCredentials yes

X11Forwarding yes

X11DisplayOffset 10

PrintMotd no

PrintLastLog yes

TCPKeepAlive yes

#UseLogin no

#MaxStartups 10:30:60

#Banner /etc/issue.net

# Allow client to pass locale environment variables

AcceptEnv LANG LC_*

Subsystem sftp /usr/lib/openssh/sftp-server

UsePAM yes

# AllowUsers <%= @ssh_allowed_users %>

This template uses two kinds of substitution: a multi-line repetition and a simple variable replacement:

- ssh_default_port.each do: allows us to put a line of “Port num_port” for each specified port

- ssh_allowed_users: allows us to give a list of users

These variables are usually declared either in the node definition or in the global configuration. We've just seen how to put a variable or a loop in a template, but you can also use if statements! In short, ERB is a complete language that lets you shape a file as you wish.

These methods are simple and very effective. One subtlety:

- -%>: When a line ends like this, there won’t be a line break thanks to the - at the end.

- %>: There will be a line break here.
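Puppet renders templates with Ruby’s ERB library, so you can try this behaviour outside Puppet. Below is a minimal sketch in plain Ruby (2.6 or later) using ERB’s '-' trim mode, which as far as I know is the mode Puppet enables. The ports array is a made-up example. It is passed as a local variable, the way Puppet 2.7 exposes variables to templates:

```ruby
require 'erb'

# Loop version: every tag ending in -%> swallows the newline that follows it
TRIMMED = <<-'TPL'
<% ports.each do |port| -%>
Port <%= port %>
<% end -%>
TPL

# Same template with plain %> closing tags: their newlines are kept
UNTRIMMED = TRIMMED.gsub('-%>', '%>')

# Render a template with the given variables exposed as local variables
def render(template, vars)
  ERB.new(template, trim_mode: '-').result_with_hash(vars)
end

p render(TRIMMED, ports: [22, 2222])    # "Port 22\nPort 2222\n"
p render(UNTRIMMED, ports: [22, 2222])  # "\nPort 22\n\nPort 2222\n\n"
```

With plain %> tags, each loop tag leaves an empty line behind. That is why the loop lines in the templates of this page end with -%>.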

Inline-templates

This is a small subtlety that may seem unnecessary, but it is very useful for running small Ruby methods inside a manifest! Take the ‘split’ function that exists in Puppet today: you would expect a ‘join’ function to exist too, right? Well, no… at least not in the current version at the time of writing (2.7.18). However, you can use template code directly in a manifest, just as in a template file. See for yourself:

$ldap_servers = [ '192.168.0.1', '192.168.0.2', '127.0.0.1' ]

$comma_ldap_servers = inline_template("<%= (ldap_servers).join(',') %>")

- $ldap_servers: this is a simple array with my list of LDAP servers

- $comma_ldap_servers: the inline_template function evaluates the ERB snippet, which calls Ruby’s join method on the ldap_servers array and joins its elements with commas.

The result is:

$comma_ldap_servers = '192.168.0.1,192.168.0.2,127.0.0.1'
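To see what inline_template evaluates, you can render the same ERB snippet with plain Ruby. This is only an approximation of Puppet’s template engine: it runs outside Puppet, with the array passed in by hand:

```ruby
require 'erb'

# The same ERB string that the manifest passes to inline_template
snippet = "<%= ldap_servers.join(',') %>"
ldap_servers = ['192.168.0.1', '192.168.0.2', '127.0.0.1']

# Evaluate it with ldap_servers exposed as a local variable
comma_ldap_servers = ERB.new(snippet).result_with_hash(ldap_servers: ldap_servers)
puts comma_ldap_servers  # 192.168.0.1,192.168.0.2,127.0.0.1
```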

Facters

“Facts” are scripts (see /usr/lib/ruby/1.8/facter for standard facts) that allow building dynamic variables, which change depending on the environment in which they are executed. For example, we could define a “fact” that determines if we are on a “cluster” type machine based on the presence or absence of a file:

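# is_cluster.rb
Facter.add("is_cluster") do
  setcode do
    # true when the cluster node ID file exists
    # (File.exist? instead of FileTest.exists?, which is deprecated in newer Ruby versions)
    File.exist?("/etc/cluster/nodeid")
  end
end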

Inside templates, facts are plain Ruby strings, so you can call Ruby string methods on them directly (for example downcase or upcase to change case):

#

# Config file for collectd(1).

# Please read collectd.conf(5) for a list of options.

# http://collectd.org/

#

Hostname <%= hostname.downcase %>

FQDNLookup true

Be careful: if you want to test a fact on the target machine, don’t forget to tell Facter where the facts are located on that machine:

export FACTERLIB=/var/lib/puppet/lib/facter

or for Solaris:

export FACTERLIB=/var/opt/csw/puppet/lib/facter

To see the list of facts currently on the system, simply type the facter command:

> facter

facterversion => 1.5.7

hardwareisa => i386

hardwaremodel => i86pc

hostname => PA-OFC-SRV-UAT-2

hostnameldap => PA-OFC-SRV-UAT

id => root

interfaces => lo0,e1000g0,e1000g0_1,e1000g0_2,e1000g1,e1000g2,e1000g3,clprivnet0

...

See http://docs.puppetlabs.com/guides/custom_facts.html for more details.

Dynamic Information

The generate function runs a script on the server (the Puppet master, during catalog compilation) and stores its output in a variable. Here’s an example script:

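#!/usr/bin/ruby
require 'open-uri'

# Fetch the download page (URI.open is required on Ruby >= 3.0, where Kernel#open no longer opens URLs)
page = URI.open("http://www.puppetlabs.com/misc/download-options/").read

# Print only the captured version number, or nothing if the page layout changed
match = page.match(/stable version is ([\d.]*)/)
print match[1] if match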

And in the manifest:

$latestversion = generate("/usr/bin/latest_puppet_version.rb")

notify { "The latest stable Puppet version is ${latestversion}. You're using ${puppetversion}.": }

Magical, isn’t it? :-) You can even pass arguments to the script: add them as extra parameters, separated by commas, after the script path.
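The part of latest_puppet_version.rb worth checking is the version extraction. Here it is as a hypothetical helper, extract_stable_version, separated from the download so you can try it on any string. The sample sentence is made up, and the real page layout may differ:

```ruby
# Hypothetical helper: the extraction step of latest_puppet_version.rb.
# Returns the captured version string, or nil when the pattern is not found.
def extract_stable_version(page)
  match = page.match(/stable version is ([\d.]*)/)
  match && match[1]
end

sample = 'The current stable version is 2.7.18, released recently.'
puts extract_stable_version(sample)           # 2.7.18
p extract_stable_version('no version here')   # nil
```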

Parsers

Parsers are custom functions, written in Ruby, that you can call in manifests; they run on the server side (the Puppet master). For example, I created a parser function that resolves DNS names to IP addresses:

# Dns2IP for Puppet
# Made by Pierre Mavro
# Resolves DNS names to IP addresses
# Usage: pass one string of DNS names/IPs separated by commas; the result is a string in the same format
require 'resolv'

module Puppet::Parser::Functions
  newfunction(:dns2ip, :type => :rvalue) do |arguments|
    result = []
    # Split the comma-separated list into an array
    dns_array = arguments[0].split(',')
    # Append every address found for each DNS name (IP addresses are returned as-is)
    dns_array.each do |dns_name|
      result.concat(Resolv.getaddresses(dns_name.strip))
    end
    # Join the addresses with commas (no empty entries, so no stray commas)
    result.join(',')
  end
end

We can then call this function from our manifests:

$comma_ldap_servers = 'ldap1.deimos.fr,ldap2.deimos.fr,127.0.0.1'

$ip_ldap_servers = dns2ip("${comma_ldap_servers}")

Here I pass a comma-separated list of LDAP servers and get back a comma-separated list of their IP addresses. As you can see, it’s a call a bit like inline_template, but much more powerful.
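You can test the resolution logic outside Puppet. Below is a hypothetical standalone version of dns2ip where the resolver is injectable, so it runs without a DNS server. The fake addresses are invented for the example:

```ruby
require 'resolv'

# Hypothetical standalone version of dns2ip: takes a comma-separated string of
# names or IPs and returns a comma-separated string of addresses.
# The resolver is injectable; by default it uses Resolv.getaddresses.
def dns2ip(comma_list, resolver = ->(name) { Resolv.getaddresses(name) })
  comma_list.split(',')
            .map(&:strip)
            .reject(&:empty?)
            .flat_map { |name| resolver.call(name) }
            .join(',')
end

# Made-up lookup table standing in for DNS; unknown entries resolve to themselves
FAKE_DNS = {
  'ldap1.deimos.fr' => ['192.168.0.1'],
  'ldap2.deimos.fr' => ['192.168.0.2'],
}
fake_resolver = ->(name) { FAKE_DNS.fetch(name, [name]) }

puts dns2ip('ldap1.deimos.fr,ldap2.deimos.fr,127.0.0.1', fake_resolver)
# 192.168.0.1,192.168.0.2,127.0.0.1
```

Names that resolve to nothing simply contribute no address, so the result never contains empty entries between commas.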

Ruby in your manifests

It’s entirely possible to write Ruby in your manifests. See for yourself:

notice( "I am running on node %s" % scope.lookupvar("fqdn") )

The % operator here is Ruby’s String#% method, which formats its arguments just like sprintf.
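Here is a quick plain-Ruby illustration of String#%. The node name is just an example value standing in for the fqdn fact:

```ruby
# Example value standing in for the fqdn fact
fqdn = 'srv1.deimos.fr'

# String#% works like sprintf: a single value for one %s...
line = 'I am running on node %s' % fqdn
puts line  # I am running on node srv1.deimos.fr

# ...or an array when the format has several placeholders
summary = '%s runs Puppet %s' % [fqdn, '2.7.18']
puts summary  # srv1.deimos.fr runs Puppet 2.7.18
```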

Adding a Ruby variable in manifests

If we want to retrieve the current time in a manifest, for example:

require 'time'

scope.setvar("now", Time.now)

notice( "Here is the current time : %s" % scope.lookupvar("now") )

Classes

You can use classes with parameters (optionally with default values) and declare them like this:

class mysql( $package, $socket, $port = "3306" ) {

…

}

class { "mysql":

package => "percona-sql-server-5.0",

socket => "/var/run/mysqld/mysqld.sock",

port => "3306",

}

Using hash tables

Just like arrays, you can also use hashes (hash tables). Look at this example:

$interface = {

name => 'eth0',

address => '192.168.0.1'

}

notice("Interface ${interface[name]} has address ${interface[address]}")

Regex

You can match strings against regular expressions and retrieve the captured patterns: $1 holds the first group and $0 the whole match:

$input = "What a great tool"

if $input =~ /What a (\w+) tool/ {

notice("You said the tool is : '$1'. The complete line is : $0")

}
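For comparison, here is the same match in plain Ruby. In Ruby, $0 is the program name, so this sketch reads the groups from the MatchData object instead:

```ruby
input = 'What a great tool'

# match[1] is the first captured group, match[0] the whole match
match = input.match(/What a (\w+) tool/)
if match
  puts "You said the tool is : '#{match[1]}'. The complete line is : #{match[0]}"
end
```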

Substitution

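The regsubst function performs regular-expression substitution. Here it derives the class C network address from an IP address: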

$ipaddress = '192.168.0.15'

$class_c = regsubst($ipaddress, "(.*)\\..*", "\\1.0")

notify { $ipaddress: }

notify { $class_c: }

This will give me 192.168.0.15 and 192.168.0.0.
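Here is the same substitution in plain Ruby, to show what the pattern does. The greedy (.*) captures everything up to the last dot, and \1 puts it back followed by .0:

```ruby
ipaddress = '192.168.0.15'

# Same pattern and replacement as the regsubst call
class_c = ipaddress.sub(/(.*)\..*/, '\1.0')
puts ipaddress  # 192.168.0.15
puts class_c    # 192.168.0.0
```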

Notify and Require

These two metaparameters are very useful in a manifest. For example, they let a service declare that it requires (require) a package to work, and let a configuration file notify (notify) a service when the file changes so that the daemon restarts. You can also express the same relationships with chaining arrows:

Package["ntp"] -> File["/etc/ntp.conf"] ~> Service["ntp"]

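- ->: means ‘require’ (the resource on the left is applied before the one on the right)

- ~>: means ‘notify’ (same ordering, and the resource on the left also notifies the one on the right when it changes)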

It’s also possible to require whole classes, for example require => Class["ntp"] :-)
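To make the ordering and notification semantics concrete, here is a hypothetical plain-Ruby model of the chain above. It is not how Puppet builds its graph internally, just a sketch: require edges give the apply order, and ~> edges decide which resources get refreshed when another one changes:

```ruby
# requires: resource => resources that must be applied before it
REQUIRES = {
  'Package[ntp]'        => [],
  'File[/etc/ntp.conf]' => ['Package[ntp]'],
  'Service[ntp]'        => ['File[/etc/ntp.conf]'],
}
# notifies: resource => resources refreshed when it changes (the ~> edges)
NOTIFIES = { 'File[/etc/ntp.conf]' => ['Service[ntp]'] }

# Depth-first ordering, dependencies first (no cycle detection in this sketch)
def apply_order(requires)
  order = []
  visit = lambda do |res|
    next if order.include?(res)
    requires.fetch(res, []).each { |dep| visit.call(dep) }
    order << res
  end
  requires.each_key { |res| visit.call(res) }
  order
end

# Resources to refresh (e.g. restart) given the resources that actually changed
def to_refresh(changed, notifies)
  changed.flat_map { |res| notifies.fetch(res, []) }.uniq
end

p apply_order(REQUIRES)                          # ["Package[ntp]", "File[/etc/ntp.conf]", "Service[ntp]"]
p to_refresh(['File[/etc/ntp.conf]'], NOTIFIES)  # ["Service[ntp]"]
```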

The +> operator

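Here’s a great operator that will save us some time. +> appends a value to a parameter of a resource that is already declared elsewhere, instead of replacing it. In the example below, a require on the certificate file is added to the apache2 service:

file { "/etc/ssl/certs/deimos.pem":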

source => "puppet:///modules/apache/deimos.pem",

}

Service["apache2"] {

require +> File["/etc/ssl/certs/deimos.pem"],

}

Assuming apache2 was originally declared with enable and ensure, the result corresponds to:

service { "apache2":

enable => true,

ensure => running,

require => File["/etc/ssl/certs/deimos.pem"],

}
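Below is a hypothetical plain-Ruby model of the difference between overriding a parameter with => and appending to it with +>. The original Package['apache2'] require is made up so that the append is visible:

```ruby
# Hypothetical model of overriding a parameter on an already-declared resource:
# '=>' replaces the current value, '+>' appends to it
def override(params, name, value, append: false)
  result = params.dup
  result[name] = append ? Array(params[name]) + Array(value) : value
  result
end

# Assumed original declaration (the Package['apache2'] require is invented)
apache2 = { 'enable' => true, 'ensure' => 'running', 'require' => ["Package['apache2']"] }
cert = "File['/etc/ssl/certs/deimos.pem']"

p override(apache2, 'require', cert, append: true)['require']  # ["Package['apache2']", "File['/etc/ssl/certs/deimos.pem']"]
p override(apache2, 'require', cert)['require']                # "File['/etc/ssl/certs/deimos.pem']"
```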

Checking software version number

If you need to check the version number of a software to make a decision, here’s a good example:

$app_version = "2.7.16"

$min_version = "2.7.18"

if versioncmp( $app_version, $min_version ) >= 0 {

notify { "Puppet version OK": }

} else {

notify { "Puppet upgrade needed": }

}
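versioncmp compares versions component by component, which is why a plain string comparison is not enough. Here is a sketch of the same check in Ruby. It uses RubyGems’ Gem::Version as a stand-in for Puppet’s own versioncmp implementation, and the two may differ on unusual version strings:

```ruby
require 'rubygems'

# Compare two dotted version strings component by component (-1, 0 or 1)
def version_cmp(a, b)
  Gem::Version.new(a) <=> Gem::Version.new(b)
end

app_version = '2.7.16'
min_version = '2.7.18'
puts version_cmp(app_version, min_version) >= 0 ? 'Puppet version OK' : 'Puppet upgrade needed'

# A plain string comparison gets this wrong: '9' sorts after '1' character by character
p('2.7.9' > '2.7.18')             # true, although 2.7.9 is older
p version_cmp('2.7.9', '2.7.18')  # -1
```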

Virtual resources

Virtual resources are useful, for example, when writing tests. You declare a resource by preceding it with an ‘@’: it is parsed but not applied until you explicitly tell Puppet to (realize). Example:

@package {

'postfix':

ensure => installed

}

realize( Package['postfix'] )

The big advantage of this method is that you can call realize for the same resource in several places in your manifests without causing conflicts!
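Here is a hypothetical plain-Ruby model of virtual resources: declarations are stored but only applied once realized, and realizing the same resource twice is harmless. The Catalog class is invented for illustration and is not Puppet’s API:

```ruby
require 'set'

# Hypothetical catalog: virtual declarations are kept aside until realized
class Catalog
  def initialize
    @virtual = {}
    @realized = Set.new
  end

  def declare_virtual(ref, params)
    @virtual[ref] = params
  end

  # Realizing is idempotent: a Set ignores repeated references
  def realize(ref)
    raise ArgumentError, "unknown virtual resource #{ref}" unless @virtual.key?(ref)
    @realized << ref
  end

  # Only realized resources end up being applied
  def to_apply
    @realized.map { |ref| [ref, @virtual[ref]] }.to_h
  end
end

catalog = Catalog.new
catalog.declare_virtual("Package['postfix']", { 'ensure' => 'installed' })
p catalog.to_apply                      # nothing to apply yet
catalog.realize("Package['postfix']")
catalog.realize("Package['postfix']")   # a second realize elsewhere: no conflict
p catalog.to_apply
```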

Advanced file deletion

The tidy resource deletes files older than a given age or larger than a given size:

tidy { "/var/lib/puppet/reports":

age => "1w",

size => "512k",

recurse => true,

}

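This deletes, recursively, every file under /var/lib/puppet/reports that is at least a week old or at least 512 KB in size (matching either criterion is enough). The directory itself is kept, since tidy only removes directories when rmdirs is set.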
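Here is a hypothetical model of that selection rule in Ruby, as I understand tidy’s behaviour: files matching either the age or the size criterion are removed, and directories are kept without rmdirs. The Entry struct and the sample files are made up:

```ruby
# Hypothetical file entry: age in seconds, size in bytes
Entry = Struct.new(:path, :age_seconds, :size_bytes, :directory)

ONE_WEEK = 7 * 24 * 3600   # age => "1w"
MAX_SIZE = 512 * 1024      # size => "512k"

# Paths that would be removed: files matching age OR size; directories are skipped
def tidy_candidates(entries, max_age: ONE_WEEK, max_size: MAX_SIZE)
  files = entries.reject(&:directory)
  files.select { |e| e.age_seconds >= max_age || e.size_bytes >= max_size }.map(&:path)
end

reports = [
  Entry.new('old.yaml', 8 * 24 * 3600, 10_000, false),
  Entry.new('big.yaml', 3600, 600 * 1024, false),
  Entry.new('fresh.yaml', 3600, 10_000, false),
  Entry.new('srv1.deimos.fr', 30 * 24 * 3600, 4096, true),
]
p tidy_candidates(reports)  # ["old.yaml", "big.yaml"]
```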

Modules

It’s recommended to create one module per service to make the configuration more flexible. This is a common best practice.

I’ll cover different techniques here trying to keep an increasing order of difficulty.

Initializing a module

So we’ll create the appropriate directory structure on the server. For this example, we’ll start with “sudo”, but you can choose something else if you want:

mkdir -p /etc/puppet/modules/sudo/manifests

touch /etc/puppet/modules/sudo/manifests/init.pp

Note that this is necessary for each module: init.pp is the first file loaded when the module is called.

The initial module (base)

We need an initial module that will manage the list of servers, the functions we’ll need, the roles, global variables… It may seem a bit abstract at first, but just know that we need this module to manage all the others. We’ll start with it, since it is one of the most important ones.

As you now know, we need an init.pp file for the first module to be loaded. So we’ll create our directory structure which we’ll call “base”:

mkdir -p /etc/puppet/modules/base/{manifests,lib/puppet/parser/functions}

init.pp

Then we’ll create and fill the init.pp file:

################################################################################

# BASE MODULES #

################################################################################

# Load defaults vars

import "vars.pp"

# Load functions

import "functions.pp"

# Load sysctl module

include "sysctl"

# Load network module

include "network"

# Load roles

import "roles.pp"

# Set servers properties

import "servers.pp"

The import lines pull in the other .pp files of this module, which we’ll create below, a bit like an “include” directive in the configuration of services such as ssh or nrpe. The include lines, on the other hand, load other modules that I’ll create later.

vars.pp

We’ll then create the vars.pp file which will contain all my global variables for my future modules or manifests (*.pp):

################################################################################

# VARS #

################################################################################

# Default admins emails

$root_email = 'xxx@mycompany.com'

# NTP Timezone. Usage :

# Look at /usr/share/zoneinfo/ and add the continent folder followed by the town

$set_timezone = 'Europe/Paris'

# Define empty exclude modules

$exclude_modules = [ ]

# Default LDAP servers

$ldap_servers = [ ]

# Default DNS servers

$dns_servers = [ '192.168.0.69', '192.168.0.27' ]

functions.pp

Now we’ll create functions that add some features currently missing from Puppet, or simplify others:

/*

Puppet Functions

Made by Pierre Mavro

*/

################################################################################

# GLOBAL FUNCTIONS #

################################################################################

# Load or not modules (include/exclude)

define include_modules () {

if ($exclude_modules == undef) or !($name in $exclude_modules) {

include $name

}

}

# Validate that packages are installed

define packages_install () {

notice("Installation of ${name} package")

package {

"${name}":

ensure => present

}

}

# Check that those services are enabled on boot or not

define services_start_on_boot ($enable_status) {

service {

"${name}":

enable => "${enable_status}"

}

}

# Add, remove, comment or uncomment lines

define line ($file, $line, $ensure = 'present') {

case $ensure {

default : {

err("unknown ensure value ${ensure}")

}

present : {

exec {

"echo '${line}' >> '${file}'" :

unless => "grep -qFx '${line}' '${file}'",

logoutput => true

}

}

absent : {

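# Write to a temporary file first: piping grep into tee on the same file can truncate it

exec {

"grep -vFx '${line}' '${file}' > '${file}.puppet-tmp' ; cat '${file}.puppet-tmp' > '${file}' ; rm -f '${file}.puppet-tmp'" :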

onlyif => "grep -qFx '${line}' '${file}'",

logoutput => true

}

}

uncomment : {

exec {

"sed -i -e'/${line}/s/#\+//' '${file}'" :

onlyif => "test `grep '${line}' '${file}' | grep '^#' | wc -l` -ne 0",

logoutput => true

}

}

comment : {

exec {

"/bin/sed -i -e'/${line}/s/\(.\+\)$/#\1/' '${file}'" :

onlyif => "test `grep '${line}' '${file}' | grep -v '^#' | wc -l` -ne 0",

logoutput => true

}

}

# Use this resource instead if your platform's grep doesn't support -vFx;

# note that this command has been known to have problems with lines containing quotes.

# exec { "/usr/bin/perl -ni -e 'print unless /^\Q${line}\E$/' '${file}'":

# onlyif => "grep -qFx '${line}' '${file}'"

# }

}

}

# Comment out each line of a list in the given file

define comment_lines ($filename) {

line {

"${name}" :

file => "${filename}",

line => "${name}",

ensure => comment

}

}

# Sysctl management

class sysctl {

define conf ($value) {

# $name is provided by define invocation

# guid of this entry

$key = $name

$context = "/files/etc/sysctl.conf"

augeas {

"sysctl_conf/$key" :

context => "$context",

onlyif => "get $key != '$value'",

changes => "set $key '$value'",

notify => Exec["sysctl"],

}

}

file {

"sysctl_conf" :

name => $::operatingsystem ? {

default => "/etc/sysctl.conf",

},

}

exec {

"sysctl -p" :

alias => "sysctl",

refreshonly => true,

subscribe => File["sysctl_conf"],

}

}

# Function to add ssh public keys

define ssh_add_key ($user, $key) {

# Create users home directory if absent

exec {

"mkhomedir_${name}" :

path => "/bin:/usr/bin",

command => "cp -Rfp /etc/skel ~${user}; chown -Rf ${user}: ~${user}",

onlyif => "test `ls ~$user 2>&1 >/dev/null | wc -l` -ne 0"

}

ssh_authorized_key {

"${name}" :

ensure => present,

key => "$key",

type => 'ssh-rsa',

user => "$user",

require => Exec["mkhomedir_${name}"]

}

}

# Limits.conf management

define limits_conf ($domain = "root", $type = "soft",$item = "nofile", $value = "10000") {

# guid of this entry

$key = "$domain/$type/$item"

# augtool> match /files/etc/security/limits.conf/domain[.="root"][./type="hard" and ./item="nofile" and ./value="10000"]

$context = "/files/etc/security/limits.conf"

$path_list = "domain[.=\"$domain\"][./type=\"$type\" and ./item=\"$item\"]"

$path_exact = "domain[.=\"$domain\"][./type=\"$type\" and ./item=\"$item\" and ./value=\"$value\"]"

augeas {

"limits_conf/$key" :

context => "$context",

onlyif => "match $path_exact size==0",

changes => [

# remove all matching to the $domain, $type, $item, for any $value

"rm $path_list",

# insert new node at the end of tree

"set domain[last()+1] $domain",

# assign values to the new node

"set domain[last()]/type $type",

"set domain[last()]/item $item",

"set domain[last()]/value $value",],

}

}

So we have:

- include_modules: the ability to load or not load modules via an array passed as an argument (as described earlier in this documentation)

- packages_install: the ability to verify that packages are installed on the machine

- services_start_on_boot: the ability to verify that services are correctly enabled at machine boot

- line: the ability to ensure that a line in a file is present, absent, commented, or uncommented

- comment_lines: the ability to comment out multiple lines via an array passed as an argument

- sysctl (class with its conf define): the ability to manage the sysctl.conf file

- ssh_add_key: the ability to easily deploy SSH public keys

- limits_conf: the ability to simply manage the limits.conf file

All these functions are of course not mandatory but greatly help with the use of puppet.
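To make the semantics of the line define explicit, here is a hypothetical pure-Ruby model, ensure_line, that works on an array of lines instead of a file. It is a sketch rather than a port. present and absent compare whole lines exactly, like grep -Fx. comment and uncomment use a plain substring match, whereas the sed commands in the define use a regex. This version also skips lines that are already commented:

```ruby
# Hypothetical model of the `line` define applied to an array of lines
def ensure_line(lines, line, ensure_value)
  case ensure_value
  when 'present'
    lines.include?(line) ? lines : lines + [line]
  when 'absent'
    lines.reject { |l| l == line }
  when 'comment'
    lines.map { |l| l.include?(line) && !l.start_with?('#') ? "##{l}" : l }
  when 'uncomment'
    # Like sed 's/#\+//': drop the first run of '#' on matching lines
    lines.map { |l| l.include?(line) ? l.sub(/#+/, '') : l }
  else
    raise ArgumentError, "unknown ensure value #{ensure_value}"
  end
end

sysctl = ['net.ipv4.ip_forward = 0', 'net.bridge.bridge-nf-call-iptables = 0']
commented = ensure_line(sysctl, 'net.bridge.bridge-nf-call-iptables', 'comment')
p commented  # ["net.ipv4.ip_forward = 0", "#net.bridge.bridge-nf-call-iptables = 0"]
p ensure_line(commented, 'net.bridge.bridge-nf-call-iptables', 'uncomment') == sysctl  # true
```

Running present twice gives the same result as running it once, which is what the unless => "grep -qFx ..." guard achieves in the define.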

roles.pp

Then we have a file containing the server roles. Think of them as groups to which we’ll assign the servers:

################################################################################

# ROLES #

################################################################################

# Level 1 : Minimal

class base::minimal

{

# Load modules

$minimal_modules =

[

'stdlib',

'puppet',

'resolvconf',

'packages_defaults',

'configurations_defaults',

'openssh',

'selinux',

'grub',

'kdump',

'tools',

'timezone',

'ntp',

'mysecureshell',

'openldap',

'acl',

'sudo',

'snmpd',

'postfix',

'nrpe'

]

include_modules{ $minimal_modules: }

}

# Level 2 : Cluster

class base::cluster inherits base::minimal

{

# Load modules

$cluster_modules =

[

'packages_cluster',

'configurations_cluster'

]

include_modules{ $cluster_modules: }

}

# Level 2 : Low Latency

class base::low_latency inherits base::minimal

{

# Load modules

$lowlatency_modules =

[

'low_latency'

]

include_modules{ $lowlatency_modules: }

}

# Level 3 : Low Latency + Cluster

class base::low_latency_cluster inherits base::minimal

{

include base::cluster

include base::low_latency

}

I’ve defined classes here that inherit from each other, organized by levels: level 3 depends on levels 2 and 1, level 2 depends on level 1, and level 1 has no dependencies. This gives me some flexibility. For example, I know that if I load my cluster class, my minimal class will be loaded too. You’ll also notice the ‘base::minimal’ notation: it’s recommended to refer to your classes by the module name followed by ‘::’. This makes the manifests much easier to read.
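You can sketch the resulting module lists in plain Ruby. This hypothetical model mirrors roles.pp with a shortened minimal list. It applies a node’s exclusions the way include_modules does with $exclude_modules:

```ruby
# Hypothetical model of roles.pp: parent roles plus each role's own modules
# (the minimal list is shortened; the real one is $minimal_modules)
ROLES = {
  'minimal'             => { parents: [], modules: %w[stdlib puppet resolvconf ntp sudo] },
  'cluster'             => { parents: %w[minimal], modules: %w[packages_cluster configurations_cluster] },
  'low_latency'         => { parents: %w[minimal], modules: %w[low_latency] },
  'low_latency_cluster' => { parents: %w[cluster low_latency], modules: [] },
}

# Modules loaded for a role, parents first, minus the node's exclusions
def modules_for(role, exclude_modules = [])
  spec = ROLES.fetch(role)
  inherited = spec[:parents].flat_map { |parent| modules_for(parent) }
  (inherited + spec[:modules]).uniq - exclude_modules
end

p modules_for('low_latency_cluster', %w[ntp])
# ["stdlib", "puppet", "resolvconf", "sudo", "packages_cluster", "configurations_cluster", "low_latency"]
```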

servers.pp

And finally, I have a file where I declare my servers (nodes):

/*##############################################################################

# SERVERS #

################################################################################

== Automated Dependencies Roles ==

* cluster -> minimal

* low_latency -> minimal

* low_latency_cluster -> low_latency + cluster + minimal

== Template for servers ==

node /regex/

{

#$exclude_modules = [ ]

#$ldap_servers = 'x.x.x.x'

#$set_timezone = 'Europe/Paris'

#$dns_servers = [ ]

#include base::minimal

#include base::cluster

#include base::low_latency

#include base::low_latency_cluster

}

##############################################################################*/

# One server

node 'srv1.deimos.fr' {

$ldap_servers = [ '127.0.0.1' ]

include base::minimal

}

# Multiple servers

node 'srv2.deimos.fr', 'srv3.deimos.fr' {

$ldap_servers = [ '127.0.0.1' ]

include base::minimal

}

# Multiple regex based servers

node /srv-prd-\d+/ {

# Set variables before the includes so that the included classes see them

$set_timezone = 'Europe/London'

include base::minimal

include base::low_latency

}

Here I’ve declared a single server, several servers at once, and a regex matching multiple servers. For info, node definitions can also be stored in LDAP.

Parser

Let’s create the necessary directory structure:

mkdir -p /etc/puppet/modules/base/lib/puppet/parser/functions

Then add an ‘empty’ parser function that detects whether an array, hash or string is empty:

#

# empty.rb

#

# Copyright 2011 Puppet Labs Inc.

# Copyright 2011 Krzysztof Wilczynski

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

module Puppet::Parser::Functions

newfunction(:empty, :type => :rvalue, :doc => <<-EOS

Returns true if given array type or hash type has no elements or when a string

value is empty and false otherwise.

Prototype:

empty(x)

Where x is either an array, a hash or a string value.

For example:

Given the following statements:

$a = ''

$b = 'abc'

$c = []

$d = ['d', 'e', 'f']

$e = {}

$f = { 'x' => 1, 'y' => 2, 'z' => 3 }

notice empty($a)

notice empty($b)

notice empty($c)

notice empty($d)

notice empty($e)

notice empty($f)

The result will be as follows:

notice: Scope(Class[main]): true

notice: Scope(Class[main]): false

notice: Scope(Class[main]): true

notice: Scope(Class[main]): false

notice: Scope(Class[main]): true

notice: Scope(Class[main]): false

EOS

) do |*arguments|

#

# This is to ensure that whenever we call this function from within

# the Puppet manifest or alternatively from a template it will always

# do the right thing ...

#

arguments = arguments.shift if arguments.first.is_a?(Array)

raise Puppet::ParseError, "empty(): Wrong number of arguments " +

"given (#{arguments.size} for 1)" if arguments.size < 1

value = arguments.shift

unless [Array, Hash, String].include?(value.class)

raise Puppet::ParseError, 'empty(): Requires either array, hash ' +

'or string type to work with'

end

value.empty?

end

end

# vim: set ts=2 sw=2 et :

# encoding: utf-8

Module Examples

stdlib

This stdlib module is not essential, but it’s useful if you’re missing features in Puppet. Indeed, it brings a fairly large set of functions:

abs ensure_resource include loadyaml reverse to_bytes

bool2num err info lstrip rstrip type

capitalize extlookup inline_template md5 search unique

chomp fail is_array member sha1 upcase

chop file is_domain_name merge shellquote validate_absolute_pa

create_resources flatten is_float notice size validate_array

crit fqdn_rand is_hash num2bool sort validate_bool

debug fqdn_rotate is_integer parsejson squeeze validate_hash

defined generate is_ip_address parseyaml str2bool validate_re

defined_with_params get_module_path is_mac_address prefix str2saltedsha512 validate_slength

delete getvar is_numeric range strftime validate_string

delete_at grep is_string realize strip values

downcase has_key join regsubst swapcase values_at

emerg hash keys require time zip

empty

First go to the modules directory:

cd /etc/puppet/modules

Download the latest version, decompress it and rename the extracted directory to stdlib:

wget http://forge.puppetlabs.com/puppetlabs/stdlib/3.2.0.tar.gz

tar -xzf 3.2.0.tar.gz

mv puppetlabs-stdlib-3.2.0 stdlib

rm -f 3.2.0.tar.gz

Puppet

This one is a bit amusing, because it simply manages the configuration of the Puppet client itself. However, it can be very useful for managing Puppet’s own updates. So let’s create the directory structure:

mkdir -p /etc/puppet/modules/puppet/{manifests,files}

init.pp

The init.pp file here chooses the class to load according to the OS:

/*

Puppet Module for Puppet

Made by Pierre Mavro

*/

class puppet {

# Check OS and request the appropriate function

case $::operatingsystem {

'RedHat' : {

include ::puppet::redhat

}

#'sunos': { include packages_defaults::solaris }

default : {

notice("Module ${module_name} is not supported on ${::operatingsystem}")

}

}

}

redhat.pp

/*

Puppet Module for Puppet

Made by Pierre Mavro

*/

class puppet::redhat {

# Change default configuration

file {

'/etc/puppet/puppet.conf' :

ensure => present,

source => "puppet:///modules/puppet/${::osfamily}.puppet.conf",

mode => 644,

owner => root,

group => root

}

# Disable service on boot and be sure it is not started

service {

'puppet-srv' :

name => 'puppet',

# Let this line commented if you're using Puppet Dashboard

#ensure => stopped,

enable => false

}

}

In the source parameter, we use the osfamily fact (collected on the client side), so the file that gets loaded depends on the OS family. On Red Hat machines, the configuration file served by Puppet is therefore ‘RedHat.puppet.conf’. For the service, we make sure the Puppet agent is not started at boot. ensure => stopped stays commented out if you use Puppet Dashboard. In fact, I don’t want the agent to run and synchronize every 30 minutes (the default value). I find it too dangerous, and I prefer to decide through other mechanisms (SSH, MCollective…) when a synchronization should happen.

files

In files, the RedHat.puppet.conf file holds the base configuration that applies to all Red Hat family machines:

[main]

# The Puppet log directory.

# The default value is '$vardir/log'.

logdir = /var/log/puppet

# Where Puppet PID files are kept.

# The default value is '$vardir/run'.

rundir = /var/run/puppet

# Where SSL certificates are kept.

# The default value is '$confdir/ssl'.

ssldir = $vardir/ssl

# Puppet master server

server = puppet-prd.deimos.fr

# Add custom facts

pluginsync = true

pluginsource = puppet://$server/plugins

factpath = /var/lib/puppet/lib/facter

[agent]

# The file in which puppetd stores a list of the classes

# associated with the retrieved configuration. Can be loaded in

# the separate ``puppet`` executable using the ``--loadclasses``

# option.

# The default value is '$confdir/classes.txt'.

classfile = $vardir/classes.txt

# Where puppetd caches the local configuration. An

# extension indicating the cache format is added automatically.

# The default value is '$confdir/localconfig'.

localconfig = $vardir/localconfig

# Reporting

report = true

# Inspect reports for a compliance workflow

archive_files = true

resolvconf

I made this module to manage the resolv.conf configuration file. Usage is quite simple: it reads the DNS servers from the $dns_servers array defined in vars.pp of the base module. So fill in the default DNS servers:

# Default DNS servers

$dns_servers = [ '192.168.0.69', '192.168.0.27' ]

You can override these values directly at the level of one or more nodes if you need to have specific configurations for certain nodes (in the servers.pp file of the base module):

# One server

node 'srv.deimos.fr' {

$dns_servers = [ '127.0.0.1' ]

include base::minimal

}

Let’s create the directory structure:

mkdir -p /etc/puppet/modules/resolvconf/{manifests,templates}

init.pp

/*

Resolv.conf Module for Puppet

Made by Pierre Mavro

*/

class resolvconf {

# Check OS and request the appropriate function

case $::operatingsystem {

'RedHat' : {

include resolvconf::redhat

}

#'sunos': { include packages_defaults::solaris }

default : {

notice("Module ${module_name} is not supported on ${::operatingsystem}")

}

}

}

redhat.pp

Here’s the configuration for Red Hat. I use a template file here, which is filled with the contents of the $dns_servers array:

/*

Resolvconf Module for Puppet

Made by Pierre Mavro

*/

class resolvconf::redhat {

# resolv.conf file

file {

"/etc/resolv.conf" :

content => template("resolvconf/resolv.conf"),

mode => 644,

owner => root,

group => root

}

}

templates

And finally my resolv.conf template file:

# Generated by Puppet

domain deimos.fr

search deimos.fr deimos.lan

<% dns_servers.each do |dnsval| -%>

nameserver <%= dnsval %>

<% end -%>

Here we have a Ruby loop that goes through the $dns_servers array and builds the resolv.conf file, writing one ‘nameserver’ line per server.


packages_defaults

I use this module to install or uninstall packages that I absolutely need on all my machines. Let’s create the directory structure:

mkdir -p /etc/puppet/modules/packages_defaults/manifests

init.pp

class packages_defaults {

# Check OS and request the appropriate function

case $::operatingsystem {

'RedHat' : {

include ::packages_defaults::redhat

}

#'sunos': { include packages_defaults::solaris }

default : {

notice("Module ${module_name} is not supported on ${::operatingsystem}")

}

}

}

redhat.pp

I could have grouped everything into a single block, but for readability I preferred to separate the packages shipped with the distribution from those I added in a custom repository:

# Red Hat Defaults packages

class packages_defaults::redhat

{

# Set all default packages (embedded in distribution) that need to be installed

packages_install

{ [

'nc',

'tree',

'telnet',

'dialog',

'freeipmi',

'glibc-2.12-1.80.el6.i686'

]: }

# Set all custom packages (not embedded in distribution) that need to be installed

packages_install

{ [

'puppet',

'tmux'

]: }

}

configurations_defaults

This module, like the previous one, applies to the OS as delivered by default. Here I want to adjust parts of the base system itself, without touching any particular software. Let’s create the directory structure:

mkdir -p /etc/puppet/modules/configurations_defaults/{manifests,templates,lib/facter}

init.pp

class configurations_defaults {

import '*.pp'

# Configure common security parameters

include configurations_defaults::common

# Check OS and request the appropriate function

case $::operatingsystem {

'RedHat' : {

include configurations_defaults::redhat

}

default : {

notice("Module ${module_name} is not supported on ${::operatingsystem}")

}

}

}

Here I load all .pp files at startup, then include the configuration common to all systems (common), then apply the configuration specific to each OS.

common.pp

Here I want to have the same base motd file for all my machines. You’ll see later why it appears as a template:

class configurations_defaults::common

{

# Motd banner for all servers

file {

'/etc/motd':

ensure => present,

content => template("configurations_defaults/motd"),

mode => 644,

owner => root,

group => root

}

}

redhat.pp

Here I load security options, automatically configure bonding on my machines, and set a sysctl option:

class configurations_defaults::redhat

{

# Security configurations

include 'configurations_defaults::redhat::security'

# Configure bonding

include 'configurations_defaults::redhat::network'

# Set sysctl options

sysctl::conf

{

'vm.swappiness': value => '0';

}

}

security.pp

You’ll see that this file does quite a lot:

class configurations_defaults::redhat::security inherits configurations_defaults::redhat

{

# Manage Root passwords

$sha512_passwd='$6$lhkAz...'

$md5_passwd='$1$Fcwy...'

if ($::passwd_algorithm == sha512)

{

# sha512 root password

$root_password="$sha512_passwd"

}

else

{

# MD5 root password

$root_password="$md5_passwd"

}

user {

'root':

ensure => present,

password => "$root_password"

}

# Enable auditd service

service {

"auditd" :

enable => true,

ensure => 'running',

}

# Comment unwanted sysctl lines

$sysctl_file = '/etc/sysctl.conf'

$sysctl_comment_lines =

[

"net.bridge.bridge-nf-call-ip6tables",

"net.bridge.bridge-nf-call-iptables",

"net.bridge.bridge-nf-call-arptables"

]

comment_lines {

$sysctl_comment_lines :

filename => "$sysctl_file"

}

# Add security sysctl values

sysctl::conf

{

'vm.mmap_min_addr': value => '65536';

'kernel.modprobe': value => '/bin/false';

'kernel.kptr_restrict': value => '1';

'net.ipv6.conf.all.disable_ipv6': value => '1';

}

# Deny kernel read to other users

case $::kernel_security_rights {

'0': {

exec {'chmod_kernel':

command => 'chmod o-r /boot/{vmlinuz,System.map}-*'

}

}

'1' : {

notice("Kernel files have security rights")

}

}

# Change opened file descriptor value and avoid fork bombs by limiting the number of processes

limits_conf {

"open_fd": domain => '*', type => '-', item => nofile, value => 2048;

"fork_bomb_soft": domain => '@users', type => soft, item => nproc, value => 200;

"fork_bomb_hard": domain => '@users', type => hard, item => nproc, value => 300;

}

}

Some explanations are needed:

- Manage Root passwords: we define the desired root password hashed with both SHA-512 and MD5. Depending on the algorithm configured on the machine, the matching hash is applied. For this detection I use a fact (passwd_algorithm.rb)

- Enable auditd service: we make sure that the auditd service will start at boot and is currently running

- Comment unwanted sysctl lines: we ask that certain lines present in sysctl be commented if they exist

- Add security sysctl values: we add sysctl rules, and assign them a value

- Deny kernel read to others users: I created a facter here, which checks the rights of kernel files (kernel_rights.rb)

- Change opened file descriptor value: uses the limits_conf function to manage the limits.conf file. Here I’ve changed the default number of file descriptors and added a small safeguard against fork bombs.

facter

Here are the facts used by some of the configurations above.

passwd_algorithm.rb

This facter will determine the algorithm used for authentication:

# Get Passwd Algorithm

Facter.add("passwd_algorithm") do

setcode do

Facter::Util::Resolution.exec("grep ^PASSWDALGORITHM /etc/sysconfig/authconfig | awk -F'=' '{ print $2 }'")

end

end
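You can do the same extraction in pure Ruby, without shelling out. The sketch below uses a hypothetical passwd_algorithm helper and made-up sample content, and parses the text of /etc/sysconfig/authconfig. Inside a fact, you would pass it File.read of that file:

```ruby
# Hypothetical helper: value of PASSWDALGORITHM in the given authconfig text, or nil
def passwd_algorithm(authconfig_text)
  authconfig_text[/^PASSWDALGORITHM=(\S+)/, 1]
end

sample = "USESHADOW=yes\nPASSWDALGORITHM=sha512\nUSEMKHOMEDIR=no\n"
puts passwd_algorithm(sample)  # sha512
```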

kernel_rights.rb

This fact determines whether users other than the owner and group (“others”) can read the kernel files installed on the current machine:

# Get security rights

Facter.add(:kernel_security_rights) do

# Get kernel files where rights will be checked

kernel_files = Dir.glob("/boot/{vmlinuz,System.map}-*")

current_rights=1

# Check each files

kernel_files.each do |file|

# Get file mode

full_rights = sprintf("%o", File.stat(file).mode)

# Get last number (correponding to other rights)

other_rights = Integer(full_rights) % 10

# Check whether "others" have the read bit (4)

if other_rights >= 4

current_rights=0

end

end

setcode do

# kernel_security_rights is 0 if any kernel file is readable by others, 1 otherwise

current_rights

end

end
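The same check can be expressed with a bitmask on `File.stat(...).mode`: the "others" read permission is octal 004, so `mode & 0o004` is non-zero exactly when others can read the file. The standalone sketch below returns the offending files rather than a 0/1 flag, which helps find which kernel triggered the fact; the method names and the `pattern` parameter are hypothetical, added so it can run against any directory:

```ruby
# Hypothetical helper: lists files matching a glob that are readable by "others".
# The default pattern is the one the kernel_security_rights fact uses.
def world_readable_files(pattern = "/boot/{vmlinuz,System.map}-*")
  Dir.glob(pattern).select do |file|
    # 0o004 is the read bit of the "others" permission class.
    (File.stat(file).mode & 0o004) != 0
  end
end

# Same semantics as the fact: 1 when no file is world-readable, 0 otherwise.
def kernel_security_rights(pattern = "/boot/{vmlinuz,System.map}-*")
  world_readable_files(pattern).empty? ? 1 : 0
end

puts kernel_security_rights
```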

get_network_infos.rb

This fact retrieves the current IP address of the public interface (bond0 if it exists, otherwise eth0), its netmask, and the default gateway:

# Get public IP address

Facter.add(:public_ip) do

setcode do

# Use the bond0 IP address if bond0 exists

if File.exist? "/proc/sys/net/ipv4/conf/bond0"

Facter::Util::Resolution.exec("ip addr show dev bond0 | awk '/inet/{print $2}' | head -1 | sed 's/\\/.*//'")

else

# Otherwise use the eth0 IP address if eth0 exists

if File.exist? "/proc/sys/net/ipv4/conf/eth0"

Facter::Util::Resolution.exec("ip addr show dev eth0 | awk '/inet/{print $2}' | head -1 | sed 's/\\/.*//'")

else

# Else return error

'unknown (puppet issue)'

end

end

end

end

# Get the netmask of the public interface

Facter.add(:public_netmask) do

setcode do

# Use the bond0 netmask if bond0 exists (only the first IPv4 "inet " line, so inet6 lines are ignored)

if File.exist? "/proc/sys/net/ipv4/conf/bond0"

Facter::Util::Resolution.exec("ifconfig bond0 | awk '/inet /{print $4; exit}' | sed 's/.*://'")

else

# Otherwise use the eth0 netmask if eth0 exists

if File.exist? "/proc/sys/net/ipv4/conf/eth0"

Facter::Util::Resolution.exec("ifconfig eth0 | awk '/inet /{print $4; exit}' | sed 's/.*://'")

else

# Else set a default netmask

'255.255.255.0'

end

end

end

end

# Get default gateway

Facter.add(:default_gateway) do

setcode do

Facter::Util::Resolution.exec("ip route | awk '/default/{print $3}'")

end

end
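The IP address and netmask facts rely on two different tools (ip and ifconfig). As an alternative, both values can be derived from a single `ip -o -4 addr show dev <iface>` line, which prints the address in CIDR form (for example `192.168.1.10/24`); the prefix length then only has to be converted into a dotted netmask. A minimal sketch, where `parse_inet` and `prefix_to_netmask` are hypothetical helper names:

```ruby
# Hypothetical helper: converts a CIDR prefix length (0..32) to a dotted netmask.
def prefix_to_netmask(prefix)
  raise ArgumentError, "invalid prefix: #{prefix}" unless (0..32).cover?(prefix)
  # Set the top `prefix` bits of a 32-bit integer, then split it into 4 octets.
  mask = (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF
  [mask].pack("N").unpack("C4").join(".")
end

# Hypothetical helper: extracts [ip, netmask] from `ip -o -4 addr show` output.
# Only the first IPv4 address is used, like the `head -1` in the fact above.
def parse_inet(output)
  match = output.match(%r{\binet (\d{1,3}(?:\.\d{1,3}){3})/(\d{1,2})\b})
  return nil unless match
  [match[1], prefix_to_netmask(match[2].to_i)]
end

sample = "2: eth0    inet 192.168.1.10/24 brd 192.168.1.255 scope global eth0"
p parse_inet(sample)
```

In a fact, the output would come from `Facter::Util::Resolution.exec("ip -o -4 addr show dev bond0")`, as in the existing code; matching `inet ` with a trailing space skips inet6 lines.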

network.pp

Here we configure bonding and a few other network-related settings:

class configurations_defaults::redhat::network inherits configurations_defaults::redhat

{

# Disable network interface renaming

augeas {

"grub_udev_net" :

context => "/files/etc/grub.conf",

changes => "set title[1]/kernel/biosdevname 0"

}

# Load bonding module at boot

line {

'load_bonding':

file => '/etc/modprobe.d/bonding.conf',

line => 'alias bond0 bonding',

ensure => present

}

# Bonded master interface - static

network::bond::static {

"bond0" :

ipaddress => "$::public_ip",

netmask => "$::public_netmask",

gateway => "$::default_gateway",

bonding_opts => "mode=active-backup",

ensure => "up"

}

# Bonded slave interface - static

network::bond::slave {

"eth0" :

macaddress => $::macaddress_eth0,

master => "bond0",

}

# Bonded slave interface - static

network::bond::slave {

"eth1" :

macaddress => $::macaddress_eth1,

master => "bond0",

}

}

- Disable network interface renaming: we add the biosdevname=0 argument to the kernel line of the first grub entry, so that interfaces are not renamed and keep their ethX names. I wrote an article about this if you're interested.

- Load bonding module at boot: we make sure that the bonding module is loaded at boot time, through an alias mapping bond0 to the bonding driver

- Bonded interfaces: I refer you to the bonding module available on Puppet Forge, as well as to the bonding documentation if you're not familiar with it. I also created a custom fact (get_network_infos.rb) to retrieve the IP address of the public interface (eth0, the one I connect through), along with the netmask and gateway already configured on the machine
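On Red Hat systems, a bond like this is ultimately described by initscripts files in /etc/sysconfig/network-scripts/: the master carries the address and the bonding options, while each slave only points at its master. The sketch below renders, for illustration only, the kind of ifcfg-bond0 and ifcfg-eth0 content such a setup corresponds to; the exact keys written by the Forge network module may differ, and the `ifcfg_master`/`ifcfg_slave` helpers and sample addresses are hypothetical:

```ruby
# Hypothetical helpers sketching RHEL initscripts files for an active-backup bond.
def ifcfg_master(device:, ipaddress:, netmask:, gateway:, bonding_opts:)
  <<~IFCFG
    DEVICE=#{device}
    BOOTPROTO=none
    ONBOOT=yes
    IPADDR=#{ipaddress}
    NETMASK=#{netmask}
    GATEWAY=#{gateway}
    BONDING_OPTS="#{bonding_opts}"
  IFCFG
end

# A slave has no address of its own; it is enslaved to the master device.
def ifcfg_slave(device:, macaddress:, master:)
  <<~IFCFG
    DEVICE=#{device}
    HWADDR=#{macaddress}
    MASTER=#{master}
    SLAVE=yes
    ONBOOT=yes
    BOOTPROTO=none
  IFCFG
end

puts ifcfg_master(device: "bond0", ipaddress: "192.168.1.10",
                  netmask: "255.255.255.0", gateway: "192.168.1.1",
                  bonding_opts: "mode=active-backup")
puts ifcfg_slave(device: "eth0", macaddress: "00:11:22:33:44:55", master: "bond0")
```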

templates

As mentioned earlier, we manage a motd template that displays, in addition to a text, the hostname of the machine you connect to (on the last line):

================================================================================

This is an official computer system and is the property of Deimos. It

is for authorized users only. Unauthorized users are prohibited. Users

(authorized or unauthorized) have no explicit or implicit expectation of

privacy. Any or all uses of this system may be subject to one or more of

the following actions: interception, monitoring, recording, auditing,

inspection and disclosing to security personnel and law enforcement

personnel, as well as authorized officials of other agencies, both domestic

and foreign. By using this system, the user consents to these actions.

Unauthorized or improper use of this system may result in administrative

disciplinary action and civil and criminal penalties. By accessing this

system you indicate your awareness of and consent to these terms and

conditions of use. Discontinue access immediately if you do not agree to

the conditions stated in this notice.

================================================================================

<%= @hostname %>
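Puppet renders such templates with Ruby's ERB, filling in facts such as the hostname. To preview the banner locally, here's a minimal sketch using only the standard library. It assumes the `@hostname` form, which is how variables are referenced in templates in recent Puppet versions; the `MotdPreview` class and the sample hostname are hypothetical:

```ruby
require 'erb'

# Hypothetical preview helper: exposes facts as instance variables,
# the way Puppet templates see them (@hostname, ...).
class MotdPreview
  def initialize(facts)
    facts.each { |name, value| instance_variable_set("@#{name}", value) }
  end

  # Evaluates the template with this object's binding, so @hostname resolves.
  def render(template)
    ERB.new(template).result(binding)
  end
end

template = "=" * 80 + "\n<%= @hostname %>\n"
puts MotdPreview.new("hostname" => "web01").render(template)
```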

OpenSSH - 1

Here’s a first example for OpenSSH. Let’s create the directory structure:

mkdir -p /etc/puppet/modules/openssh/{manifests,templates,files,lib/facter}

init.pp

/*

OpenSSH Module for Puppet

Made by Pierre Mavro

*/

class openssh {

# Check OS and request the appropriate function

case $::operatingsystem {

'RedHat' : {

include openssh::redhat

}

#'sunos': { include packages_defaults::solaris }

default : {

notice("Module ${module_name} is not supported on ${::operatingsystem}")

}

}

}

redhat.pp

Here we install and configure OpenSSH, then include the common class, because OpenSSH needs to be installed and configured before moving on to the common part:

/*

OpenSSH Module for Puppet

Made by Pierre Mavro

*/

class openssh::redhat {

# Install ssh package

package {

'openssh-server' :

ensure => present

}

# SSHd config file

file {

"/etc/ssh/sshd_config" :

source => "puppet:///modules/openssh/sshd_config.$::operatingsystem",

mode => '0600',

owner => root,

group => root,

notify => Service["sshd"]

}

service {

'sshd' :

enable => true,

ensure => running,

require => File['/etc/ssh/sshd_config']

}

include openssh::common

}

In the service part, require only guarantees that the configuration file is managed before the service. It's the notify => Service["sshd"] in the file resource that restarts sshd whenever the configuration file changes.
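The source attribute uses a puppet:/// URL. For a URL of the form puppet:///modules/<module>/<file>, the master serves the file from the files/ directory of that module, so sshd_config.RedHat has to live in the openssh module's files/ directory. A minimal sketch of that mapping, assuming the /etc/puppet/modules module path used in this article (the `module_file_path` helper is hypothetical):

```ruby
# Hypothetical helper: maps puppet:///modules/<module>/<path> to the file
# the master serves, i.e. <modulepath>/<module>/files/<path>.
def module_file_path(url, modulepath = "/etc/puppet/modules")
  prefix = "puppet:///modules/"
  raise ArgumentError, "not a module URL: #{url}" unless url.start_with?(prefix)
  mod, path = url.delete_prefix(prefix).split("/", 2)
  raise ArgumentError, "missing file path in #{url}" if path.nil? || path.empty?
  File.join(modulepath, mod, "files", path)
end

puts module_file_path("puppet:///modules/openssh/sshd_config.RedHat")
```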

common.pp

Here, we make sure that the directory where SSH keys are stored exists with the right permissions, then we include another class that contains all the keys we want to deploy:

/*

OpenSSH Module for Puppet

Made by Pierre Mavro

*/

class openssh::common {

# Check that .ssh directory exist with correct rights

file {

"$::home_root/.ssh" :

ensure => directory,

mode => '0700',

owner => root,

group => root

}

# Load all public keys

include openssh::ssh_keys

}

The $::home_root variable comes from the custom fact provided below.

facter

Here's the custom fact that returns the root user's home directory:

# Get Home directory

Facter.add("home_root") do

setcode do

Facter::Util::Resolution.exec("echo ~root")

end

end
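`echo ~root` relies on the shell expanding the tilde. Ruby's standard Etc module can read the same information from the password database directly, without running a command; a minimal sketch (the `home_of` method name is hypothetical) whose call could replace the exec inside setcode:

```ruby
require 'etc'

# Returns the home directory of the given user from the password database
# (what `echo ~root` prints), or nil if the user does not exist.
def home_of(user = "root")
  Etc.getpwnam(user).dir
rescue ArgumentError
  # Etc.getpwnam raises ArgumentError for unknown users.
  nil
end

puts home_of("root")
```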

ssh_keys.pp

Here I add the keys, both for access from other servers and for users:

/*

OpenSSH Module for Puppet

Made by Pierre Mavro

*/

class openssh::ssh_keys inherits openssh::common {

###################################

# Servers

###################################

# Puppet master

ssh_add_key {