Configuration and Usage of Solaris Zones (Containers)

Introduction

Zones (or Containers) are:

- Virtual instances of Solaris

- Software partitions for the OS

A large Sun Fire server with hardware domains allows many isolated systems to be created. Zones achieve this in software and are far more flexible: it is easy to move individual CPUs between zones as needed, or to configure more sophisticated ways of sharing CPUs and memory.

Configuration

There are two general zone types to choose from when creating a zone:

- Small zone (also known as a “Sparse Root zone”): the default. It consumes the least disk space and has the best performance and the best security.

- Big zone (also known as a “Whole Root zone”): the zone has its own /usr files, which can be modified independently.

If you aren’t sure which to choose, pick the small zone. Below are examples of installing each zone type as a starting point for Zone Resource Controls.

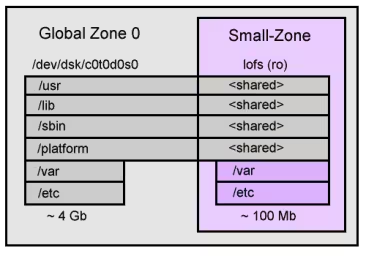

Small Zone

This demonstrates creating a simple zone that uses the default settings, which share most of the operating system with the global zone. The final layout is shown in image 1.

Creating such a zone involves letting the system pick the default settings, which include read-only loopback filesystem (lofs) mounts that share most of the OS. The following commands were used:

zonecfg -z small-zone

small-zone: No such zone configured

Use 'create' to begin configuring a new zone.

zonecfg:small-zone> create

zonecfg:small-zone> set autoboot=true

zonecfg:small-zone> set zonepath=/export/small-zone

zonecfg:small-zone> add net

zonecfg:small-zone:net> set address=192.168.2.101

zonecfg:small-zone:net> set physical=hme0

zonecfg:small-zone:net> end

zonecfg:small-zone> info

zonepath: /export/small-zone

autoboot: true

pool:

inherit-pkg-dir:

dir: /lib

inherit-pkg-dir:

dir: /platform

inherit-pkg-dir:

dir: /sbin

inherit-pkg-dir:

dir: /usr

net:

address: 192.168.2.101

physical: hme0

zonecfg:small-zone> verify

zonecfg:small-zone> commit

zonecfg:small-zone> exit

zoneadm list -cv

ID NAME STATUS PATH

0 global running /

- small-zone configured /export/small-zone

The new zone is in the configured state. The inherit-pkg-dir entries are filesystems that will be shared read-only from the global zone via lofs (the loopback filesystem). This avoids copying the entire operating system during the install, but can make adding packages to small-zone difficult, as /usr is read-only. (See the big-zone example, which uses a different approach.)
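The interactive session above can also be scripted, since zonecfg accepts a file of subcommands with -f. The following is a minimal sketch, assuming a POSIX shell such as ksh or bash; the function name make_sparse_zone_cfg is hypothetical and not part of Solaris.

```shell
# Hypothetical helper: print the zonecfg subcommands for a sparse root zone.
# Arguments: zonepath, IP address, physical network interface.
make_sparse_zone_cfg() {
    zonepath=$1
    address=$2
    nic=$3
    # A plain "create" uses the default template, which adds the
    # inherit-pkg-dir entries for /lib, /platform, /sbin and /usr.
    printf '%s\n' \
        'create' \
        'set autoboot=true' \
        "set zonepath=$zonepath" \
        'add net' \
        "set address=$address" \
        "set physical=$nic" \
        'end' \
        'verify' \
        'commit'
}
```

For example, `make_sparse_zone_cfg /export/small-zone 192.168.2.101 hme0 > /tmp/small-zone.cfg` followed by `zonecfg -z small-zone -f /tmp/small-zone.cfg` should reproduce the configuration above.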

The zonecfg command has saved the configuration to an XML file, /etc/zones/small-zone.xml:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE zone PUBLIC "-//Sun Microsystems Inc//DTD Zones//EN" "file:///usr/share/lib/xml/dtd/zonecfg.dtd.1">

<!--

DO NOT EDIT THIS FILE. Use zonecfg(1M) instead.

-->

<zone name="small-zone" zonepath="/export/small-zone" autoboot="true">

<inherited-pkg-dir directory="/lib"/>

<inherited-pkg-dir directory="/platform"/>

<inherited-pkg-dir directory="/sbin"/>

<inherited-pkg-dir directory="/usr"/>

<network address="192.168.2.101" physical="hme0"/>

</zone>

Next we create the zonepath directory with mode 700 and install the zone; the install takes around 10 minutes to initialise the packages the new zone needs. A verify is run first to check that our zone configuration is valid, then we run the install, and finally we boot the zone:

mkdir /export/small-zone

chmod 700 /export/small-zone

zoneadm -z small-zone verify

zoneadm -z small-zone install

Preparing to install zone <small-zone>.

Creating list of files to copy from the global zone.

Copying <2574> files to the zone.

Initializing zone product registry.

Determining zone package initialization order.

Preparing to initialize <987> packages on the zone.

Initialized <987> packages on zone.

Zone <small-zone> is initialized.

Installation of these packages generated warnings: <SUNWcsr SUNWdtdte>

The file </export/small-zone/root/var/sadm/system/logs/install_log> contains a log of the zone installation.

zoneadm list -cv

ID NAME STATUS PATH

0 global running /

- small-zone installed /export/small-zone

zoneadm -z small-zone boot

zoneadm list -cv

ID NAME STATUS PATH

0 global running /

1 small-zone running /export/small-zone
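The install steps above can be wrapped in a single function that stops at the first failing step. This is a sketch, not part of the original procedure: the function name is hypothetical, it assumes a POSIX shell such as ksh or bash, and setting RUN=echo prints each command instead of running it, which gives a dry run.

```shell
# Hypothetical wrapper around the zone install steps.
# Set RUN=echo for a dry run that only prints each command.
install_and_boot_zone() {
    zone=$1
    zonepath=$2
    ${RUN:-} mkdir -p "$zonepath" || return 1
    # The zonepath must be accessible only by its owner (root).
    ${RUN:-} chmod 700 "$zonepath" || return 1
    ${RUN:-} zoneadm -z "$zone" verify || return 1
    ${RUN:-} zoneadm -z "$zone" install || return 1
    ${RUN:-} zoneadm -z "$zone" boot
}
```

Running `RUN=echo; install_and_boot_zone small-zone /export/small-zone` first shows exactly what will be executed.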

We can see that small-zone is up and running. Now we log in to its console for the first time to answer the system identification questions, such as the time zone:

zlogin -C small-zone

[Connected to zone 'small-zone' console]

100/100

What type of terminal are you using?

1) ANSI Standard CRT

2) DEC VT52

3) DEC VT100

4) Heathkit 19

5) Lear Siegler ADM31

6) PC Console

7) Sun Command Tool

8) Sun Workstation

9) Televideo 910

10) Televideo 925

11) Wyse Model 50

12) X Terminal Emulator (xterms)

13) CDE Terminal Emulator (dtterm)

14) Other

Type the number of your choice and press Return: 13

...standard questions...

The system then reboots. To get an idea of what this zone actually is, let’s poke around its zonepath from the global zone:

/> cd /export/small-zone

/export/small-zone> ls

dev root

/export/small-zone> cd root

/export/small-zone/root> ls

bin etc home mnt opt proc system usr

dev export lib net platform sbin tmp var

/export/small-zone/root> grep lofs /etc/mnttab

/export/small-zone/dev /export/small-zone/root/dev lofs zonedevfs,dev=4e40002 1110446770

/lib /export/small-zone/root/lib lofs ro,nodevices,nosub,dev=2200008 1110446770

/platform /export/small-zone/root/platform lofs ro,nodevices,nosub,dev=2200008 1110446770

/sbin /export/small-zone/root/sbin lofs ro,nodevices,nosub,dev=2200008 1110446770

/usr /export/small-zone/root/usr lofs ro,nodevices,nosub,dev=2200008 1110446770

/export/small-zone/root> du -hs etc var

38M etc

30M var

/export/small-zone/root>

Of the directories that are not lofs-shared from the global zone, the main ones are /etc and /var. Together they add up to around 70 Mbytes (38M + 30M), which is roughly how much extra disk space was required to create small-zone.
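Since du -h rounds its figures, the same total can be computed from exact Kbyte counts. A minimal sketch, assuming a POSIX shell and awk; the function name sum_du_mbytes is hypothetical.

```shell
# Hypothetical helper: total the first column of "du -sk" output (Kbytes)
# and print the result in whole Mbytes (fractions are truncated).
sum_du_mbytes() {
    awk '{ kb += $1 } END { printf "%d\n", kb / 1024 }'
}
```

From /export/small-zone/root, `du -sk etc var | sum_du_mbytes` prints the combined size of the two private directories.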

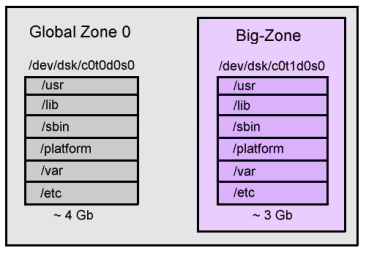

Big Zone

This demonstrates creating a zone that resides on its own slice and has its own copy of the operating system. The final layout is shown in image 2.

First we create a UFS filesystem on the slice, add it to /etc/vfstab and mount it:

newfs /dev/dsk/c0t1d0s0

newfs: construct a new file system /dev/rdsk/c0t1d0s0: (y/n)? y

/dev/rdsk/c0t1d0s0: 16567488 sectors in 16436 cylinders of 16 tracks, 63 sectors

8089.6MB in 187 cyl groups (88 c/g, 43.31MB/g, 5504 i/g)

super-block backups (for fsck -F ufs -o b=#) at:

32, 88800, 177568, 266336, 355104, 443872, 532640, 621408, 710176, 798944,

15700704, 15789472, 15878240, 15967008, 16055776, 16144544, 16233312,

16322080, 16410848, 16499616,

ed /etc/vfstab

362

$a

/dev/dsk/c0t1d0s0 /dev/rdsk/c0t1d0s0 /export/big-zone ufs 1 yes -

.

w

455

q

mkdir /export/big-zone

mountall

checking ufs filesystems

/dev/rdsk/c0t1d0s0: is clean.

mount: /tmp is already mounted or swap is busy
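The ed session above can also be done non-interactively, with a guard against adding the same mount point twice. This is a sketch assuming a POSIX shell and a POSIX awk (on Solaris 10 that means nawk or /usr/xpg4/bin/awk, since the legacy /usr/bin/awk lacks -v); the function name and the VFSTAB override are hypothetical.

```shell
# Hypothetical non-interactive alternative to editing /etc/vfstab with ed.
# VFSTAB defaults to /etc/vfstab; point it at a copy to try it out first.
add_vfstab_entry() {
    dsk=$1
    rdsk=$2
    mnt=$3
    vfstab=${VFSTAB:-/etc/vfstab}
    # Field 3 is the mount point; comment lines are skipped.
    if awk -v m="$mnt" '$1 !~ /^#/ && $3 == m { found = 1 }
                        END { exit found ? 0 : 1 }' "$vfstab"
    then
        echo "$mnt is already listed in $vfstab" >&2
        return 1
    fi
    # Fields: device to mount, device to fsck, mount point, FS type,
    # fsck pass, mount at boot, mount options.
    printf '%s\t%s\t%s\tufs\t1\tyes\t-\n' "$dsk" "$rdsk" "$mnt" >> "$vfstab"
}
```

For this example: `add_vfstab_entry /dev/dsk/c0t1d0s0 /dev/rdsk/c0t1d0s0 /export/big-zone`.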

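The /tmp message from mountall is harmless, since /tmp was already mounted. Now we configure the zone without any inherit-pkg-dir entries by using the -b option of create, which starts from a blank configuration instead of the default template.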

zonecfg -z big-zone

big-zone: No such zone configured

Use 'create' to begin configuring a new zone.

zonecfg:big-zone> create -b

zonecfg:big-zone> set autoboot=true

zonecfg:big-zone> set zonepath=/export/big-zone

zonecfg:big-zone> add net

zonecfg:big-zone:net> set address=192.168.2.201

zonecfg:big-zone:net> set physical=hme0

zonecfg:big-zone:net> end

zonecfg:big-zone> info

zonepath: /export/big-zone

autoboot: true

pool:

net:

address: 192.168.2.201

physical: hme0

zonecfg:big-zone> verify

zonecfg:big-zone> commit

zonecfg:big-zone> exit

The configuration is saved to /etc/zones/big-zone.xml:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE zone PUBLIC "-//Sun Microsystems Inc//DTD Zones//EN" "file:///usr/share/lib/xml/dtd/zonecfg.dtd.1">

<zone name="big-zone" zonepath="/export/big-zone" autoboot="true">

<network address="192.168.2.201" physical="hme0"/>

</zone>
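zoneadm expects the zonepath to be mode 700 before it will install into it, which is why chmod 700 is run next. A small pre-install check could look like the following sketch (POSIX shell assumed; the function name is hypothetical, and it checks only the mode, not that the owner is root).

```shell
# Hypothetical pre-install check: succeed only if $1 is a directory
# with mode 700 (rwx for the owner, nothing for group or others).
zonepath_mode_ok() {
    [ -d "$1" ] || return 1
    perms=$(ls -ld "$1" | awk '{ print $1 }')
    # Some systems append "+" or "." to flag ACLs or security labels.
    case $perms in
        drwx------*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example: `zonepath_mode_ok /export/big-zone || chmod 700 /export/big-zone`.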

chmod 700 /export/big-zone

df -h /export/big-zone

Filesystem size used avail capacity Mounted on

/dev/dsk/c0t1d0s0 7.8G 7.9M 7.7G 1% /export/big-zone

zoneadm -z big-zone verify

zoneadm -z big-zone install

Preparing to install zone .

Creating list of files to copy from the global zone.

Copying <118457> files to the zone.

...

After the zone has been installed and booted, we check how much of the dedicated zone slice is used:

df -h /export/big-zone

Filesystem size used avail capacity Mounted on

/dev/dsk/c0t1d0s0 7.8G 2.9G 4.8G 39% /export/big-zone

Wow! 2.9 Gbytes, pretty much most of Solaris 10. This zone resides on its own slice and can have many packages added as though it were a separate system. Using inherit-pkg-dir, as small-zone does, can be great, but it’s good to know we can do this as well.

Management

View or list all running zones

The zoneadm command can be used to list active or running zones.

To view a list with brief status information about the zones, use the following command from the global zone:

zoneadm list -vc

ID NAME STATUS PATH

0 global running /

2 zone2 running /opt/zone2

The -v option adds the zone ID, status and path to the zone name; the -c option lists all configured zones, not only the running ones.
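For scripts, zoneadm also has a parsable output mode (-p) with colon-separated fields. The sketch below assumes the zone name is field 2 and the state is field 3, as in the Solaris 10 parsable format; the function name zone_state is hypothetical, and a POSIX awk is assumed (nawk on Solaris 10).

```shell
# Hypothetical helper: read "zoneadm list -cp" output on stdin and print
# the state of the zone named $1; fails if the zone is not listed.
zone_state() {
    awk -F: -v z="$1" '$2 == z { print $3; found = 1 }
                       END { exit found ? 0 : 1 }'
}
```

For example, `zoneadm list -cp | zone_state small-zone` should print installed, running, and so on.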

Boot or start a zone

To boot a Solaris 10 zone called testzone, use the following command as root in the global zone:

zoneadm -z testzone boot

You can watch the system boot by logging into the zone’s console:

zlogin -C testzone
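Stopping a zone is the reverse operation, and there are two common ways to do it from the global zone: a clean shutdown run inside the zone via zlogin, which executes the zone’s shutdown scripts, or zoneadm halt, which stops the zone immediately without them. A sketch, assuming a POSIX shell; the function name is hypothetical and RUN=echo gives a dry run.

```shell
# Hypothetical helper for stopping a zone from the global zone.
# Set RUN=echo for a dry run that only prints the command.
stop_zone() {
    zone=$1
    case ${2:-clean} in
        clean)
            # Runs the Solaris shutdown command inside the zone.
            ${RUN:-} zlogin "$zone" shutdown -y -g0 -i0 ;;
        halt)
            # Stops the zone at once, skipping its shutdown scripts.
            ${RUN:-} zoneadm -z "$zone" halt ;;
        *)
            echo "usage: stop_zone zone [clean|halt]" >&2
            return 2 ;;
    esac
}
```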

Uninstall and delete a zone

To remove a non-global zone from your Solaris 10 installation, follow these steps.

To completely remove a zone called ‘testzone’ from your system, log in to the global zone and become root. If the zone is running, halt it first with zoneadm -z testzone halt, since it must be in the installed state before it can be uninstalled. The first command is the opposite of the ‘install’ option of zoneadm and deletes all of the files under the zonepath:

zoneadm -z testzone uninstall

At this point, the zone is in the configured state. To remove it completely from the system use:

zonecfg -z testzone delete

There is no undo, so make sure this is what you want to do before you do it.
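The removal steps can be combined into one function. This sketch (POSIX shell assumed; the function name is hypothetical) uses the -F options of zoneadm uninstall and zonecfg delete, which skip the confirmation prompts, so it refuses to touch the global zone. Setting RUN=echo prints the commands instead of running them.

```shell
# Hypothetical helper combining the removal steps.
# Set RUN=echo for a dry run that only prints the commands.
remove_zone() {
    zone=$1
    [ -n "$zone" ] && [ "$zone" != global ] || {
        echo "refusing to remove '$zone'" >&2
        return 1
    }
    # uninstall needs a stopped zone; a halt error is ignored in case
    # the zone is already in the installed state.
    ${RUN:-} zoneadm -z "$zone" halt 2>/dev/null
    ${RUN:-} zoneadm -z "$zone" uninstall -F || return 1
    ${RUN:-} zonecfg -z "$zone" delete -F
}
```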

Resource Control

Use cpu-shares to control zone computing resources

Although the Solaris 10 8/07 OS allows you to specify how many CPUs a zone can use, sometimes this does not work out well. For example, I use dedicated-cpu for three zones on an 8-core Sun Fire T2000 server. Each zone has ncpus set to 4-20, with a different importance value. However, when the system is fully utilized, the importance value does not always take effect: sometimes a zone with a lower importance value consumes a higher percentage of the computing resources than a zone with a higher importance value.

The following demonstrates that cpu-shares works well. zone.cpu-shares is only honoured by the Fair Share Scheduler (FSS), so dispadmin -d is used first to confirm that FSS is the default scheduling class.

root@bigfoot# dispadmin -d

FSS (Fair Share)

root@bigfoot# zonecfg -z global info rctl

rctl:

name: zone.cpu-shares

value: (priv=privileged,limit=4,action=none)

root@bigfoot# zonecfg -z bighead info rctl name=zone.cpu-shares

rctl:

name: zone.cpu-shares

value: (priv=privileged,limit=3,action=none)

root@bigfoot# zonecfg -z bighand info rctl name=zone.cpu-shares

rctl:

name: zone.cpu-shares

value: (priv=privileged,limit=3,action=none)
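Share values like the ones shown above can be set both in the zone configuration and on the running zone. This is a sketch assuming Solaris 10 8/07 or later (for the cpu-shares zonecfg property) and FSS as the default scheduler; the function name is hypothetical and RUN=echo gives a dry run.

```shell
# Hypothetical helper: set zone.cpu-shares persistently and on the live zone.
# Set RUN=echo for a dry run that only prints the commands.
set_cpu_shares() {
    zone=$1
    shares=$2
    case $shares in
        ''|*[!0-9]*) echo "shares must be a non-negative integer" >&2; return 1 ;;
    esac
    # Stored in the zone configuration; used from the next zone boot.
    ${RUN:-} zonecfg -z "$zone" "set cpu-shares=$shares" || return 1
    # Replaces the value on the running zone immediately.
    ${RUN:-} prctl -n zone.cpu-shares -r -v "$shares" -i zone "$zone"
}
```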

Generate 20 processes in zone bighead:

<username>@bighead> perl -e 'while (--$ARGV[0] and fork) {}; while () {}' 20 &

Generate 12 processes in zone bighand:

<username>@bighand> perl -e 'while (--$ARGV[0] and fork) {}; while () {}' 12 &

root@bigfoot# vmstat 3 3

kthr memory page disk faults cpu

r b w swap free re mf pi po fr de sr s1 s2 s3 s4 in sy cs us sy id

4 0 0 37954888 15215072 66 206 259 1 1 0 60 13 -0 -0 24 818 4186 1780 64 0 36

0 0 0 38747216 15152224 0 5 0 0 0 0 0 0 0 0 0 768 272 339 100 0 0

0 0 0 38746960 15151968 0 0 0 0 0 0 0 0 0 0 0 788 247 347 100 0 0

root@bigfoot# prstat -Z

ZONEID NPROC SWAP RSS MEMORY TIME CPU ZONE

1 55 202M 264M 1.6% 0:36:11 62% bighead

2 47 199M 263M 1.6% 0:20:29 37% bighand

0 49 219M 291M 1.8% 0:01:36 0.1% global

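As we see, when the CPUs are not oversubscribed, each zone uses as much of the computing resources as it needs. The 32 busy processes match the T2000’s 32 hardware threads (8 cores × 4 threads), and the run queue (r column) stays at 0, so bighead gets about 20/32 ≈ 62% and bighand about 12/32 ≈ 37%, despite their equal cpu-shares.

Now we generate 15 processes in the global zone (hostname bigfoot) to see how the bighead and bighand zones consume the computing resources: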

root@bigfoot# perl -e 'while (--$ARGV[0] and fork) {}; while () {}' 15 &

root@bigfoot# vmstat 3 3

kthr memory page disk faults cpu

r b w swap free re mf pi po fr de sr s1 s2 s3 s4 in sy cs us sy id

5 0 0 37520616 15249888 102 314 406 1 1 0 94 13 -0 -0 24 869 6466 2592 50 1 49

15 0 0 38745928 15151392 0 5 0 0 0 0 0 1 0 0 0 806 325 366 100 0 0

15 0 0 38745672 15151136 0 0 0 0 0 0 0 0 0 0 0 739 234 320 100 0 0

root@bigfoot# prstat -Z

ZONEID NPROC SWAP RSS MEMORY TIME CPU ZONE

0 65 228M 298M 1.8% 1:32:55 40% global

1 55 202M 264M 1.6% 1:59:48 30% bighead

2 47 199M 263M 1.6% 1:37:32 29% bighand

root@bigfoot# prstat -Z

ZONEID NPROC SWAP RSS MEMORY TIME CPU ZONE

0 65 228M 298M 1.8% 1:19:15 38% global

2 47 199M 263M 1.6% 1:27:31 31% bighand

1 55 202M 264M 1.6% 1:48:24 31% bighead

As we see, each zone is consuming a portion of the computing resources according to its cpu-shares value when the system’s computing resources are fully utilized.
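The expected split follows directly from the share values: when every zone has runnable work, FSS gives each zone its shares divided by the total shares of the busy zones, here 4/10, 3/10 and 3/10, i.e. 40%, 30% and 30%, close to the prstat figures above. A small sketch of that arithmetic (POSIX shell and awk assumed; the function name is hypothetical):

```shell
# Hypothetical helper: read "zone shares" pairs on stdin and print each
# zone's expected CPU percentage when all listed zones are busy.
share_percent() {
    awk '{ name[NR] = $1; shares[NR] = $2; total += $2 }
         END {
             for (i = 1; i <= NR; i++)
                 printf "%s %.1f\n", name[i], 100 * shares[i] / total
         }'
}
```

For example, `printf 'global 4\nbighead 3\nbighand 3\n' | share_percent` prints global 40.0, bighead 30.0 and bighand 30.0.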

The swap property of capped-memory is virtual swap space, not physical swap space

For zone bighead, running Oracle Database 10g Enterprise Edition with 2 Gbytes of total memory (1.5 Gbytes System Global Area [SGA] and 0.5 Gbytes Process Global Area [PGA]), we might simply allow a maximum of 3 Gbytes of physical memory and 1.5 Gbytes of swap space, as follows:

zonecfg:bighead> info capped-memory

capped-memory:

physical: 3G

[swap: 1.5G]

Start up the Oracle database in zone bighead:

oracle@bighead> sqlplus /nolog

SQL> conn / as sysdba

SQL> startup

ORA-27102: out of memory

SVR4 Error: 12: Not enough space

So the swap here is not physical swap space. According to Sun’s documentation, swap here means the total amount of swap that can be consumed by user process address space mappings and tmpfs mounts for this zone. When setting it, scale the capped-memory swap value in proportion to the system’s total swap relative to its free physical memory, as reported by vmstat. For example:

<username>@bigfoot> vmstat -p 5

memory page executable anonymous filesystem

swap free re mf fr de sr epi epo epf api apo apf fpi fpo fpf

38671464 15156336 40 77 1 0 5 1442 0 0 242 0 0 44 1 1

38875352 15312016 0 3 0 0 0 0 0 0 0 0 0 0 0 0

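In our case, vmstat reports about 38 million Kbytes of swap and 15 million Kbytes of free memory, so the swap cap should be 3 × (38 / 15) ≈ 7.6 Gbytes, rounded down to 7 Gbytes.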
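The rule of thumb above can be computed from vmstat -p output directly. This sketch assumes that swap and free (both in Kbytes) are the first two fields of each data row and uses the last row, i.e. the most recent interval; the function name is hypothetical and a POSIX awk is assumed (nawk on Solaris 10). Because the result is a ratio times the physical cap, it comes out in the same unit as the cap.

```shell
# Hypothetical helper: read "vmstat -p" output on stdin and print
# physical_cap * (swap / free), using the last data row.
swap_cap_gb() {
    awk -v phys="$1" '
        # Data rows start with a number; header rows start with a word.
        $1 ~ /^[0-9]+$/ && NF >= 2 { swap = $1; free = $2 }
        END {
            if (free > 0) printf "%.1f\n", phys * swap / free
            else exit 1
        }'
}
```

For example, `vmstat -p 5 2 | swap_cap_gb 3` computes the cap for a 3 Gbyte physical limit; with the second sample row above it gives 7.6.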

Sometimes, a zone consumes more physical memory than the maximum limit

zonecfg:bighead> info capped-memory

capped-memory:

physical: 1G

[swap: 7G]

[locked: 1G]

The Oracle database took a while to start up. During startup, the Resident Set Size (RSS) memory consumed by the zone fluctuated as follows:

# prstat -Z

ZONEID NPROC SWAP RSS MEMORY TIME CPU ZONE

36 54 1824M 158M 1.0% 0:01:54 0.5% bighead

36 54 1824M 1779M 11% 0:01:59 0.3% bighead

36 55 1828M 258M 1.6% 0:02:01 0.6% bighead

36 55 1829M 1788M 11% 0:02:13 0.3% bighead

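But 1779 Mbytes is much more than the 1 Gbyte cap. Sun is aware of this known bug. (The physical cap is enforced by the resource capping daemon, rcapd, which samples the zone’s RSS periodically and then pages memory out, so the RSS can exceed the cap between samples.)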
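Samples like these can be flagged automatically. The sketch below assumes the prstat -Z zone summary layout shown above (RSS in column 4 with a K, M or G suffix, zone name in the last column); the function name is hypothetical and a POSIX awk is assumed (nawk on Solaris 10).

```shell
# Hypothetical helper: read "prstat -Z" output on stdin and print the
# zone name and RSS (Mbytes) of each sample above the cap (Mbytes).
over_cap() {
    awk -v zone="$1" -v cap="$2" '
        $NF == zone {
            rss = $4
            unit = substr(rss, length(rss))          # K, M or G suffix
            mb = substr(rss, 1, length(rss) - 1) + 0
            if (unit == "G") mb *= 1024
            else if (unit == "K") mb /= 1024
            if (mb > cap) printf "%s %.0f\n", zone, mb
        }'
}
```

For example, `prstat -Z 5 | over_cap bighead 1024` reports every interval in which bighead exceeds 1 Gbyte.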

FAQ

How to exit zlogin

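To exit a zlogin console session, type the default escape sequence at the start of a line:

~.

To use a different escape character, pass the -e option when launching zlogin. This is useful over ssh, which also treats ~. as its own escape sequence. With -e @ as below, type @. to exit: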

zlogin -C -e @ big-zone

Adding a file system to a running zone

I needed to add a second file system to one of my Solaris 10 zones this morning, without rebooting the zone. Since the global zone uses loopback mounts to present file systems to zones, adding a new file system was as easy as loopback-mounting it into the zone’s file system:

mount -F lofs /filesystems/zone1oracle03 /zones/zone1/root/ora03

Once the file system was mounted, I added it to the zone configuration and then verified it was mounted:

$ mount

Now to update my ASM disk group to use the storage.
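The zonecfg step that records the new file system in the zone configuration is not shown above; without it, the manual loopback mount would not survive a zone reboot. A minimal sketch, assuming the zone is named zone1 (inferred from the /zones/zone1 path) and the file system should appear at /ora03 inside it; the function name is hypothetical.

```shell
# Hypothetical helper: print zonecfg subcommands that add an lofs
# file system to the zone configuration.
make_lofs_fs_cfg() {
    dir=$1       # mount point as seen inside the zone
    special=$2   # directory in the global zone
    printf '%s\n' \
        'add fs' \
        "set dir=$dir" \
        "set special=$special" \
        'set type=lofs' \
        'end' \
        'verify' \
        'commit'
}
```

For example, `make_lofs_fs_cfg /ora03 /filesystems/zone1oracle03 > /tmp/fs.cfg` followed by `zonecfg -z zone1 -f /tmp/fs.cfg`.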

References

http://www.solarisinternals.com/wiki/index.php/Zones

http://www.sun.com/bigadmin/content/zones/

Manage Easily Pools with Kpool GUI

http://www.sun.com/bigadmin/content/submitted/zone_resource_control.jsp

Assigning System Resources to Solaris 10 Zones Without Reboot

http://prefetch.net/blog/index.php/2009/04/12/adding-a-file-system-to-a-running-zone/

Last updated 11 Dec 2009, 21:43 +0200.